Heart attacks strike about 1.2 million people every year in America alone, many of them fatally. Most are caused by coronary artery disease–the biggest killer of both men and women in the U.S.–and roughly 70 percent of those strike without warning. Coronary artery disease is sneaky like that. Symptoms generally don’t show until someone is on the floor, short of breath, wondering what just kicked them in the chest. Doctors battling these cardiac blockages generally enter the fight at a severe disadvantage. The disease almost always benefits from the element of surprise.

“We have no way to predict where the blockages will be, and a lot of the time we catch the stenoses too late,” says Dr. Frank Rybicki, director of the Applied Imaging Science Laboratory at Brigham and Women’s Hospital in Boston, referring to arterial blockages by their clinical name. “Wouldn’t it be great and lifesaving if we could predict where a lesion will be ahead of time? If you could catch a lesion before it causes symptoms, before it causes a heart attack, you could do a lot of good.”

Rybicki and his professional peers can’t see the future, but increasingly computers can. The same capacity for algorithmic prediction that allows Netflix to know what movies you want to watch or astronomers to model the complexity of the cosmos is helping an international team of researchers–including computer scientists and doctors from Harvard, medical doctors and imaging experts from Brigham and Women’s Hospital (including Rybicki), a team of computer scientists and physicists in Italy, and chip designers at NVIDIA–to mash up fluid dynamics, computer science, physics, and cardiac medicine via a computer custom-built from graphics processors.

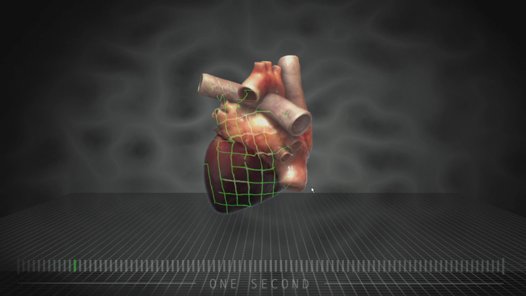

Called multiscale hemodynamics, the technology not only lets doctors see exactly how blood is moving through a particular patient’s heart, but also predicts where future arterial blockages are likely to form, tipping the scales back in doctors’ favor. In essence, it predicts heart attacks before they happen. And it does all of this using nothing more than a simple CT scan.

That’s a marked improvement over the current method of peering into the inner workings of the heart. Heart attacks occur when plaque builds up in the coronary arteries around the heart, leading to blockages that restrict blood flow. That plaque develops in areas within the arterial trees where stress on the arterial walls is low. It’s like an eddy in a stream–the places in the stream where blood slows and pools are the places where life-threatening plaque develops.
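The link between low wall stress and plaque can be made concrete with a toy calculation. The sketch below is not the researchers' model. It assumes steady, laminar (Poiseuille) flow in a straight rigid tube, for which wall shear stress is τ = 4μQ/(πr³). The viscosity, flow rate, radii, and the `LOW_SHEAR_PA` cutoff are illustrative values chosen for the example, not clinical figures.

```python
import math

# Illustrative assumptions, not values from the article or clinical thresholds.
BLOOD_VISCOSITY_PA_S = 0.0035   # assumed constant (Newtonian) viscosity
LOW_SHEAR_PA = 0.5              # hypothetical cutoff for "low" wall shear stress


def wall_shear_stress(flow_m3_s, radius_m, viscosity=BLOOD_VISCOSITY_PA_S):
    """Wall shear stress (Pa) for steady Poiseuille flow in a rigid tube."""
    return 4.0 * viscosity * flow_m3_s / (math.pi * radius_m ** 3)


def flag_low_shear(flow_m3_s, radii_m, threshold=LOW_SHEAR_PA):
    """Return indices of segments whose wall shear stress is below threshold."""
    return [
        i for i, r in enumerate(radii_m)
        if wall_shear_stress(flow_m3_s, r) < threshold
    ]


# Hypothetical vessel: the same flow passes through segments of different radius.
flow = 1.0e-6                               # 1 mL/s expressed in m^3/s
radii = [0.0015, 0.0015, 0.0025, 0.0015]    # the third segment is wider
low_segments = flag_low_shear(flow, radii)  # indices of low-shear segments
```

In this toy vessel only the wider third segment falls below the cutoff, because the same volume of blood moves through it more slowly. Real arterial trees branch and curve, which is why a patient-specific simulation is needed rather than a closed-form formula like this one.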

But doctors currently have no idea where those places are. Every patient’s arterial trees are different, possessing unique geometries that affect the way blood flows through them, so there’s no universal model. If a doctor suspects a patient may be developing coronary artery disease for any reason, the current standard course of action involves threading a camera-equipped catheter through the circulatory system and into the heart so doctors can look for the plaque directly. In other words, patients undergo surgery before doctors are even sure there is a problem. It’s invasive, expensive, and physically taxing on the human body.

Compare that to computed tomography (CT). CT is a cheap, non-invasive imaging technology used for everything from identifying and mapping complex bone fractures to hunting for tumors. Every radiology lab has a CT scanner, and though CT technology has been around for decades, the machines are still improving at an admirable clip, delivering higher spatial resolutions all the time. Better inputs. Richer data.

Researchers at Brigham and Women’s Applied Imaging Science Laboratory could see the potential in the richer CT data emerging in recent years, but they weren’t equipped to create the kinds of models that can predict myocardial infarctions. Recognizing that their medical imaging problem was also a computer science problem, they enlisted the help of computer scientists, including Dr. Efthimios Kaxiras, director of the Institute for Applied Computational Science at Harvard.

Applying complex physics and fluid dynamics equations–formulas requiring mind-numbing numbers of individual computations–Kaxiras and his colleagues began modeling the way blood flows through the arterial trees of an individual patient from CT scans of the patient’s heart. And just like that, doctors could now peer inside a patient’s heart without actually venturing inside. Their holy grail–the ability to see heart blockages forming before they turn into heart attacks–was within reach.

But from a computer modeling standpoint this was anything but easy, Kaxiras says. Blood is a tricky medium to model, even for high-powered computing platforms, because it is made up of so many particles of varying sizes and characteristics. Variables abound: there are red blood cells, white blood cells, plasma, particulate matter, etc. Then you have to take into account things like the degree to which the blood is oxygenated, which affects the way it behaves as a fluid.

Tapping the resources of some of the country’s biggest, baddest supercomputers–IBM’s Blue Gene ran an early version of their models–the team was able to generate these working fluid dynamics models of the heart. But if they were going to revolutionize personalized medicine, they needed to somehow port their software away from these massive, expensive, water-cooled machines and bring it down to the clinical level.

“So we turned to this new technology that NVIDIA is providing through its graphics cards, and recoded the whole approach,” Kaxiras says. “These graphics cards offer a much, much cheaper way of doing the same calculation without losing any of the accuracy.”

GPUs are designed to manage the values of many pixels on a screen at once, distributing the workload across many cores rather than one (in other words, they have a special knack for parallel computing problems). And while they are not ideal for every kind of processing, they are particularly good at certain kinds of math problems. In the last few years, NVIDIA has been exploring ways to use its powerful GPU arrays to solve these complex mathematical problems, and it just so happens that one of the many computer science labs NVIDIA tapped to help it develop best practices for its GPUs was Kaxiras’s lab at Harvard.
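What makes a problem suit a GPU is that each output value can be computed independently from the previous state. The toy below is a sketch in plain Python, not the team's code and not GPU code. It performs one step of a simple neighbor-averaging update on a one-dimensional grid, where the hypothetical `update_cell` function reads only the old grid. Because no cell depends on another cell's new value, every call could in principle run on its own core at the same time.

```python
def update_cell(old, i, rate=0.25):
    """New value of cell i, computed only from the old grid (ends held fixed)."""
    if i == 0 or i == len(old) - 1:
        return old[i]
    return old[i] + rate * (old[i - 1] - 2.0 * old[i] + old[i + 1])


def step(old):
    """One update of the whole grid; each cell is independent of the others."""
    return [update_cell(old, i) for i in range(len(old))]


# Hypothetical starting state: a single spike in the middle of the grid.
grid = [0.0, 0.0, 4.0, 0.0, 0.0]
new_grid = step(grid)
```

The order in which cells are visited does not change the result, which is the property that lets hardware with many cores split the work. A fluid simulation has the same shape at a vastly larger scale, with millions of cells updated over many time steps.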

Between Brigham and Women’s computer science problem and NVIDIA’s GPUs, Kaxiras and his computer science colleagues found a harmonious fit. At this point, the work went overseas, where a team of Italian researchers–two computational physicists and two computer scientists with specialized experience programming these graphics processors–built a GPU-driven platform capable of running the multiscale hemodynamics models at a fraction of the cost and complexity of a supercomputer.

“This is completely new,” Kaxiras says. “This was completely outside the realm of possibility a year or two ago. This can be deployed in a hospital setting. A year or two ago, doctors would have to collaborate with someone like me to get these kinds of results, and that can take weeks or months.”

Now it takes an afternoon, and with every incremental improvement in the technology–in the quality of the CT scans, in the efficiency of the software, in the power of the GPUs, in the strength of the equations–the process grows a little faster.

“In five to ten years this technology is going to be so good and so well-oiled that you are going to be able to push a single button on the CT scanner and get all of this information in a few hours,” Rybicki says. “Or maybe in a few minutes. All of the pieces are there.”

Right now, Kaxiras, Rybicki, and their myriad collaborators on two continents are working to pull together a clinical prototype that puts all of the computational hardware in one package so it can be easily deployed in any radiology suite. That means multiscale hemodynamics could soon become a normal part of any patient’s regular medical care. Doctors will be able–for the price of a CT scan–to regularly peer inside their patients’ hearts and see them not only as they look right now, but as they will likely look in the future, enabling them to predict heart attacks before they happen and preemptively attack problems before they even have a chance to become threats.

How’s that for the element of surprise?