Doctors hope the future of cancer treatment is personal: Using genetics, they’ll be able to match patients with precisely the drug or treatment option that will fight their tumors. However, information on tumor genetics often isn’t linked with data on how well patients with those tumors did on particular treatments. This makes it difficult for researchers to tailor treatments to individual patients. “Sometimes all that’s known is how long patients lived who had a particular pathology, if that’s even known,” says Kenneth Kehl, a medical oncologist at the Dana-Farber Cancer Institute in Boston. “Asking questions like which mutations predict benefit from a particular treatment has been more challenging than one might expect.”

To help ease those challenges, Kehl worked on a team that developed a machine learning algorithm that pulls information from doctors' and radiologists' notes in electronic health records to track how individual patients' cancers progressed. The tool, described in a study published this week in the journal JAMA Oncology, might one day help identify patients who could benefit from clinical trials or other specific interventions. It is also part of a larger effort to bring artificial intelligence into oncology.

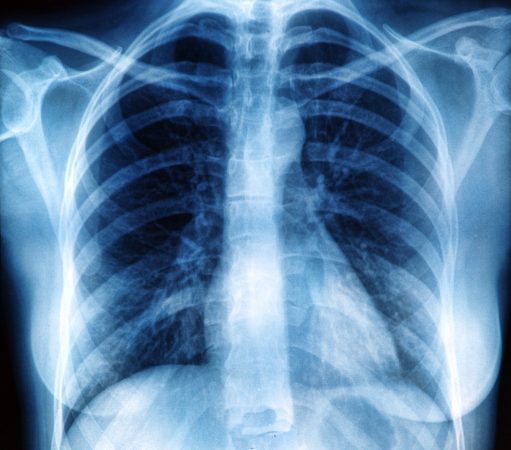

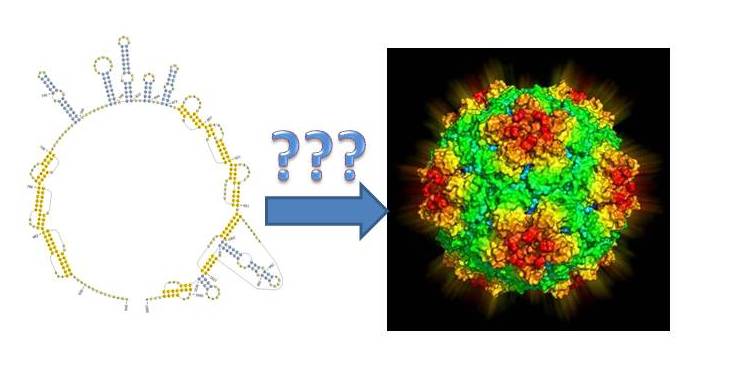

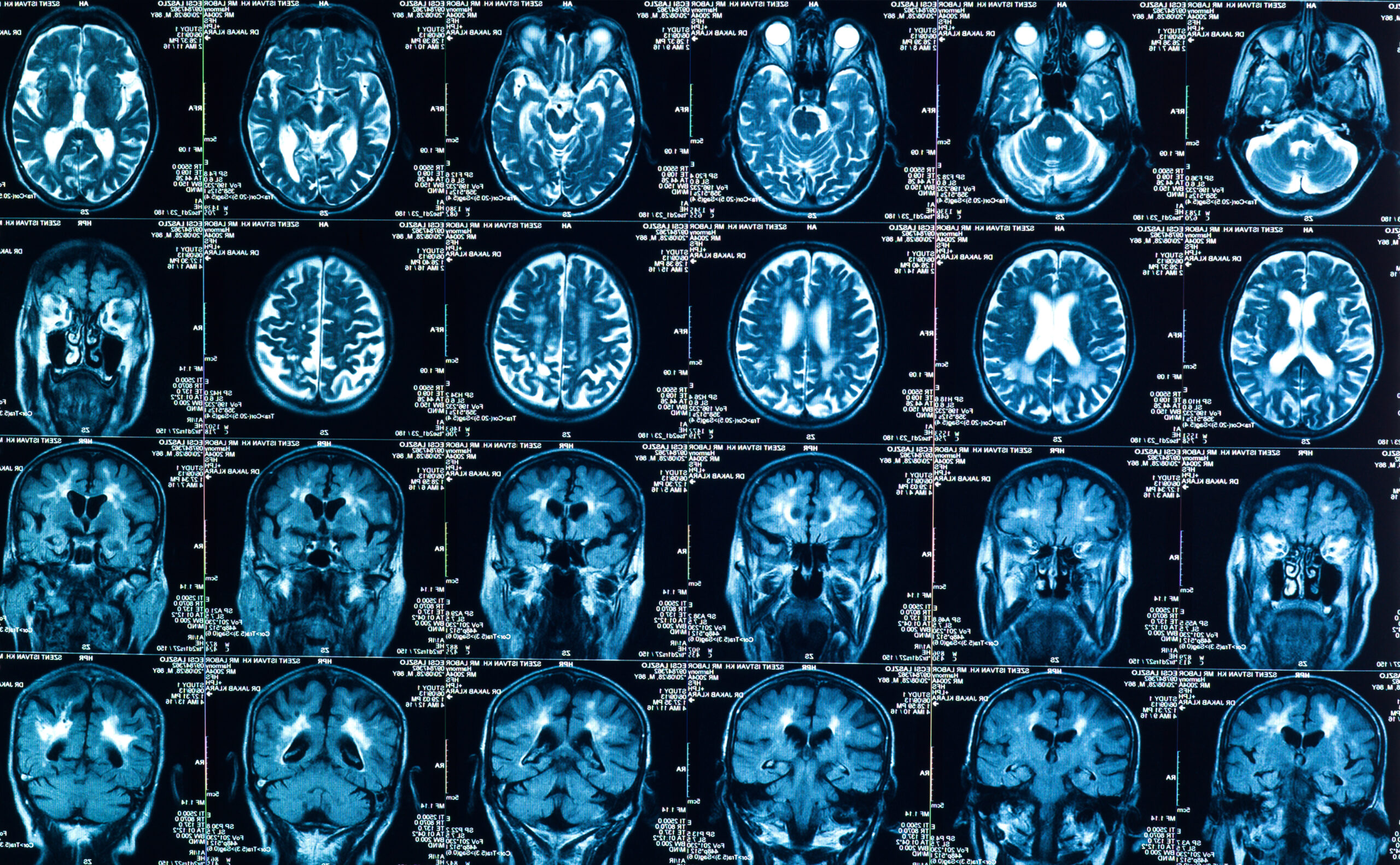

Most of the information about how tumors progress in cancer patients is contained in written notes from radiologists, who examine scans and track changes in the status of the cancer. Because these notes are free text, not choices from a drop-down menu or data points in a spreadsheet, most analytic methods can't extract the relevant information. The tool created in this study took advantage of recent advances in machine learning for language to pull those details out of electronic health records.

The machine learning system identified cancer outcomes as accurately as human readers did, and far more quickly. Human readers could get through only three patient records an hour, while the tool could analyze an entire cohort of thousands of cancer patients in around 10 minutes.

Hypothetically, Kehl says, the tool could be used to sweep the health records of every patient at an institution, identify those who are eligible for and in need of clinical trials, and match them to the best possible treatments based on the characteristics of their disease. "It's possible to find patients at scale," Kehl says.

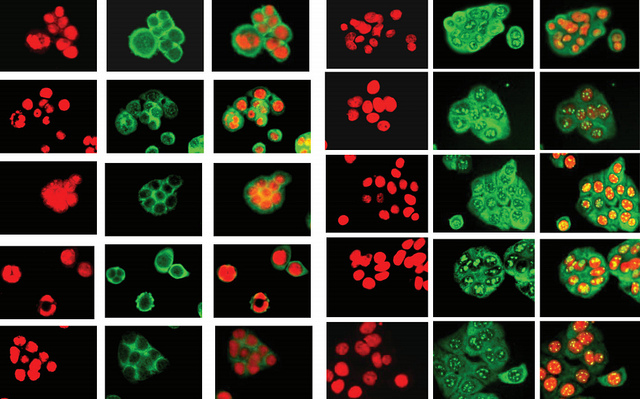

For this tool, the patients' scans had first been read by human radiologists. But artificial intelligence and machine learning can read images as well, and research shows that they can analyze scans of tumors as effectively as human radiologists. In another study published this month, radiologists and artificial intelligence experts partnered to develop an algorithm that determines whether lumps on the thyroid should be biopsied. They found that the tool's biopsy recommendations were similar to those of expert radiologists using the American College of Radiology (ACR) system.

Assessing thyroid lumps can be time-consuming, and radiologists can find the ACR system challenging to apply. "We wanted to see if deep learning can perform those decisions automatically," says study author Maciej Mazurowski, associate professor of radiology and electrical and computer engineering at Duke University.

There’s more work to be done on artificial intelligence and scan analysis before these tools can take the place of radiologists, Mazurowski says, but recent research indicates that it’s possible for AI to perform at the level of radiologists. “The final question, even if we can show that these work as well as humans, will be whether and to what extent it will be adopted into the healthcare system. It’s not just whether it works.”

Visual analysis is further along in medicine and oncology than textual analysis, Kehl says, but both could play a part in bringing machine learning into routine care. It might be possible, for example, to fold machine interpretation of scans into the broader analysis of electronic health records, he says. "That would mean looking at how much information we get from images themselves, how much do we get from human interpretation, and what could we get from the model looking at images," he says. "The optimal strategy still isn't known."

Going forward, artificial intelligence might help identify and monitor cancer progression, Kehl says. "It's figuring out how we can incorporate AI generally, and in imaging, pathology, and health records, into the clinical workflow," he says.