Autonomous cars may be capable of driving around on their own, but they still need to be able to communicate their intentions to other people on the road. When there is no driver in the car, a pedestrian crossing in front of it has no one to connect with in a reassuring, hey, don’t hit me human-to-human, eye-contact kind of way.

A company called Drive.ai is working on solving that problem. Its autonomous Nissan vans, part of a forthcoming robo-taxi service in Frisco, Texas, will have dynamic signs—one on the front and back, and one on each side—capable of displaying different messages, like “Waiting for you to cross.”

Why did the self-driving car cross the road?

Cars today tend to have people driving them. “We are taking that human out,” says Bijit Halder, vice president of product at Drive.ai. “But how do we substitute that same emotional connection and communication and comfort?”

The signs are a way of addressing the no-human-inside problem, and the cars’ orange and blue design is meant to be distinctive, so that they stand out and are easily recognizable to other drivers and pedestrians. They’re definitely not sleek.

“We weren’t optimizing for prettiness,” says Andrew Ng, an artificial intelligence expert and part of Drive.ai’s board. Compared to a standard car piloted by a real human, an autonomous vehicle has weaknesses and strengths. “It cannot make eye contact with you to let you know [it’s seen you]; it can’t recognize a construction worker’s hand gestures, waving the car forward,” Ng adds. In short, there’s only so much the AI can do right now. (On the strengths side, it’s not going to get distracted or drive drunk.)

While the Drive.ai team hasn’t finalized the messages that the vans will display, options include notifications like “passengers entering/exiting,” “pulling over” (with an arrow pointing in the right direction on the back and front signs), and “self-driving off,” so others know to actually pay attention to the human behind the wheel.

“I thought it would be really funny to have a ‘why did the chicken cross the road’ joke,” says Ng, lightheartedly. “But maybe that would be a misuse of the exterior display panels.”

Don MacKenzie, the head of the sustainable transportation lab at the University of Washington, notes, via email, that “it is certainly important for driverless vehicles to communicate with human drivers and other road users.” But while signs on the vehicle are one way to do this, they might not be a perfect solution. “What happens when the message is obstructed by an object or glare?” he wonders.

The company announced on Monday that it would offer the robo-taxi service, which will be free at first, starting in July in one section of Frisco, Texas. Office workers and others in the area will be able to hail a ride using an app. At first, a safety driver will be behind the wheel; later, that person will move to a passenger seat and function as a “chaperone.” Finally, the cars should become passenger-only, although there will be remote operators who can help out as needed.

Everything is different in Texas

Drive.ai isn’t the first company to plan a taxi service full of autonomous cars (Waymo has one in the works in the Phoenix, Arizona area, and Cruise, a part of GM, has a service planned for next year using autonomous vehicles that don’t even have steering wheels or pedals), but this is the first service of its kind in the Lone Star State. And then there’s Uber, which had self-driving cars on the roads in multiple places until one of its vehicles killed a pedestrian in March. The company has put its autonomous program on hold while the accident is investigated.

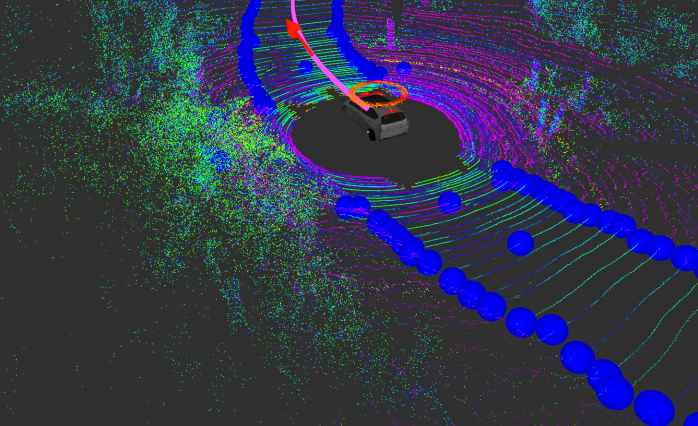

The signs on the Drive.ai vehicles broadcast to others what the cars are doing, but the cars need to know how to drive around on their own in the first place. Drive.ai uses an artificial intelligence technique called deep learning to teach the system. For example, when a car is near a traffic light, it needs not only to recognize that there is a traffic light somewhere in the scene, but also to know which color is lit up.

But actually getting their cars driving around Texas presented a new challenge. “We found that the traffic light designs are actually different here in Texas compared to in California,” Ng says. So they had to train their system to recognize the lights by showing it annotated images of the Texan traffic signals.

After they trained the neural network on the new lights, “the system started working very well,” Ng says. Neural networks are a common AI tool that can learn from data; in this case, the Drive.ai team is using a neural network to recognize both where the traffic light is in the image and what color it is.

But not everything is handled by AI: if the neural network identifies a traffic light as red, the fact that a red light means “don’t go” is simple enough to program into the system as a straightforward rule.
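To make that division of labor concrete, here is a minimal Python sketch of how a learned detector's output might feed a hand-written rule. It is an illustration only, not Drive.ai's code: the `Detection` class, the `action_for_light` function, the action names, and the 0.9 confidence threshold are all hypothetical, and stopping when the detector is unsure is an assumed conservative default.

```python
from dataclasses import dataclass
from enum import Enum


class LightColor(Enum):
    RED = "red"
    YELLOW = "yellow"
    GREEN = "green"


@dataclass
class Detection:
    """Hypothetical output of the learned traffic-light detector."""
    color: LightColor
    confidence: float  # detector's confidence, from 0.0 to 1.0


def action_for_light(detection: Detection, min_confidence: float = 0.9) -> str:
    """Hand-written rule layered on top of the network's prediction.

    The network decides what color the light is; this function only
    encodes what that color means for the car.
    """
    # Assumed conservative default: stop if the detector is unsure.
    if detection.confidence < min_confidence:
        return "stop"
    if detection.color is LightColor.RED:
        return "stop"  # a red light means "don't go"
    if detection.color is LightColor.YELLOW:
        return "prepare_to_stop"
    return "go"
```

In this sketch, a confident red detection such as `Detection(LightColor.RED, 0.97)` yields `"stop"`, while a confident green yields `"go"`. The hard part, recognizing the light at all, stays inside the neural network; the rule itself is a few lines that a person can read and check.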