“The Automatic Emergency Braking (AEB) or Autopilot systems may not function as designed, increasing the risk of a crash.” It’s a simple sentence, delivered with the calm finality of bureaucratic certainty. It is a literal post-mortem, the bottom-line-up-front from the National Highway Traffic Safety Administration’s investigation into the first fatal crash of an autonomous car—one made by Tesla Motors. The investigation into the crash closed today, and it will likely cast a long shadow over the future of self-driving cars, which have long been heralded as potentially life-saving devices.

There was no unique flaw in the Tesla that Joshua Brown, of Canton, Ohio, was driving on May 7th, 2016. Brown, who had recorded multiple YouTube videos praising his Tesla Model S and its Autopilot system, died at the scene after his car collided with a tractor-trailer crossing the highway west of Williston, Florida. The task of NHTSA's Office of Defects Investigation was to determine whether a specific failure, such as a production error or faulty part, was responsible for the death, and if so, to identify the changes needed to prevent such a failure in the future.

None was found. The Tesla’s Automatic Emergency Braking systems, according to the report, “are rear-end collision avoidance technologies that are not designed to reliably perform in all crash modes, including crossing path collisions.” Immediately after the crash, Tesla's explanation for the failure was that the car likely failed to distinguish the white tractor-trailer from the bright sky. The explanation may be even simpler: the system was designed to prevent rear-end collisions, so this was a collision it simply wasn’t built to see.

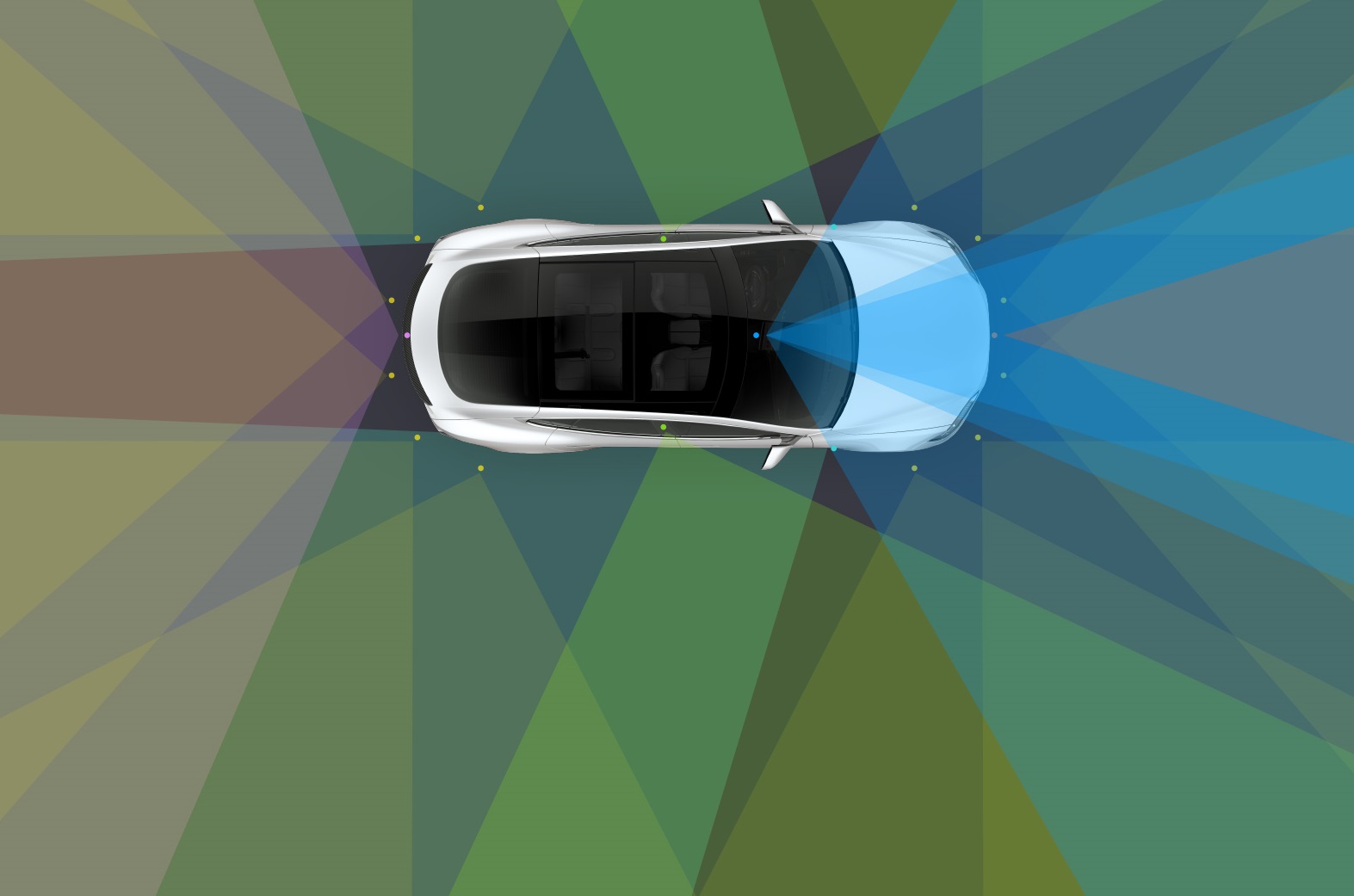

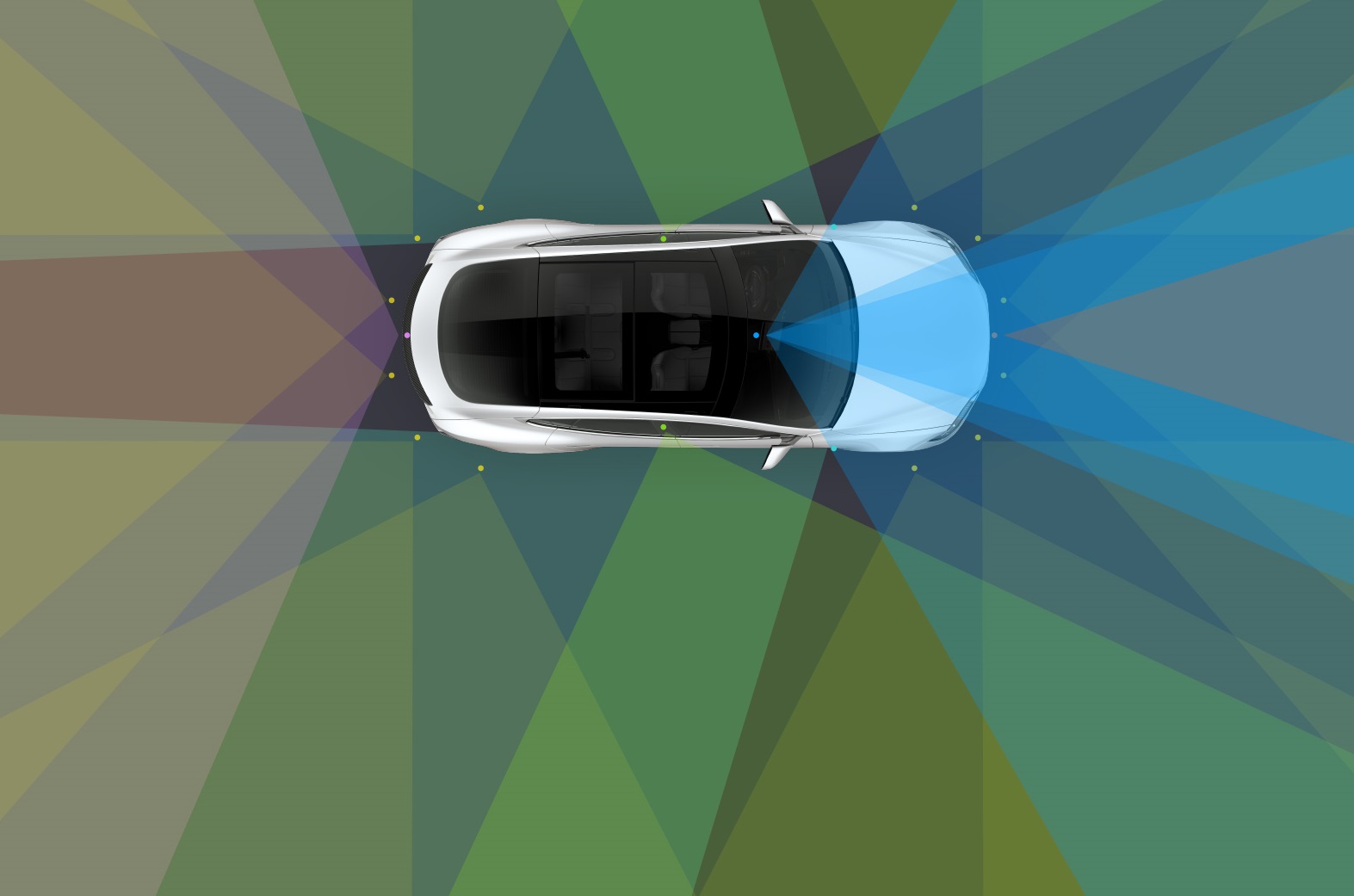

Tesla’s automatic emergency braking system uses cameras and radar to detect objects at dangerously close range, and, like every comparable system in the industry, it is designed primarily to prevent rear-end collisions. In testing done as part of the crash investigation, the government found that the Tesla system was “state of the art” but that “braking for crossing path collisions, such as that present in the Florida fatal crash, are outside the expected performance capabilities of the system.”

There’s a telling footnote in the review: “NHTSA recognizes that other jurisdictions have raised concerns about Tesla’s use of the name ‘Autopilot.’ This issue is outside the scoop [sic] of this investigation.”

The Autopilot system, like cruise control before it, is a tool to help an attentive human driver manage the complicated machine they are piloting. Yet the name “Autopilot” itself evokes hands-free travel, with the machine making all the decisions while the human sits back like a passenger. In describing how Autopilot works, and how it performed in the fatal crash specifically, the review notes the system’s limitations as well as the safeguards Tesla built in to ensure the human is actually paying attention. One of those safeguards, in which the car asks the driver to put their hands back on the steering wheel and ultimately slows to a stop if they don’t, is a pretty good way to encourage responsible Autopilot use. Still, the system’s name may create unrealistic expectations, leading drivers to become lax.

If there is a defect, the report concludes, it’s with the human driver. “The Florida fatal crash appears to have involved a period of extended distraction (at least 7 seconds).”

“An attentive driver has superior situational awareness in most of these types of events,” the review continues, “particularly when coupled with the ability of an experienced driver to anticipate the actions of other drivers. Tesla has changed its driver monitoring strategy to promote driver attention to the driving environment.”

The best safety technology, for now, sits in the driver’s seat. Autopilot works best when drivers treat it as a tool designed to help them navigate the road more safely, rather than as a robotic chauffeur.
