Imagine you are driving down the street when two people — one child and one adult — step onto the road. Hitting one of them is unavoidable. You have a terrible choice. What do you do?

Now imagine that the car is driverless. What happens then? Should the car decide?

Until now, no one believed that autonomous cars (robotic vehicles that operate without human control) could make moral and ethical choices, an issue that has been central to the ongoing debate about their use. But German scientists now think otherwise. They believe it may eventually be possible to introduce elements of morality and ethics into self-driving cars.

To be sure, most human drivers will never face such an agonizing dilemma. Nevertheless, “with many millions of cars on the road, these situations do occur occasionally,” said Leon Sütfeld, a researcher in the Institute of Cognitive Science at the University of Osnabrück and lead author of a new study modeling ethics for self-driving cars. The paper, published in Frontiers in Behavioral Neuroscience, was co-authored by Gordon Pipa, Peter König, and Richard Gast, all of the institute.

The concept of driverless cars has grown in popularity as a way to combat climate change, since these autonomous vehicles drive more efficiently than most humans. They avoid rapid acceleration and braking, two habits that waste fuel. Also, a fleet of self-driving cars could travel close together on the highway to cut down on drag, thereby saving fuel. Driverless cars could also encourage car-sharing, reducing the number of cars on the road and possibly making private car ownership unnecessary.

Improved safety is also an energy saver. “[Driverless cars] are expected to cause fewer accidents, which means fewer cars need to be produced to replace the crashed ones,” Sütfeld said, pointing to another source of energy savings. “The technology could help [fight climate change] in many ways.”

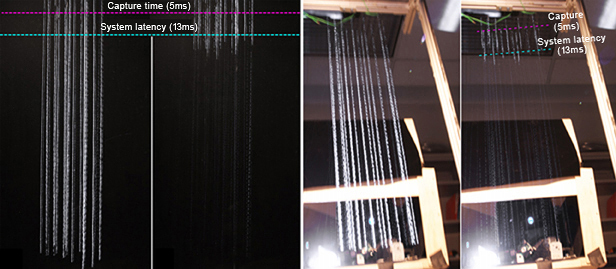

The study suggests that cars can be programmed to model human moral behaviors involving choice, deciding which of multiple possible collisions would be the best option. Scientists placed human subjects into immersive virtual reality settings to study behavior in simulated traffic scenarios. They then used the data to design algorithms for driverless cars that could enable them to cope with potentially tragic predicaments on the road just as humans would.

Participants “drove” a car through a typical suburban neighborhood on a foggy day when they suddenly faced an unavoidable collision with an animal, a human or an inanimate object, such as a trash can, and had to decide what or whom to spare. For example, adult or child? Human or animal? Dog or other animal? In the study, participants spared children more often than adults. The dog was the most valued animal, ahead of a goat, a deer and a boar.

“When it comes to humans versus animals, most people would certainly agree that the well-being of humans must be the first priority,” Sütfeld said. But “from the perspective of the self-driving car, everything is probabilistic. Most situations aren’t as clear cut as ‘should I kill the dog, or the human?’ It is more likely ‘should I kill the dog with near certainty, or alternatively spare the dog but take a 5 percent chance of a minor injury to a human?’ Adhering to strict rules, such as always deciding in favor of the human, might just not feel right for many.”
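Sütfeld's example can be made concrete with a little arithmetic. The sketch below is only an illustration, not the model used in the study: the severity weights, the 95 percent figure standing in for "near certainty," and every name in the code are assumptions chosen for the example. It compares a policy that minimizes probability-weighted harm with a strict rule that always minimizes risk to humans first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """One possible harm resulting from a maneuver (all values hypothetical)."""
    victim: str
    is_human: bool
    probability: float  # chance that the harm occurs, between 0 and 1
    severity: float     # illustrative harm weight, not taken from the study


def expected_harm(outcomes):
    """Sum of probability-weighted severities for one maneuver."""
    return sum(o.probability * o.severity for o in outcomes)


def human_risk(outcomes):
    """Largest probability of harming a human under one maneuver."""
    return max((o.probability for o in outcomes if o.is_human), default=0.0)


def choose_by_expected_harm(options):
    """Pick the maneuver with the lowest expected harm."""
    return min(options, key=lambda name: expected_harm(options[name]))


def choose_human_first(options):
    """Strict rule: minimize risk to humans, then break ties by expected harm."""
    return min(
        options,
        key=lambda name: (human_risk(options[name]), expected_harm(options[name])),
    )


# Assumed numbers: "near certainty" is taken as 0.95, and a minor human
# injury is weighted twice as heavily as the death of a dog.
options = {
    "stay_in_lane": [Outcome("dog", False, 0.95, 10.0)],
    "swerve": [Outcome("pedestrian", True, 0.05, 20.0)],
}

if __name__ == "__main__":
    for name, outcomes in options.items():
        print(name, round(expected_harm(outcomes), 2))
    print("expected-harm policy:", choose_by_expected_harm(options))
    print("human-first rule:", choose_human_first(options))
```

With these assumed weights the two policies disagree: the expected-harm policy swerves and spares the dog, while the strict rule stays in the lane. Different weights would give different answers, which is the point of the debate the researchers describe.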

Other variables also come into play. For example, was the person at fault? Did the adult look for cars before stepping into the street? Did the child chase a ball into the street without stopping to think? Also, how many people are in harm’s way?

The German Federal Ministry of Transport and Digital Infrastructure attempted to answer these questions in a recent report. It defined 20 ethical principles for self-driving cars, several of which stand at odds with the choices humans made in Sütfeld’s experiment. For example, the ministry’s report says that a child who runs onto the road is more to blame — and less worthy of saving — than an adult standing on the footpath as a non-involved party. Moreover, it declares it unacceptable to take a potential victim’s age into account.

“Most people — at least in Europe and very likely also Northern American cultures — would save a child over an adult or elderly person,” Sütfeld said. “We could debate whether or not we want cars to behave like humans, or whether we want them to comply to categorical rules such as the ones provided by the ethics committee report.”

Peter König, a study co-author, believes their research creates more quandaries than it solves, as sometimes happens in science. “Now that we know how to implement human ethical decisions into machines we, as a society, are still left with a double dilemma,” he said. “Firstly, we have to decide whether moral values should be included in guidelines for machine behavior and secondly, if they are, should machines act just like humans?”

The study doesn’t seek to answer these questions, only to demonstrate that it is possible to model ethical and moral decision-making in driverless cars, using clues as to how humans would act. The authors are trying to lay the groundwork for additional studies and further debate.

“It would be rather simple to implement, as technology certainly isn’t the limiting factor here,” Sütfeld said. “The question is how we as a society want the cars to handle this kind of situation, and how the laws should be written. What should be allowed and what shouldn’t? In order to come to an informed opinion, it’s certainly very useful to know how humans actually do behave when they’re facing such a decision.”

Marlene Cimons writes for Nexus Media, a syndicated newswire covering climate, energy, policy, art and culture.