The ocean is big, and our attempts to understand it are still largely surface-deep. According to the National Oceanic and Atmospheric Administration, around 80 percent of the big blue is “unmapped, unobserved, and unexplored.”

Ships are the primary way to collect information about the seas, but they’re costly to send out frequently. More recently, robotic buoys called Argo floats have been drifting with the currents, diving up and down to take a variety of measurements at depths up to 6,500 feet. But new aquatic robots from a lab at Caltech could rove deeper and take on more tailored underwater missions.

“We’re imagining an approach for global ocean exploration where you take swarms of smaller robots of various types and populate the ocean with them for tracking, for climate change, for understanding the physics of the ocean,” says John O. Dabiri, a professor of aeronautics and mechanical engineering at the California Institute of Technology.

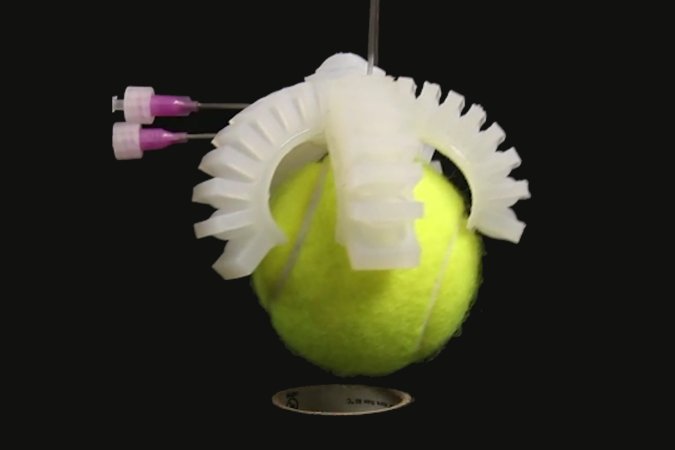

In comes CARL-Bot (Caltech Autonomous Reinforcement Learning Robot), a palm-sized aquatic robot that looks like a cross between a pill capsule and a dumbo octopus. It has motors for swimming around, is weighted to stay upright, and has sensors that can detect pressure, depth, acceleration, and orientation. Everything CARL does is run by an onboard microcontroller with 1 megabyte of memory, a chip smaller than a postage stamp.

CARL is the latest ocean-traversing innovation out of Dabiri’s lab, created and 3D-printed at home by Caltech graduate student Peter Gunnarson. The first tests Gunnarson ran with it were in his bathtub, since Caltech’s labs were closed at the start of 2021 because of COVID.

Right now, CARL can still be remotely controlled. But to really get to the deepest parts of the ocean, there can’t be any hand-holding involved. That means no researchers giving CARL directions—it needs to learn to navigate the mighty ocean on its own. Gunnarson and Dabiri sought out computer scientist Petros Koumoutsakos, who helped develop AI algorithms for CARL that could teach it to orient itself based on changes in its immediate environment and past experiences. Their research was published this week in Nature Communications.

CARL can decide to adjust its route on the fly to maneuver around rough currents and reach its destination. Or it can hold its position at a designated location using “minimal energy” from a lithium-ion battery.

CARL’s power lies in memories

The set of algorithms developed by Koumoutsakos can perform the wayfinding calculations onboard the small robot. The algorithms also draw on the robot’s memory of prior encounters, such as how it got past a whirlpool. “We can use that information to decide how to navigate those situations in the future,” explains Dabiri.

CARL’s programming enables it to remember similar paths it has taken in previous missions, and “over repeated experiences, get better and better at sampling the ocean with less time and less energy,” Gunnarson adds.

A lot of machine learning is done in simulation, where all the data points are clean. Transferring that learning to the real world can be messy. Sensors sometimes get overwhelmed and might not pick up all the necessary measurements. “We’re just starting the trials in the physical tank,” says Gunnarson. The first step is to test whether CARL can complete simple tasks, like repeated diving. A short video on Caltech’s blog shows the robot clumsily bobbing along and plunging into a still-water tank.

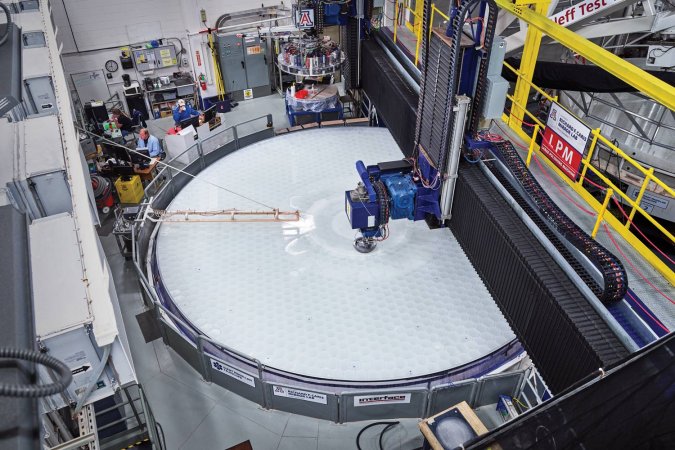

As testing moves along, the team plans to put CARL in a pool-like tank with small jets that can generate horizontal currents for it to navigate through. When the robot graduates from that, it will move to a two-story-tall facility that can mimic upwelling and downwelling currents. There, it will have to figure out how to maintain a certain depth in a region where the surrounding water is flowing in all directions.

“Ultimately, though, we want CARL in the real world. He’ll leave the nest and go into the ocean and with repeated trials there, the goal would be for him to learn how to navigate on his own,” says Dabiri.

During the testing, the team will also adjust the sensors in and on CARL. “One of the questions we had is what is the minimal set of sensors that you can put onboard to accomplish the task,” Dabiri says. When a robot is decked out with tools like LiDAR or cameras, “that limits the ability of the system to go for very long in the ocean before you have to change the battery.”

By lightening the sensor load, researchers could lengthen CARL’s life and open up space to add scientific instruments to measure pH, salinity, temperature, and more.

CARL’s software could inspire the next bionic jellyfish

Early last year, Dabiri’s group published a paper on how they used electric zaps to control a jellyfish’s movements. Adding a chip that runs machine learning algorithms similar to CARL’s might let researchers steer the jellies through the ocean more precisely.

“Figuring out how this navigation algorithm works on a real live jellyfish could take a lot of time and effort,” says Dabiri. In this regard, CARL serves as a test platform for algorithms that could eventually go into the mechanically modified creatures. Unlike robots and rovers, these jellies wouldn’t face depth limitations: biologists know that jellyfish can live in the Mariana Trench, some 30,000 feet below the surface.

On its own, CARL can still be a useful asset for ocean monitoring. It can work alongside existing instruments like Argo floats. Because it can get close to seabeds and other fragile structures, it can also go on solo missions for more fine-tuned exploration. It could even track and tag along with organisms such as a school of fish.

“You might one day in the future imagine 10,000 or a million CARLs (we’ll give them different names, I guess) all going out into the ocean to measure regions that we simply can’t access today simultaneously so that we get a time-resolved picture of how the ocean is changing,” Dabiri says. “That’s going to be really essential to model predictions of climate, but also to understand how the ocean works.”