Sonia Chernova wants you to train her robot. Two years ago, Chernova and some of her fellow roboticists at Worcester Polytechnic Institute (WPI) in Massachusetts launched a remote robotics lab called RobotsFor.Me, a site where users can log in and teach robots how to function in physical space. It’s both more and less exciting than it sounds. Participants might play a game where they rack up points based on the number of objects they can help the robot pick up in 10 minutes. But these tutors aren’t exactly diving into an immersive, robot’s-eye-view interface. “We abstract everything,” says Chernova, who directs WPI’s Robot Autonomy and Interactive Learning lab. “They’ve never seen this robot. They’ve never been trained to use it properly. They don’t realize the robot costs hundreds of thousands of dollars.”

Chances are, they also don’t realize why this project is so desperately needed. Some context is in order.

A few weeks from now, some of the most advanced robots ever built will gather in California and try, with all their mechanical might, to accomplish tasks that most 12-year-old humans could pull off with panache. Simple doorknobs will test their mettle. So will cinder blocks, and stairs. It’s all but guaranteed that some of the 25 machines scheduled to compete in the DARPA Robotics Challenge (DRC) on June 5 and 6 will skip some of those tasks, such as driving a utility vehicle. Why? Because it’s really hard for many of them to fit into said vehicle, much less operate the pedals and the steering wheel at the same time. Spectators at the Fairplex in Pomona shouldn’t expect to see androids vaulting through the course (which is intended to loosely simulate a disaster zone) with inhuman precision and agility. With luck, the DRC’s robots will finish with merely subhuman competence. Without it, they’ll be carted off the course in pieces after taking a spill while attempting something laughably routine, like picking up a dropped screwdriver. And during most of the competition, the robots will be in direct communication with their teams. So even with people effectively hovering over their shoulders and telling them what to do at every moment, it would be miraculous for any of the entries to make it through the course as quickly as a human might.

I don’t mean to diminish the DRC’s significance, or to imply that it won’t be thrilling. It’s the most exciting robotics competition since DARPA’s Urban Challenge, the 2007 contest that kicked off the race to develop driverless cars. But the DRC is important within a very specific context, one that has little to do with the common perception of robots.

In orderly environments, such as factories and warehouses, machines can function with an impressive degree of autonomy, dutifully assembling or moving products, and fulfilling every corporation’s fantasies of unpaid and uncomplaining non-union labor. But drop a robot into an unstructured environment (robotics-speak for homes, offices, or any other disordered place) and prepare to be deeply unimpressed. By the time a robotic vacuum unleashes its innovative sensor suite and complex mapping and navigation algorithms to clean a single room, a person would have finished the entire house. And as useful as it is to offload grunt work to the mechanical couriers that ferry materials around hospitals, consider the occasional tragicomic image of one of these machines stuck behind a trash can, waiting for a human Samaritan to free the robot from its prison of incompetence.

Appreciating robots means grading them on a curve. The next time a journalist tries to startle you with a sober analysis of science fiction’s recurring obsession with robotic uprisings, keep in mind that however smart artificially intelligent generals like Skynet or Ultron might be, their vast armies would be laughingstocks. Driverless cars are proficient at staying on the road in optimal conditions, and humanoid robots can usually (though not always) maintain a walking speed on the most level terrain. But take a robot car off-road, away from its highway dividers and preloaded laser maps and object recognition datasets, and it’s as likely to drive into a lake as ferret out human resistance fighters. And humanoid bots could be outrun at a casual jog, or turned into a smoking ruin by a small branch or gentle incline. Even systems like Boston Dynamics’ four-legged BigDog, which is incredibly stable in rough terrain, lack the ability to make sense of the environments they’re bounding through, or to take any action that doesn’t involve mobility. While armed aerial bots have been an established part of modern warfare for years, ground bots are, to put it very broadly, too dumb to pose a threat to anyone.

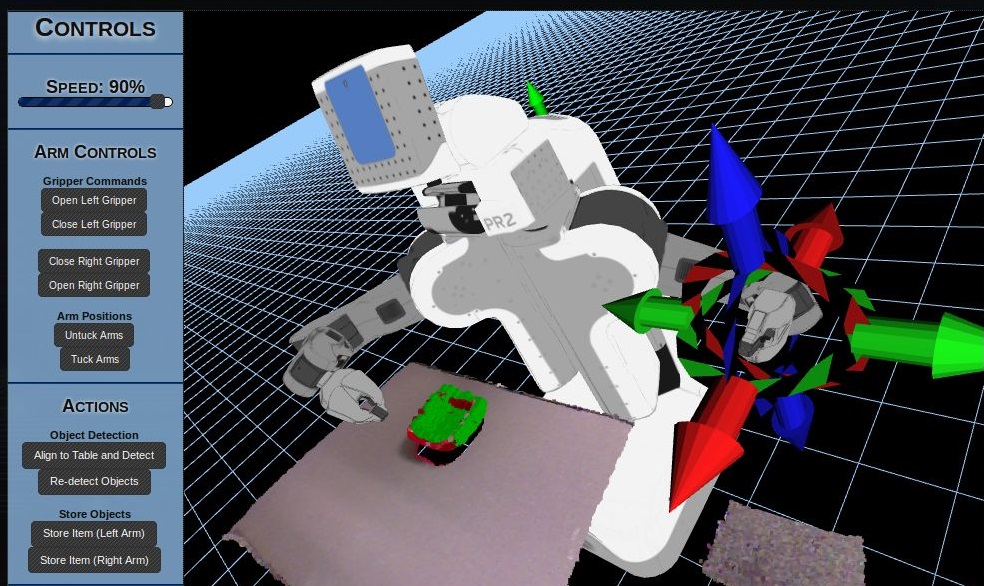

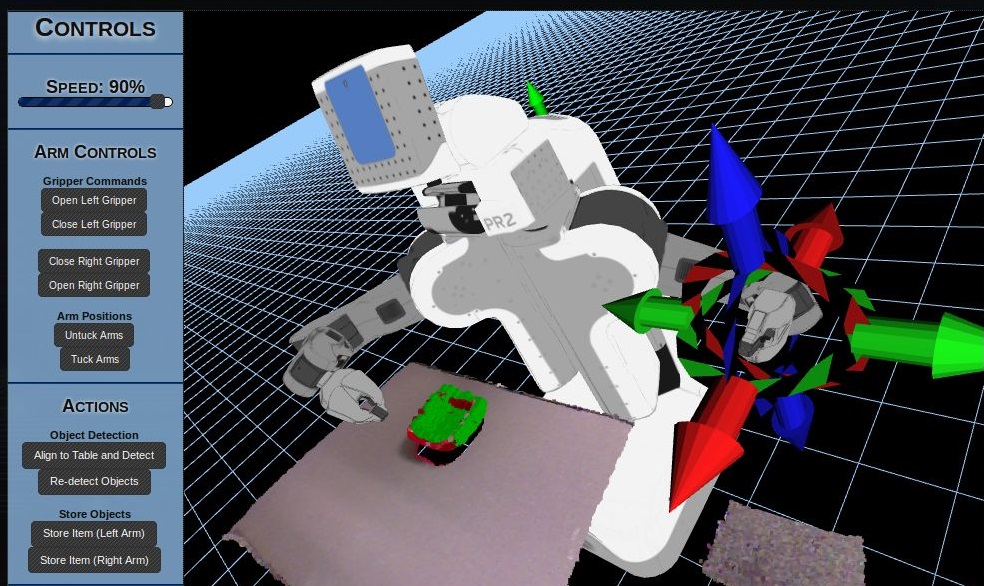

Chernova, to be clear, isn’t working on anything remotely resembling or related to military robots. That tangent is all mine. But the mission of RobotsFor.Me stands in stark contrast to the myth of robotic hypercompetence. Only in the movies are robots unerringly adept in unfamiliar environments. In reality, systems like CARL (Crowdsourced Autonomy and Robot Learning), the wheeled, single-armed bot that WPI uses most often in its crowdsourced experiments, need all the training they can get. RobotsFor.Me has been open to the public since 2013, but the need for this kind of teaching couldn’t be more obvious now that we’ve seen how hard some of the DRC’s bots have struggled to stay vertical, much less prove that they can stand in for human responders in disaster zones.

One established method of teaching machines over the internet is to use Amazon’s Mechanical Turk, a service that turns humans into a kind of biological database. Roboticists can present a task, such as recognizing different objects in a given environment, and a distant network of people might label those objects, for a small fee. RobotsFor.Me’s distributed training approach is similar (though it pays its users less often), but it also lets Chernova study the training itself, in the hopes of making robots that are more autonomous, or at least better at taking orders. “My long-term goal is to sell you a robot that you can bring home, and teach it to work for you, in the way that you want it to,” says Chernova. Mechanical Turk and RobotsFor.Me are effective in part because they provide a wealth of data. Chernova is exploring whether such crowdsourced input can give robots a baseline knowledge of the world, which might be supplemented by fast, intuitive training to address differences in layout or occupants, or preferences in how you’d like tasks completed. RobotsFor.Me might eventually help a robot differentiate between a spoon and a fork. But the user should be able to tell the robot which size of fork should go on the table, without learning how to write code.

Just as RobotsFor.Me isn’t a work in progress toward armed ground bots, Chernova’s research isn’t focused specifically on building more efficient domestic servants. Her $433,351 grant from the National Science Foundation is directed toward learning from the data collected in RobotsFor.Me studies, and investigating how that data could improve both autonomy and training, whether the teaching comes from one user or many at once. It’s a small project (compared, for example, with the millions in funding already provided to some of the DRC teams) with potentially major implications. And before it injects much-needed smarts into home robots, it might lead to a more humble half-measure. Remember those hospital bots, lodged pathetically behind trash cans? “We can use this as a preliminary step to make robots smarter in the long term, but also to develop ways to let humans log on, with no real training, and get the robot out of a tight spot,” says Chernova. “And each time somebody from that call center answers a robot and helps it, the robot can become more autonomous.” Though it’s not part of her funded research, Chernova sees promise in a call-center model to bridge the gap between constantly remote-controlling a robot and trusting it to act with complete autonomy. “We can develop robots that are 90 percent autonomous pretty reliably today,” says Chernova. “It’s that 10 percent that’s the problem.”