In a paper published in Nature this week, Google’s Quantum AI researchers have demonstrated a way to reduce errors in quantum computers by increasing the number of “qubits” in operation. According to Google CEO Sundar Pichai, it’s a “big step forward” towards “making quantum applications meaningful to human progress.”

Traditional computers use binary bits, each of which can be either a zero or a one, to make calculations. Whether you’re playing a video game, editing a text document, or creating some AI-generated art, all the underlying computational tasks are represented by strings of binary. But there are some kinds of complex calculations, like modeling atomic interactions, that are impossible to do at scale on traditional computers. Researchers have to rely on approximations, which reduce the accuracy of the simulation and render the whole process somewhat pointless.

This is where quantum computers come in. Instead of regular bits, they use qubits that can be a zero, a one, or both at the same time. They can even be entangled, rotated, and manipulated in other quantum-specific ways. Not only could a workable quantum computer allow researchers to better understand molecular interactions, but it could also allow us to model complex natural phenomena, more easily detect credit card fraud, and discover new materials. (Of course, there are also some potential downsides: quantum computers could break the classical algorithms that secure everything today, from passwords and banking transactions to corporate and government secrets.)

For now, though, all this is largely theoretical. Quantum computers are currently much too small and error-prone to change the world. Google’s latest research goes some way towards fixing the latter half of the problem. (IBM is trying to fix the first half.)

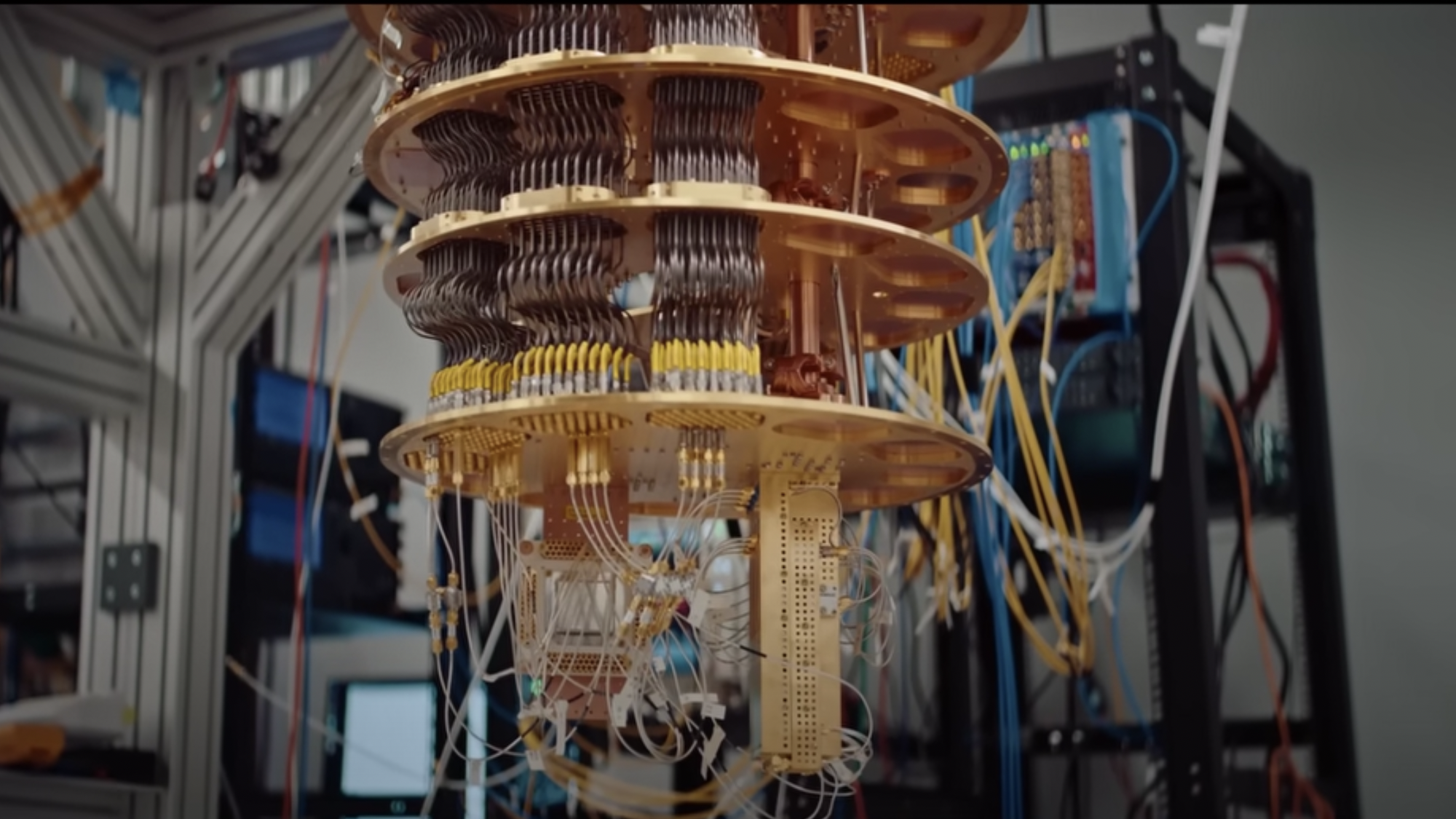

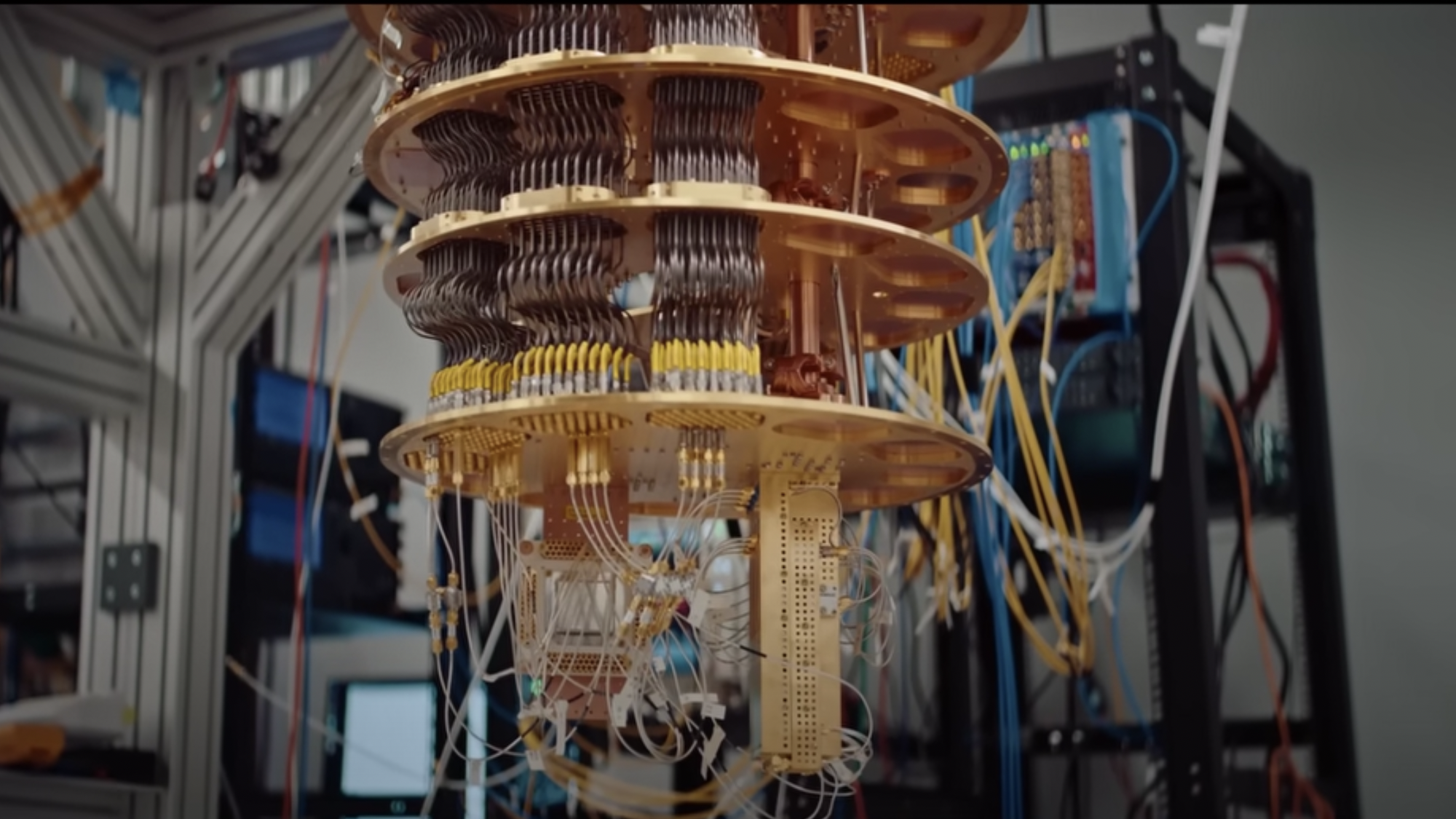

The problem is that quantum computers are incredibly sensitive to, well, everything. They have to operate in sealed, cryogenically cooled cases. Even a stray photon can cause a qubit to “decohere” or lose its quantum state, which creates all kinds of wild errors that interfere with the calculation of the problem. Until now, adding more qubits has also meant increasing your chances of getting a random error.

According to Google, its third-generation Sycamore quantum processor with 53 qubits typically experiences error rates of between 1 in 10,000 and 1 in 100. That is orders of magnitude too high to solve real-world problems; Google’s researchers reckon we will need qubits with error rates of between 1 in 1,000,000,000 and 1 in 1,000,000 for that.
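To put "orders of magnitude" into numbers, here is a rough sketch in Python that compares the error rates quoted above. The constant and function names are made up for illustration, and the figures are simply the "1 in N" values from this article.

```python
import math

# Error rates from the article, written as the N in "1 in N".
CURRENT_BEST = 10_000            # Sycamore, best case
CURRENT_WORST = 100              # Sycamore, worst case
TARGET_LOOSEST = 1_000_000       # least demanding target
TARGET_STRICTEST = 1_000_000_000  # most demanding target


def improvement_factor(current_n, target_n):
    """How many times rarer errors must become to reach the target."""
    return target_n // current_n


def orders_of_magnitude(factor):
    """Number of powers of ten in the improvement factor."""
    return round(math.log10(factor))


smallest_gap = improvement_factor(CURRENT_BEST, TARGET_LOOSEST)
largest_gap = improvement_factor(CURRENT_WORST, TARGET_STRICTEST)

print(f"Smallest gap: {smallest_gap:,}x ({orders_of_magnitude(smallest_gap)} orders of magnitude)")
print(f"Largest gap: {largest_gap:,}x ({orders_of_magnitude(largest_gap)} orders of magnitude)")
```

Even in the most favorable comparison, errors would need to become 100 times rarer. In the least favorable one, the gap is a factor of ten million.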

Unfortunately, it’s highly unlikely that anyone will be able to get that increase in performance from the current designs for physical qubits. But by combining multiple physical qubits into a single logical qubit, Google has been able to demonstrate a potential path forward.

The research team gives a simple example of why this kind of setup can reduce errors: “Bob wants to send Alice a single bit that reads ‘1’ across a noisy communication channel. Recognizing that the message is lost if the bit flips to ‘0’, Bob instead sends three bits: ‘111’. If one erroneously flips, Alice could take a majority vote (a simple error-correcting code) of all the received bits and still understand the intended message.”
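The Bob-and-Alice example is a classical repetition code, and it is simple enough to sketch in a few lines of Python. This is only an illustration of the majority-vote idea, not Google's quantum scheme; the function names and the independent-flip noise model are assumptions made for the example.

```python
import random


def encode(bit, copies=3):
    """Bob repeats the bit; use an odd number of copies to avoid ties."""
    return [bit] * copies


def noisy_channel(bits, flip_probability, rng):
    """Flip each bit independently with the given probability (assumed noise model)."""
    return [b ^ 1 if rng.random() < flip_probability else b for b in bits]


def decode(bits):
    """Alice takes a majority vote over the received bits."""
    return int(sum(bits) > len(bits) / 2)


def logical_error_rate(p):
    """Chance that a three-bit majority vote fails: two or three bits flipped."""
    return 3 * p**2 * (1 - p) + p**3


rng = random.Random(0)  # seeded only so the demo is repeatable
received = noisy_channel(encode(1), flip_probability=0.1, rng=rng)
print("Received:", received, "-> decoded:", decode(received))
print("Single-bit error rate 0.1 -> three-bit vote error rate:", logical_error_rate(0.1))
```

With a 10 percent chance of each bit flipping, the majority vote fails only when at least two of the three bits flip, which works out to roughly 2.8 percent. The redundancy only helps because the individual bits are reasonably reliable to begin with.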

Since qubits have additional states that they can flip to, things are a bit more complicated. It also really doesn’t help that, as we’re dealing with quantum, directly measuring their values can cause them to lose their “superposition,” a quantum quirk that allows them to have the value of ‘0’ and ‘1’ simultaneously. To overcome these issues, you need Quantum Error Correction (QEC), where information is encoded across multiple physical qubits to create a single logical qubit.

The researchers arranged two types of qubits (one for dealing with data, and one for measuring errors) in a checkerboard. According to Google, “‘Data’ qubits on the vertices make up the logical qubit, while ‘measure’ qubits at the center of each square are used for so-called ‘stabilizer measurements’.” The measure qubits are able to tell when an error has occurred without “revealing the value of the individual data qubits” and thus destroying the superposition state.

To create a single logical qubit, the Google researchers used 49 physical qubits: 25 data qubits and 24 measure qubits. Crucially, they tested this setup against a logical qubit composed of 17 physical qubits (9 data qubits and 8 measure qubits) and found the larger grid outperformed the smaller one by being around 4 percent more accurate. While only a small improvement, it’s the first time in the field that adding more qubits reduced the number of errors instead of increasing it. (Theoretically, a grid of 577 qubits would have an error rate close to the target 1 in 10,000,000.)
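The qubit counts above follow a pattern, which a short Python sketch makes visible. It assumes the data qubits form a square grid with `side` qubits along each edge, and that there is always one fewer measure qubit than data qubits. That pattern is inferred from the numbers quoted here, and the function and parameter names are hypothetical.

```python
def grid_qubit_counts(side):
    """Return (data, measure, total) qubits for a side-by-side data grid.

    Assumes side**2 data qubits and one fewer measure qubit,
    a pattern inferred from the counts quoted in the article.
    """
    data = side**2
    measure = data - 1
    return data, measure, data + measure


for side in (3, 5, 17):
    data, measure, total = grid_qubit_counts(side)
    print(f"{side}x{side} grid: {data} data + {measure} measure = {total} physical qubits")
```

Under that assumption, a 3-by-3 grid gives the 17-qubit version, a 5-by-5 grid gives the 49-qubit version, and a 17-by-17 grid gives the 577 qubits mentioned for the theoretical target.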

And despite its recent layoffs, Google is seemingly committed to more quantum research. In his blog post, Pichai says that Google will “continue to work towards a day when quantum computers can work in tandem with classical computers to expand the boundaries of human knowledge and help us find solutions to some of the world’s most complex problems.”