Army Developing Drones That Can Recognize Your Face From a Distance

And even recognize your intentions

It’s not enough for the U.S. military to be able to monitor you from afar. The U.S. Army wants its drones to know you through and through, reports Danger Room, and it is working to give them the ability to recognize you in a crowd and even to gauge what you are thinking and feeling. Like a best friend who at any moment might vaporize you with a Hellfire missile.

Of the handful of contracts the Army just handed out, two stand out for the intelligence, surveillance, and reconnaissance (ISR) capabilities they promise. One would arm drones with facial recognition software that remembers faces, so targets can’t disappear into crowds. The other sounds far more unsettling: a human behavior engine that weighs informant tips against intelligence data and other evidence to predict a person’s intent. That’s right: the job of deciding whether you are friend or foe could be turned over to the machines.

That’s a bit disquieting whether you are an insurgent or not. But back to the larger point: the U.S. military is pulling in more ISR data than it knows what to do with these days, much of it noise with no bearing on ongoing operations. And, as Danger Room notes, the strategy in Afghanistan has shifted from winning hearts and minds through nation-building projects to targeting specific bad guys.

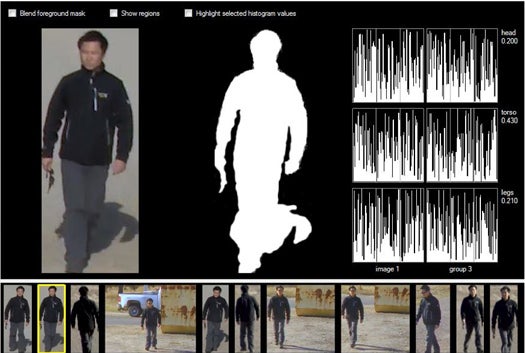

The hard part is keeping up with the bad guys, and that’s where Progeny Systems Corporation’s “Long Range, Non-cooperative, Biometric Tagging, Tracking and Location” system comes into play. The facial recognition layer of its technology is pretty standard: take some 2-D pictures of a target’s face, use them to build a 3-D model, and then use that 3-D model to recognize the face later.

But that’s not necessarily easy. It’s difficult enough for computers to pull off biometric facial recognition when the subject is stationary and looking straight at the camera. Add the many variables inherent in aerial ISR (a moving target who may be in profile or looking downward, a moving drone, low-resolution cameras, and so on), and it becomes a major challenge.

Progeny’s system, if it works the way the company and the Army envision it, needs just 50 pixels between the target’s eyes in a 2-D image to build the 3-D model. “Any pose, any expression, any face,” the company’s lead biometric researcher tells Danger Room. Using that model, stored in Progeny’s database, the system could then identify the target from an even lower-resolution image or video.

The closer the drone is to the subject, the better all of this works. But Progeny also layers in a second kind of recognition that can work at distances of more than 750 feet. This “soft biometric” system takes in outwardly visible, non-facial traits (skin color, height and build, age, gender) to build a broader model for its vision algorithms to work with. If a person is moving through a crowd, Progeny claims that a drone circling high overhead can keep track of him or her using this whole-body identification system alone.

But what good is tracking if you don’t know who your enemies are? Another contract, awarded to Charles River Analytics, seeks to develop a human behavior engine known as Adversary Behavior Acquisition, Collection, Understanding, and Summarization (ABACUS). It mashes up all kinds of behavioral data into a system that churns out an assessment of adversarial intent, determining whether a subject has enough built-up resentment toward the U.S. and its aims to be a potential threat.

So pretty soon the drones may know who you are, where you’re going, and what you’re planning to do when you get there.