Whether lethal robots already exist is a matter of definition as much as anything else. Land mines, immobile explosives that go off when a human steps on them, are autonomous and lethal, though no one really thinks of them as killer robots. A new study by the Arizona State University Global Security Initiative, supported by funding from Elon Musk’s Future of Life Institute, looks at autonomous systems that already exist in weapons, creating a baseline for how we understand deadly decisions by machines in the future. And it poses a very interesting question: what if autonomous machines aren’t informing how humans make decisions, but replacing them?

Consider the simple homing missile. First developed in the 1960s, homing missiles aren’t typically what people think of as autonomous, lethal machines. A human points the missile at the target, and a human decides to launch it. Well, mostly. Roff, the paper’s author, defines homing as “the capability of a weapons system to direct itself to follow an identified target.”

Of all the targeting technologies, homing emerged earliest. The danger homing poses to humans is that, because it’s so well known and widespread, it can be fooled. Roff writes:

Trusting the machine to find the right target can have horrific human consequences. In the summer of 2014, Russian-backed separatists fighting in eastern Ukraine shot down several Ukrainian military transports. It’s possible that the human responsible for shooting down flight MH-17 over Ukraine misread the missile launcher’s radar, imagining another troop transport instead of a commercial airliner full of innocent non-combatants. The homing targeting system used by the Buk missile launcher is good at finding airplanes in the sky; it is less good at distinguishing between large transports and airliners.

Future autonomous targeting technology will likely be better not just at finding its way to a selected target, but at independently identifying the target before going in for the kill. Writes Roff:

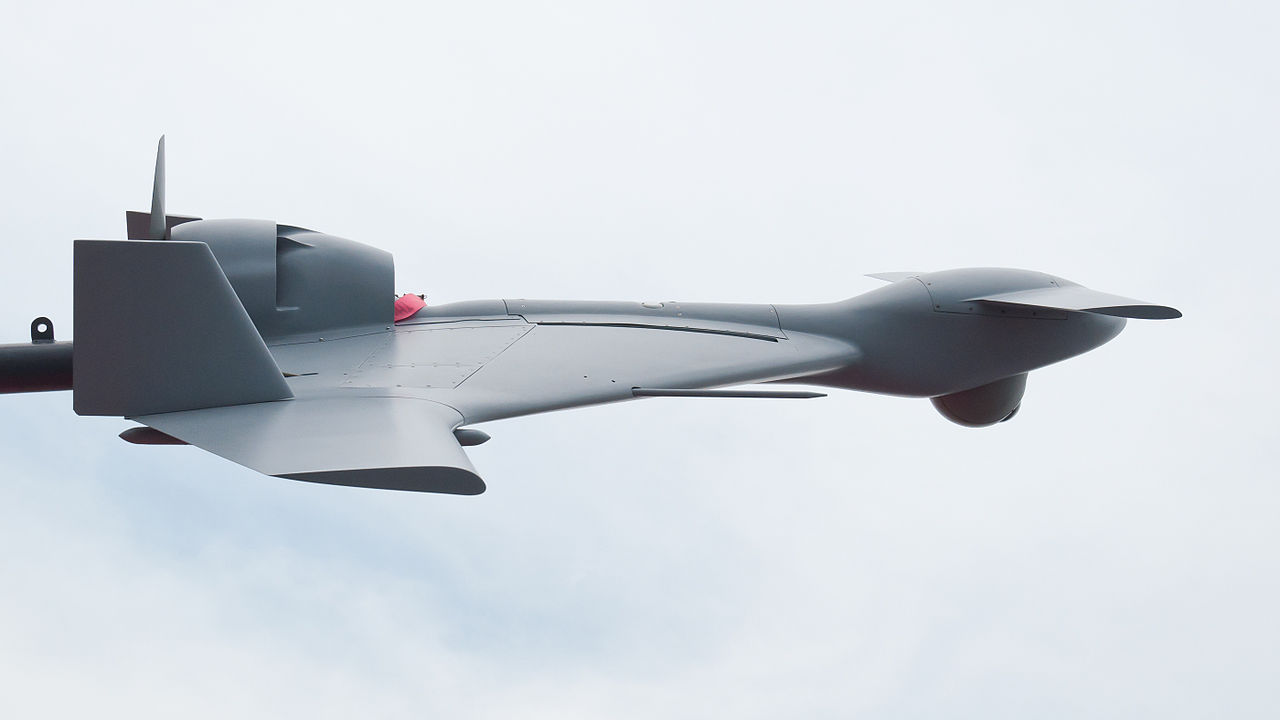

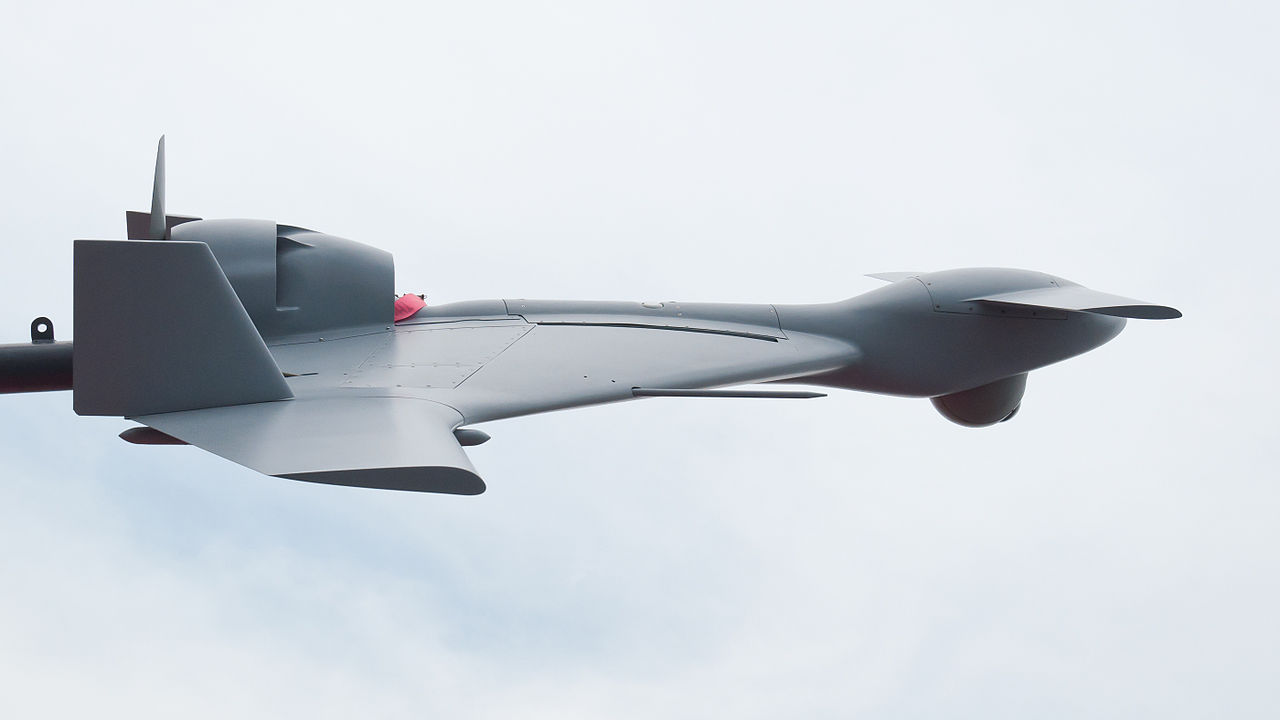

This is the vision of the future where robots fly over battlefields, scanning objects below, checking them against an internal list of approved targets, and then making a decision to attack. These weapons will most likely be designed to wait for human approval before they strike (“human in the loop,” as the Pentagon likes to say). But things happen incredibly fast on the battlefield, and it’s just as likely that military leaders will find that a weapon that must wait for approval to attack takes too long to be of use, and will instead encourage full autonomy for the machine.

As the Pentagon and the international community wrestle with the problem of lethal autonomous machines, Roff’s study provides a wealth of sobering data.