This article was originally featured on Undark.

WITHIN DAYS of Russia’s recent invasion of Ukraine, several social media companies took steps to reduce the circulation of Russian state-backed media and anti-Ukrainian propaganda. Meta (formerly Facebook), for example, said it took down about 40 accounts, part of a larger network that had already spread across Facebook, Instagram, Twitter, YouTube, Telegram, and Russian social media. The accounts used fake personas, replete with profile pictures likely generated with artificial intelligence, posing as news editors, engineers, and scientists in Kyiv. The people behind the network also created phony news websites that portrayed Ukraine as a failed state betrayed by the West.

Disinformation campaigns have become pervasive in the vast realm of social media. Will technology companies’ recent efforts to combat propaganda be effective? Because outsiders are not privy to most of the inner workings of the handful of companies that run the digital world—the details of where information originates, how it spreads, and how it affects the real world—it’s hard to know.

Joshua Tucker directs New York University’s Jordan Center for the Advanced Study of Russia and co-directs the school’s Center for Social Media and Politics. When we spoke in mid-March, he had just come from a meeting with colleagues strategizing how to trace the spread of Russian state narratives in Western media. But that investigation—most of his research, in fact—is hampered because, in the name of protecting user privacy and intellectual property, social media companies do not share all the details of the algorithms they use to manipulate what you see when you enter their world, nor most of the data they collect while you’re there.

The stakes for understanding how that manipulated world affects individuals and society have never been higher. In recent years, journalists, researchers, and even company insiders have accused platforms of allowing hate speech and extremism to flourish, particularly on the far right. Last October, Frances Haugen, a former product manager at Facebook, testified before a U.S. Senate Committee that the company prioritizes profits over safety. “The result has been a system that amplifies division, extremism, and polarization—and undermining societies around the world,” she said in her opening remarks. “In some cases, this dangerous online talk has led to actual violence that harms and even kills people.”

In the United States, answers about whether Facebook and Instagram impacted the 2020 election and Jan. 6 insurrection may come from a project Tucker co-directs involving a collaboration between Meta and 16 additional outside researchers. It’s research that, for now, couldn’t be done any other way, said project member Deen Freelon, an associate professor at the Hussman School of Journalism and Media at the University of North Carolina. “But it absolutely is not independent research because the Facebook researchers are holding our hands metaphorically in terms of what we can and can’t do.”

Tucker and Freelon are among scores of researchers and journalists calling for greater access to social media data, even if that requires new laws that would incentivize or force companies to share information. Questions about whether, say, Instagram worsens body image issues for teenage girls or YouTube sucks people into conspiracies may only be satisfactorily answered by outsiders. “Facilitating more independent research will allow the inquiry to go to the places it needs to go, even if that ends up making the company look bad in some instances,” said Freelon, who is also a principal researcher at the University of North Carolina’s Center for Information, Technology, and Public Life.

For now, a handful of giant for-profit companies control how much the public knows about what goes on in the digital world, said Tucker. While the companies can initiate cool research collaborations, he said, they can also shut those collaborations down at any time. “Always, always, always you are at the whim of the platforms,” he said. When it comes to data access, he added, “this is not where we want to be as a society.”

TUCKER RECALLED THE early days of social media research about a decade ago as brimming with promise. The new type of communication generated a treasure trove of information to mine for answers about human thoughts and behavior. But that initial excitement has faded a bit as Twitter turned out to be the only company consistently open to data sharing. As a result, studies about the platform dominate research even though Twitter has far fewer users than most other networks. And even this research has limitations, said Tucker. He can’t find out the number of people who see a tweet, for example, information he needs to more accurately gauge impact.

He rattled off a list of the other information he can’t get to. “We don’t know what YouTube is recommending to people,” he said. TikTok, owned by the Chinese technology company ByteDance, is notoriously closed to research, although it shares more of users’ information with outside companies than any other major platform, according to a recent analysis by the mobile marketing company URL Genius. The world’s most popular social network, Facebook, makes very little data public, said Tucker. The company’s free tool CrowdTangle allows you to track public posts, for example. But you still can’t find out the number of people who see a post or read comments, nor glean precise demographic information.

In a phone call and email with Undark, Meta spokesperson Mavis Jones contested that characterization of the company, stating that Meta actually supplies more research data than most of its competitors. As evidence of the commitment to transparency, she pointed out that Meta recently consolidated data-sharing efforts into one group focused on the independent study of social issues.

To access social media data, researchers and journalists have gotten creative. Emily Chen, a computer-science graduate student at the University of Southern California, said that researchers may resort to using a computer program to harvest large amounts of publicly available information from a website or app, a process called scraping. Scraping data without permission typically violates companies’ terms of service, and the legality of this approach is still tied up in the courts. “As researchers, we’re forced to kind of reckon with the question of whether or not our research questions are important enough for us to cross into this gray area,” said Chen.

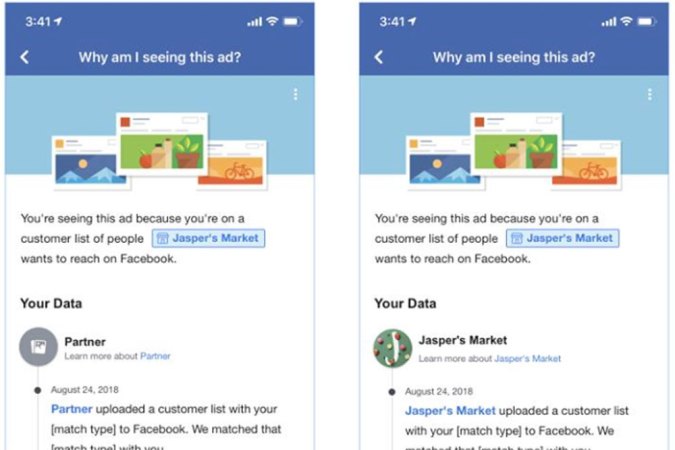

Researchers often get away with scraping, but platforms can potentially shut them out at any time. Meta told me that scraping without permission is strictly against corporate policy. Indeed, last summer Meta went so far as to disable the accounts of researchers in New York University’s Ad Observatory project, which had been collecting Facebook data to study political ads.

Another approach is to look over the shoulder of social media users. In 2020, The Markup, a nonprofit newsroom covering technology, announced the launch of its Citizen Browser Project. The news outlet paid a nationally representative sample of 1,200 adults to install a custom-made browser on their desktop computers. The browser periodically gathers information from people’s Facebook feeds—with personally identifiable data being removed.

“We are like photojournalists on the street of algorithm city,” joked Surya Mattu, the investigative data journalist who developed the browser. “We’re just trying to capture what’s actually happening and trying to find a way to talk about it.” The browser’s snapshots reveal a digital world where news and recommendations look entirely different depending on your political affiliation and where—contrary to Facebook’s assertions—many people see extremist and sensationalist content more often than they would when viewing mainstream sources. (To see what’s currently trending on Facebook according to The Markup’s data, go to @citizenbrowser on Twitter.)

WHAT COULD JOURNALISTS and social scientists shed light on if they had a better view of the digital world? In a commentary published in December 2020 in the journal Harvard Kennedy School Misinformation Review, 43 researchers submitted 15 hypothetical projects for tackling disinformation that they could pursue “if social media data were more readily available.” Freelon’s group detailed how they could determine the origin of misleading stories (currently nearly impossible), identify which platforms play the biggest role in spreading misinformation, and determine which correction strategies work with which audiences.

An engineer by training, The Markup’s Mattu would like to see social media data work more like open-source software, which allows users to view the code, add features, and fix problems. For something operating at the scale of Facebook, there’s no way one group of people can figure out how it will work across all ecosystems, contexts, and cultures, he said. Social media companies need to be transparent about issues such as algorithms that may wind up prioritizing extreme content or advertisers that find ways to target people by race. “We should accept that these kinds of problems are a feature, not a bug of social networks that exist at the scale of 2 billion people,” he said. “And we should be able to talk about them in a more honest way.”

Chen also sees collaboration as the way forward. She envisions a world where researchers can simply ask for data rather than resorting to gray areas such as scraping. “One of the best ways to tackle the current information warfare that we’re seeing is for institutions, whether corporate or academic, to really work together,” she said.

One of the main barriers to greater access is protecting users’ privacy. Social media users are reasonably concerned that outsiders might get their hands on sensitive information and use it for theft or fraud. And, for many reasons, people expect information shared in private accounts to stay private. Freelon is working on a study looking at racism, incivility, voter suppression, and other “anti-normative” content that could damage someone’s reputation if made public. To protect users’ privacy, he doesn’t have access to the algorithms that could identify those people. “Now, personally, do I care if racist people get hurt?” he said. “No. But I can certainly see how Facebook might be concerned about something like that.”

Current laws are set up to preserve the privacy of people’s data, not with the idea of facilitating research that will inform society about the impact of social media. The U.S. Congress is currently considering bipartisan legislation such as the Kids Online Safety Act, the Social Media DATA Act, and the Platform Accountability and Transparency Act, which would compel social media companies to provide more data for research while still maintaining provisions to protect user privacy. PATA, for example, would mandate the creation of privacy and cybersecurity standards and protect participating academics, journalists, and companies from legal action due to privacy breaches.

In the book “Social Media and Democracy,” co-editors Tucker and Stanford law professor Nathaniel Persily write: “The need for real-time production of rigorous, policy-relevant scientific research on the effects of new technology on political communication has never been more urgent.”

It’s been an eventful couple of years since the book was published in 2020. Is the need for solid research even more pressing? I asked Tucker.

Yes, he answered, but that urgency stems not just from the need to understand how social media affects us, but also to ensure that protective actions we take do more good than harm. In the absence of comprehensive data, all of us—citizens, journalists, pundits, and policy makers—are crafting narratives about the impact of social media that may be based on incomplete, sometimes erroneous information, said Tucker.

While social media has given voice to hate speech, extremism, and fake news around the world, Tucker’s research reveals that our assumptions about how and where that happens aren’t always correct. For example, his team is currently observing people’s YouTube searches and finding that, contrary to popular belief, the platform doesn’t invariably lead users down rabbit holes of extremism. His research has also shown that echo chambers and the sharing of fake news are not as pervasive as commonly thought. And in a study published in January 2021, Tucker and colleagues found spikes of hate speech and white nationalist rhetoric on Twitter during Donald Trump’s 2016 presidential campaign and its immediate aftermath, but no persistent increase of hateful language or that particular stripe of extremism.

“If we’re going to make policy based on these kinds of received wisdom, we really, really have to know whether or not these received wisdoms are correct or not,” said Tucker.

More and better research depends on social media companies creating a window for outsiders to peer inside. “We figure out ways to make do with what we can get access to, and that’s the creativity of being a scholar in this field,” said Tucker. “Society would be better served if we were able to work on what we thought were the most interesting research questions.”