At a party a few weeks ago, I had the unenviable task of trying to pictorially represent “Sesame Street.” The most ridiculous-looking blob, which was supposed to be Big Bird, flowed from my dry erase marker. But as soon as I put a curly-haired face-thing on top of what appeared to be a garbage can, someone shouted the answer. We humans are pretty good at guessing each other’s badly rendered line drawings.

Computers are not so skilled, however. Now a new algorithm developed at Brown University and the Technical University of Berlin aims to improve matters. It’s the first computer application designed for “semantic understanding” of abstract drawings, and the research team says it could improve search applications and sketch-based interfaces. Someday, building on this research and other work on computer recognition of line drawings, you might be able to finger-draw something on your iPhone and turn up a real answer.

The program can identify simple abstract sketches 56 percent of the time, compared to humans’ 73 percent average. It can recognize even sketches sorely lacking in verisimilitude, which is the key breakthrough here. Computers can already match accurate sketches, such as a police sketch of a suspect, against photos of faces. But the type of abstract sketches we all grow up with poses a different challenge.

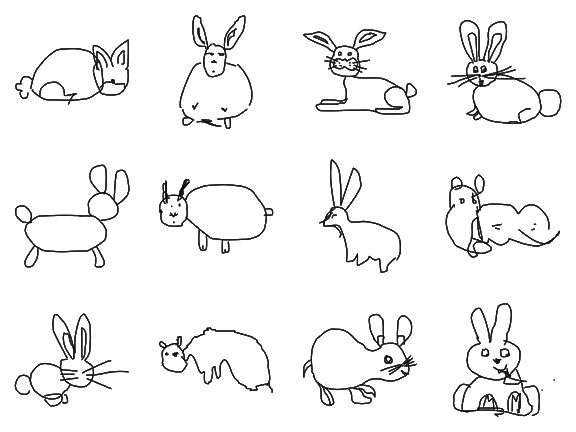

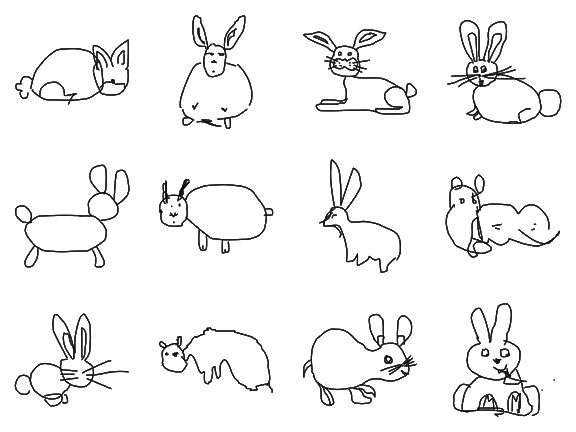

Think of it this way — if you’re asked to draw a rabbit, you would probably draw something with buck teeth, huge ears and exaggerated whiskers. Other people would easily recognize this cartoonish representation, just like my friends figured out my abstract rendering of Oscar the Grouch. But it doesn’t actually resemble the real thing in any meaningful way, so a computer would have no idea what it is. It’s sort of like training Watson to play “Jeopardy!” — there are subtle tricks and meanings that a human can distinguish, but which present a tough challenge for something built in the black-and-white, ones-and-zeroes world.

James Hays, assistant professor of computer science at Brown, and Matthias Eitz and Marc Alexa from TU Berlin, set out to solve this problem. They devised a list of everyday things people might feel like doodling, settled on 250 categories and used Amazon’s Mechanical Turk crowdsourcing platform to hire some sketch artists. They collected 20,000 unique sketches and used them to train existing machine-learning algorithms. The project culminated in a fun real-time computer Pictionary, where the system tries to recognize objects as the person draws them. Watch it in action at the bottom of the page.
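For a rough sense of what training a system on labeled sketches involves, here is a toy sketch in Python. It is not the team's method: the 4×4 grids, the category names "bar" and "pole", the ink-density features and the nearest-centroid classifier are all assumptions made up for illustration.

```python
from collections import defaultdict
from math import dist

# Hypothetical training data: tiny 4x4 "sketches", where '#' marks ink.
TRAINING = [
    ("bar",  ["####", "....", "....", "...."]),
    ("bar",  ["....", "####", "....", "...."]),
    ("pole", ["#...", "#...", "#...", "#..."]),
    ("pole", [".#..", ".#..", ".#..", ".#.."]),
]


def features(sketch, cells=2):
    """Split the grid into cells x cells blocks; return the inked fraction of each."""
    step = len(sketch) // cells
    feats = []
    for by in range(cells):
        for bx in range(cells):
            ink = sum(
                sketch[y][x] == "#"
                for y in range(by * step, (by + 1) * step)
                for x in range(bx * step, (bx + 1) * step)
            )
            feats.append(ink / (step * step))
    return feats


def train(labeled_sketches):
    """Average the feature vectors of each category into one centroid per label."""
    grouped = defaultdict(list)
    for label, sketch in labeled_sketches:
        grouped[label].append(features(sketch))
    return {
        label: [sum(column) / len(vectors) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def classify(model, sketch):
    """Return the label whose centroid is closest to the sketch's features."""
    f = features(sketch)
    return min(model, key=lambda label: dist(model[label], f))


model = train(TRAINING)
wobbly_pole = ["#...", "#...", ".#..", ".#.."]  # a badly drawn vertical line
print(classify(model, wobbly_pole))  # pole
```

The wobbly line matches none of the training drawings exactly, but it still lands closest to the "pole" examples. A real system would need far richer features to cope with 250 categories, where a random guess would be right only 0.4 percent of the time.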

Some of the drawings are priceless, and many of the computer guesses are hilarious. You can see the entire set here. Some favorites: the surprising accuracy of giraffe, the creativity of SpongeBob drawings, and the amazing inaccuracy of lobster, which, like a dog, apparently looks like everything.

To expand their data set, the team is thinking about gamifying this concept into something you can play on iOS or Android devices. There’s already an iPhone app, which you can download here.

The goal would be improved sketch-based search, the researchers say. That could improve computer accessibility for people with speech, movement or literacy impairments, and it could work in any language, too. The team presented their project at SIGGRAPH last month.

[via Brown University]