Every year at its Max conference, Adobe gives “sneak peeks” at new tech that will one day make its way into apps like Photoshop and its video editing software, Premiere. These demos gave us our first look at Adobe’s seemingly magic Content Aware Fill tool, which automatically fills in the space left behind when you Photoshop an object out. This year’s tech demos show off some truly impressive image editing feats, all of which are powered by the machine learning tech Adobe calls Sensei. Here are some of the most impressive.

Scene Stitch

Typically, when you Photoshop an object out of a photo, the algorithm looks around that photo for patterns and textures it can use to fill the hole. This is handy, but the results sometimes make it obvious that something is off, because the repeated patterns look unnatural. The next generation of the tech is smarter: it compares the image against an entire database of photos. So if you remove something from a photo of your lawn, it will look for similar patches of grass in other images, then pull in the data it needs.

Cloak

Removing a distracting object from a video is difficult. You can rely on finicky software or edit each frame individually, and even then you can end up with inconsistent results unless you meticulously compare each frame as you go. A feature called Cloak tracks an object and automatically replaces it (using a method similar to Scene Stitch) while ensuring that there’s no distracting flicker between frames. Versions of this are already possible with other software like After Effects, but Cloak looks dead simple, if it works as demonstrated.

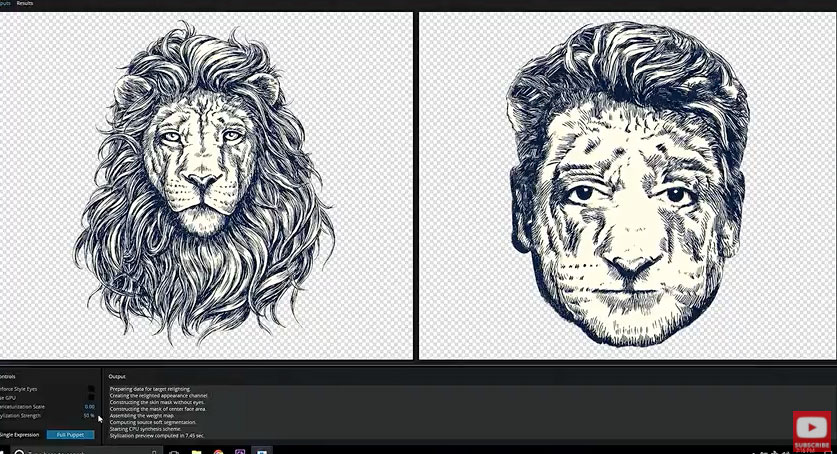

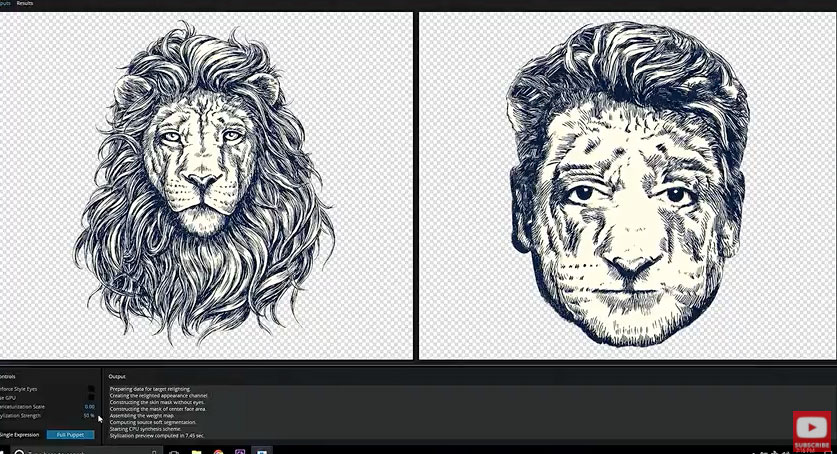

Project Puppetron

Photoshop’s stylized filters have never been very good, but this demo is about mimicking a real artistic style instead of trying to fake one. The algorithm can analyze a portrait rendered as a line drawing or in some other medium, then apply that same look to a regular portrait photograph. Apps like Prisma already do something similar, but the results shown here reproduce the original style much more faithfully.

Project Scribbler

I don’t like the idea of “colorizing” images that started out in black and white, but this deep learning-based image generation system does an impressive job with a single click. It analyzes the scene and then applies color with surprising accuracy. It can also apply textures from photos, in case you want to mock up a handbag from a sketch.

SonicScape

Video that captures a full 360-degree scene is on the rise, but recording sound to match it is difficult. SonicScape lets the sound adjust appropriately as you move your head around while watching VR content. It’s literally surround sound, but it reacts to your head movements.

PhysicsPak

This demo is geared toward illustrators and designers who want to fit a lot of objects into a specific shape. It’s a narrow use case, but the animation roughly two minutes into the video, in which cats pack themselves into the letter A, is mesmerizing and worth watching even if you can’t draw more than a stick figure.

One thing that’s very clear from these demos is that Adobe is prioritizing machine learning through its Sensei platform. Artificial intelligence touches every part of these demos, and it is already showing up in a lot of the photo, video, and illustration editing software in use today. That’s good news for bad artists, and for good artists who just want to spend less time staring at selection tools or rendering progress bars.