Stanford’s Artificial Neural Network Is The Biggest Ever

It's 6.5 times bigger than the network Google premiered last year, which has learned to recognize YouTube cats.

Last summer, in conjunction with Stanford researchers, Google[x], the R&D arm where ideas like Project Glass are born, built the world’s largest artificial neural network designed to simulate a human brain. Now Andrew Ng, who directs Stanford’s Artificial Intelligence Lab and was involved with Google’s previous neural endeavor, has taken the project a step further. He and his team have created another neural network, more than six times the size of Google’s record-setting achievement.

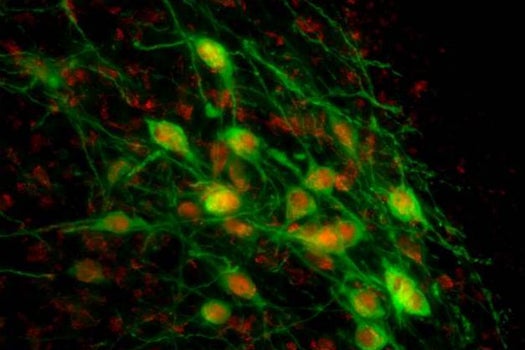

Artificial neural networks mathematically model the way biological brains work, allowing machines to learn in much the same way humans do. That makes them capable of recognizing things like speech, objects and even cats.

The model Google developed in 2012 was made up of 1.7 billion parameters, the digital version of neural connections. It successfully taught itself to recognize cats in YouTube videos. (Because, what else is the human brain good for?)

Since then, Ng and other Stanford researchers have created an even bigger network, with 11.2 billion parameters, that requires only the computational power of 16 servers equipped with graphics processing units, or GPUs, compared to the 16,000 CPU cores Google’s network required. The technology is being presented at the International Conference on Machine Learning in Atlanta this week.

From an AI standpoint, that means we’re getting a smidgen closer to being able to give our robots (or drones) human-level intelligence. On the other hand, we should probably just accept the fact that we’re that much closer to the sentient-robot takeover. Looking on the bright side, compared to what you’re lugging around on top of your neck, 11 billion neural connections isn’t that many: the human brain boasts some 100 trillion connections.
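For readers who want to check the scale comparisons, here is a minimal Python sketch that works through the arithmetic using only the figures quoted above. The constant and function names are made up for illustration, and the 100 trillion figure is the article's rough estimate, not a precise count.

```python
# Figures as quoted in the article; the names are illustrative, not from any paper.
GOOGLE_2012_PARAMS = 1.7e9          # Google's 2012 network
STANFORD_PARAMS = 11.2e9            # Ng's new network
HUMAN_BRAIN_CONNECTIONS = 100e12    # "some 100 trillion", a rough estimate


def ratio(larger: float, smaller: float) -> float:
    """Return how many times bigger `larger` is than `smaller`."""
    return larger / smaller


stanford_vs_google = ratio(STANFORD_PARAMS, GOOGLE_2012_PARAMS)
brain_vs_stanford = ratio(HUMAN_BRAIN_CONNECTIONS, STANFORD_PARAMS)

print(f"Stanford vs. Google: {stanford_vs_google:.1f}x")   # 6.6x
print(f"Brain vs. Stanford:  {brain_vs_stanford:,.0f}x")   # 8,929x
```

By these numbers the Stanford network is a bit more than six times the size of Google's, while the human brain still has nearly 9,000 times as many connections as the new network has parameters.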