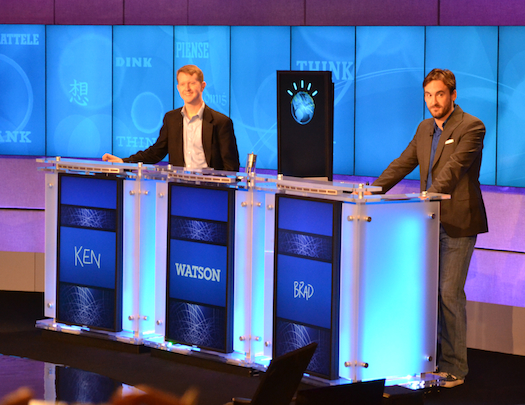

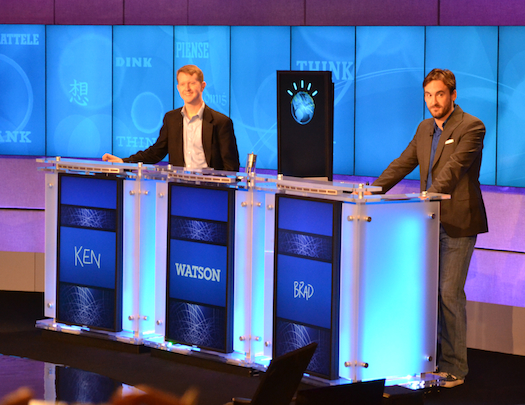

Today at IBM’s research center in Yorktown Heights, New York, a historic battle was staged. Two superstar Jeopardy! alums (Ken Jennings and Brad Rutter) faced off against IBM’s supercomputer Watson in a preview round of America’s most challenging trivia game, and we were there to see the thrilling man-on-machine action firsthand.

Watson, named after IBM’s founder, is one epic supercomputer. To handle the formidable task that competing on Jeopardy! presents, IBM spent years constructing a computer with 2,800 Power7 cores. That power is absolutely necessary: a single-core CPU, like those in many modern computers, would take about two hours to come up with an answer to a standard Jeopardy! question, rather than the three-second average Watson currently boasts.

A lot of the challenge in creating an algorithm that can answer Jeopardy! questions lies in the questions themselves. The language used in them is hardly ever simple, often incorporating wordplay, riddles, and irony. But there’s an additional problem: risk. In a split second, a competitor must assess confidence in an answer, weigh that confidence against the penalty for getting it wrong, and decide whether the question is worth answering based on those factors. That’s an intuitive effort for a human, but Watson had to be programmed with some incredibly complex reasoning to be able to do the same thing.

Watson has a certain self-awareness; it knows it won’t get every answer right, and its confidence has to pass a certain threshold before it will answer. Watson’s logo will change color to indicate its confidence: The lines that are part of its “avatar” will glow blue if Watson is confident, and orange if it’s not.
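To make that trade-off concrete, here is a minimal sketch of a buzz-or-pass decision. It is not IBM’s code: the function names, the 0.5 default threshold, and the simple win-or-lose-the-clue-value model are all assumptions for illustration. Only the blue and orange avatar colors come from what we saw.

```python
def expected_gain(confidence: float, clue_value: int) -> float:
    """Expected score change from buzzing in.

    Assumed model: a right answer wins clue_value, a wrong one loses it.
    """
    return confidence * clue_value - (1.0 - confidence) * clue_value


def should_buzz(confidence: float, clue_value: int, threshold: float = 0.5) -> bool:
    """Buzz only when confidence clears the (hypothetical) threshold
    and the expected gain is positive."""
    return confidence >= threshold and expected_gain(confidence, clue_value) > 0


def avatar_color(confidence: float, threshold: float = 0.5) -> str:
    """Blue when confident, orange when not, as described above."""
    return "blue" if confidence >= threshold else "orange"


if __name__ == "__main__":
    for conf in (0.9, 0.3):
        print(conf, should_buzz(conf, 400), avatar_color(conf))
```

In this toy model the penalty equals the reward, so anything above 50 percent confidence has a positive expected gain; a more cautious player would simply raise the threshold.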

The vagaries of language mean that the questions can be interpreted in all kinds of different ways, so merely figuring out what the question is asking accounts for most of Watson’s struggle. To that end, the computer actually comes up with thousands of different possible answers, and ranks them by their probability of being correct. When we watched the quick match, the top three answers were displayed on screen, along with a confidence percentage for each, and the second- and third-ranked answers were usually dramatically incorrect. It’s not likely that Watson will confuse, say, the author of one children’s book with the author of another. It’s more likely that Watson will completely misread what the question is even asking, and come up with an answer like “What is children?”
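The ranking step can be pictured with a few lines of code. This is a sketch under assumptions, not a description of Watson’s internals: the `Candidate` type, the `top_candidates` helper, and every confidence score below are made up for illustration.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    answer: str
    confidence: float  # estimated probability of being correct, 0.0 to 1.0


def top_candidates(candidates: List[Candidate], k: int = 3) -> List[Candidate]:
    """Return the k candidates with the highest confidence, best first."""
    return sorted(candidates, key=lambda c: c.confidence, reverse=True)[:k]


if __name__ == "__main__":
    # Invented scores; the real system weighs thousands of candidates.
    pool = [
        Candidate("What is children?", 0.04),
        Candidate("Who is Dr. Seuss?", 0.91),
        Candidate("What is a hat?", 0.07),
        Candidate("What is a cat?", 0.02),
    ]
    for c in top_candidates(pool):
        print(f"{c.answer:<22} {c.confidence:.0%}")
```

A steep drop between the first and second entries, as in this invented pool, matches what we saw on screen: one strong answer followed by a couple of wild guesses.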

In this introductory battle, we learned a few things about the adjustments made to the show to accommodate a more mechanical being than usual. The question feed goes directly into Watson, so it doesn’t have to “read” the question like the human competitors. But Watson does have to press a physical button to ring in, just like Ken Jennings and Brad Rutter, which pretty much eliminates the split-second advantage the computer has.

Interestingly, Watson will not be connected to the Internet, so there won’t be any instant Wikipedia lookups. (IBM’s reasoning: “Ken [Jennings] and Brad [Rutter] aren’t connected to the Internet, so Watson shouldn’t be either.”) So where does this AI brain get its information? IBM’s engineers, without the benefit of the Internet, have to load all of Watson’s information manually, which includes encyclopedias, thesauruses, dictionaries, books, screenplays, and other compendiums of human knowledge.

There will be no audio or video clues in the eventual game, though the questions that require betting (Daily Double and Final Jeopardy) will remain. Watson performs a risk analysis on the categories given for those types of questions, though its precise reasoning means that its wagers are often unusual figures (a human might bet $2,000 instinctively, but Watson’s risk assessment might indicate that a bet of $1,986 is more prudent). Watson actually learns in real time, within the category: if it doesn’t immediately understand a category, it will wait until a question or two in that category has been asked, and then use that data to figure out the pattern. Watson also takes the competition into account: If it’s losing, it might answer questions in which it has less confidence than it would if it were sitting on a large lead.
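One way to picture that last adjustment is a confidence threshold that slides with the score. IBM did not share a formula, so everything in this sketch is hypothetical: the `buzz_threshold` name, the 0.5 base, the 0.2 swing, and the $10,000 scale are assumptions chosen only to show the idea.

```python
def buzz_threshold(my_score: int, best_opponent_score: int,
                   base: float = 0.5, swing: float = 0.2,
                   scale: float = 10_000.0) -> float:
    """Confidence needed before buzzing, adjusted for the state of the game.

    Trailing lowers the bar (take more risks); leading raises it (play safe).
    All parameter values are illustrative assumptions.
    """
    deficit = best_opponent_score - my_score
    # Clamp so that a huge lead or deficit cannot push the bar past base +/- swing.
    ratio = max(-1.0, min(1.0, deficit / scale))
    return base - swing * ratio


if __name__ == "__main__":
    print("trailing:", buzz_threshold(1_000, 5_000))
    print("tied:    ", buzz_threshold(3_000, 3_000))
    print("leading: ", buzz_threshold(5_000, 1_000))
```

The same shape of reasoning could drive a wager: feed in scores and confidence, get back a number that need not be round, which would explain a bet like $1,986.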

I spoke to David Ferrucci, the Principal Investigator for Watson’s DeepQA Technology at IBM, about the things Watson struggles with. “The things that are most difficult for Watson,” he said, “are the things that haven’t been written about.” Small items that may stick in a person’s mind that could lead to the answers of trivia questions are not nearly as accessible to artificial intelligence programs like Watson, even with its massive memory bank.

Certain elements of human language are tricky, too. The stuff that seems like it might be the most difficult (like puns and wordplay) is felt out by “trigger” words in the category name, such as “sounds like.” But synonyms are often a bigger problem. In the answer “This liquid cushions the brain from injury,” Watson has to determine that “liquid” is in this one case interchangeable with “fluid,” and that “cushions” is interchangeable with “surrounds.” Humans know what the question is asking instinctively, but Watson has to analyze it from every angle.

In the preview match I saw, which was all too quick, Watson performed surprisingly well. Not just well; it won handily, with $4,400 to Ken Jennings’s $3,400 and Brad Rutter’s $1,200. None of the contestants, human or machine, actually got a question wrong, but Watson seemed to be fastest at chiming in. Its weakest category was “Children’s Book Titles”; Ken Jennings nearly ran the category, and Brad Rutter later quipped that “Neither Watson nor I have kids.”

The eventual contest will be a two-day tournament, in which the competitor with the most money after two days will be crowned the victor. The winner will be awarded $1 million, second place $300,000, and third place $200,000. IBM will donate the entirety of Watson’s winnings to charity, while Ken Jennings and Brad Rutter will donate half of their winnings.

Who will emerge victorious with such a big purse on the line? Will flesh-and-blood greed add caginess to the human competitors, giving Watson an advantage? What anecdote will Watson produce during Trebek’s condescending interview segment? (Trebek says he’ll “probably try to have a little fun with him.”) We’ll have to wait until February 14 to find out.