The line between synthetic voices and real ones has been blurring for years. We regularly hear computer-generated reps on customer service lines or reading articles aloud to us online. UK company Sonantic famously cloned Val Kilmer’s voice after surgery for throat cancer left him unable to speak. The public first heard the result in the documentary Val, and it reached tens of millions in this summer’s Top Gun: Maverick.

Last Wednesday, Amazon announced a move that broadens the reach of such technology to users of its Alexa smart assistant. An upcoming update to the tech will allow them to replace the standard voice with that of anyone, including deceased loved ones. The company claims that the technology, which does not yet have a release date, can generate a clone of a person’s voice with as little as one minute of audio. Whether one might find the notion of an AI-generated grandma reading a bedtime story from The Great Beyond creepy or endearing, the move represents a step forward in making synthetic voices more accessible.

As recently as four years ago, capturing enough of an individual’s vocal patterns and intonations was a much longer process. For example, VocalID, a company that provides synthetic voices for clients with conditions that leave them unable to speak, required several hundred sentences’ worth of data to accurately recreate an individual’s voice. Around the same time, a similar product called Speech Morphing required about an hour’s worth of scripted inputs.

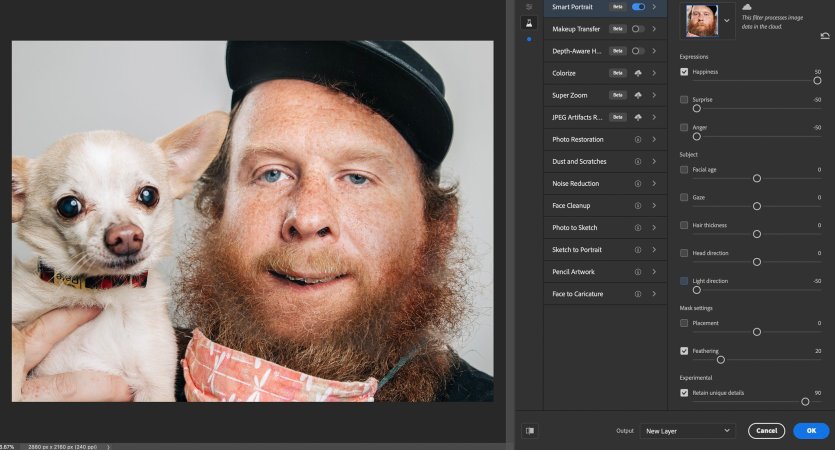

Synthesis, though, has been getting progressively easier and more common. In 2018, Chinese company Baidu made headlines for demonstrations of its Deep Voice tech that needed only 60 seconds of audio to synthesize a voice. Today, the Veritone platform allows celebrities to sell synthetic versions of their voices to be used in endorsement deals. And the Overdub feature from the company Descript lets podcast engineers fix vocal flubs or replace words outright in a recording without having to pull the host back into the studio.

Recreating voices is a powerful tool for those who have lost the ability to speak due to injury or illness. Some 2 million people in the US require the help of so-called augmentative and alternative communication (AAC) to speak. The causes of speech disability are broad, ranging from diseases that impact motor control, like cerebral palsy, to events like brain injuries or strokes, so a great number more may stand to benefit from making the technology more accessible. One estimate posits that about 5 million Americans and 97 million people worldwide could benefit from AAC. The ability to use a custom and personalized voice instead of an out-of-the-box generic “robo voice” could be transformative.

It’s important to recognize, however, that voice synthesis may not be used only in altruistic ways. The potential to use a synthetic version of a public figure or celebrity’s voice in a deepfake is clear: Kilmer’s Sonantic-provided voice, after all, was created using already existing footage and audio. And, at the same time, we’re still finding the guardrails around when and how a show, brand, or director should disclose that they’re using an AI-generated voice. When director Morgan Neville tapped a synthetic voice to generate three lines of dialogue from Anthony Bourdain in the documentary Roadrunner, there was backlash over the film’s failure to disclose how the lines were produced.

For those interacting with the technology day-to-day via a platform like Alexa, the more common risk is the ooky-spooky-ness of falling into the uncanny valley. If a recreation misses the mark, even slightly, the artificial voice might tip the balance right into creep-town. “There are certainly some risks, such as if the voice and resulting AI interactions doesn’t match well with the loved ones’ memories of that individual,” Michael Inouye, an analyst at ABI Research focused on emerging internet technologies like the Metaverse, told CNN.