Earlier this year, we learned that the Mars Opportunity rover had officially ended its service. It traveled more than a marathon’s distance in its time on our neighboring planet, which far exceeded its original mission objective. During Opportunity’s tenure, it sent back some amazing images of Mars that provided useful scientific data as well as an opportunity to marvel at the majesty of the planet’s terrain.

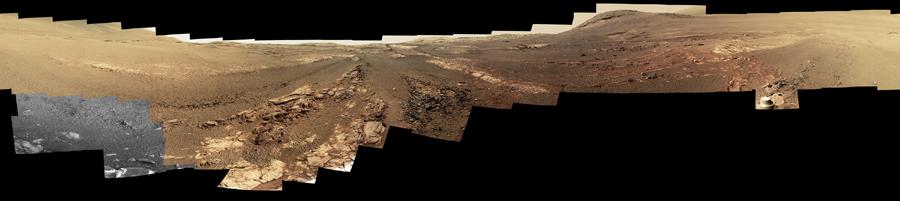

The final image it took is a massive panorama that took 29 days to shoot. It gives us a view of the Perseverance Valley, where the rover now sits. The panorama itself contains image data from 354 individual photos that were “stitched” together using software.

If you look carefully at the final photo, you’ll notice that a small piece in the bottom left is still in black and white, while the rest is in color. This isn’t an artistic choice, but rather a technical detail with a surprisingly sad explanation. Opportunity shot the final photos needed to fill in that section in color, but never got the chance to transmit them.

The charge-coupled device (CCD) sensors in the rover’s panoramic camera (referred to as Pancam) only take black-and-white images. A pair of cameras set nearly a foot apart helped calculate distances for the rover’s travels and precisely locate objects in its field of view, so it could accurately position its robotic arm.

According to Jim Bell, the Pancam Payload Element Lead and Arizona State University professor, the color comes from a wheel of filters that rotates in front of the camera lenses. Once a filter is in place, the sensor captures an image that’s restricted to specific wavelengths of light. There are eight total color filters on each wheel, but one is dedicated specifically to taking pictures of the sun, so it severely cuts the amount of light that gets in.

So why, then, is part of Opportunity’s final panorama in monochrome? While the rover shot the necessary images to provide color information, it never had the required bandwidth to send them back to Earth before the fateful storm arrived that eventually ended the rover’s mission. The color version of the image combines pictures shot through three of the filters, centered around the following wavelengths: 753 nanometers (near-infrared), 535 nanometers (green), and 432 nanometers (blue). That’s similar to what you’d find in a regular digital camera.

Unfortunately, the final frames needed to fill in the last bits of color in the panorama never made it back to Earth.
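
To make the idea concrete, here is a minimal Python sketch of how three monochrome filter frames could be stacked into one color image, and why a region that is missing some of its frames ends up gray. It is an illustration only, not the actual Pancam processing pipeline: the function name `compose_color`, the use of `None` for frames that never arrived, and the gray fallback are all assumptions made for this example.

```python
from typing import List, Optional, Tuple

# One monochrome frame: brightness per pixel (0-255), or None where the
# data was never received. This representation is an assumption.
Frame = List[List[Optional[int]]]
Pixel = Tuple[int, int, int]


def compose_color(near_ir_753: Frame, green_535: Frame, blue_432: Frame) -> List[List[Pixel]]:
    """Stack three filter frames into an RGB image, pixel by pixel.

    The 753 nm frame is shown as red, 535 nm as green, and 432 nm as blue.
    Where any of the three samples is missing, fall back to gray using
    whatever brightness samples did arrive.
    """
    image = []
    for row_ir, row_green, row_blue in zip(near_ir_753, green_535, blue_432):
        row = []
        for samples in zip(row_ir, row_green, row_blue):
            if None not in samples:
                row.append(samples)  # all three filters present: full color
            else:
                received = [s for s in samples if s is not None]
                gray = sum(received) // len(received) if received else 0
                row.append((gray, gray, gray))  # monochrome fallback
        image.append(row)
    return image


# Two pixels: the first has all three filters, the second only one frame.
panorama = compose_color(
    near_ir_753=[[200, 180]],
    green_535=[[120, None]],
    blue_432=[[60, None]],
)
print(panorama)  # [[(200, 120, 60), (180, 180, 180)]]
```

The second pixel comes out with equal red, green, and blue values, which is exactly what a black-and-white patch looks like in an otherwise colorful image.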

This method for capturing photos sounds complex, but it’s actually very similar to the way almost all modern digital cameras work. Each pixel on the sensor inside your smartphone camera, for instance, sits behind a filter that’s either red, green, or blue. Those filters are arranged in a layout typically known as the Bayer pattern. When you snap a photo, the camera knows how much light each pixel received and what color filter it passed through, and it uses that information to “debayer” the image and give it its colors. Rather than using pixels on one sensor, the Pancam takes multiple pictures representing different wavelengths of light and then combines them later in a similar fashion.
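
For readers curious what “debayering” involves, the following is a rough Python sketch. It assumes an RGGB filter layout and fills in each pixel’s missing colors by averaging nearby pixels that sit behind the matching filter. Real cameras use more sophisticated interpolation; the function names `bayer_color` and `debayer` are made up for this example.

```python
from typing import List, Tuple


def bayer_color(y: int, x: int) -> str:
    """Return the filter color over pixel (y, x), assuming an RGGB layout."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def debayer(mosaic: List[List[int]]) -> List[List[Tuple[int, ...]]]:
    """Estimate an (R, G, B) value for every pixel of a Bayer mosaic.

    Each pixel recorded only one color. For each output pixel, average
    every sample of each color found in the surrounding 3x3 window.
    """
    height, width = len(mosaic), len(mosaic[0])
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            sums = {"R": 0, "G": 0, "B": 0}
            counts = {"R": 0, "G": 0, "B": 0}
            # Visit the 3x3 neighborhood, clipped at the image edges.
            for ny in range(max(0, y - 1), min(height, y + 2)):
                for nx in range(max(0, x - 1), min(width, x + 2)):
                    color = bayer_color(ny, nx)
                    sums[color] += mosaic[ny][nx]
                    counts[color] += 1
            row.append(tuple(
                sums[c] // counts[c] if counts[c] else 0 for c in "RGB"
            ))
        image.append(row)
    return image


# A 2x2 mosaic: red=100, the two greens=50, blue=20.
print(debayer([[100, 50], [50, 20]])[0][0])  # (100, 50, 20)
```

The Pancam approach skips this guessing step for color: because each filter frame covers the whole sensor, every pixel gets a real measurement at each wavelength, at the cost of taking several exposures.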

Unlike your digital camera, however, the Pancam captures wavelengths beyond what you’d want to record. According to Bell, the cameras go further into both the red and blue ends of the spectrum to gain access to infrared and ultraviolet light that’s outside the scope of human vision.

While the image is amazing, and a little sad, it’s also a fitting tribute to what the Pancam accomplished. The cameras have a resolution of just one megapixel, but as Bell says, the device was designed back in 1999 and 2000 when “a megapixel meant something.”