The third in a series of posts about the major myths of robotics, and the role of science fiction in creating and spreading them. Previous topics: Robots are strong, the myth of robotic hyper-competence, and robots are evil, the myth of killer machines.

In 1993, Vernor Vinge wrote a paper about the end of the world.

“Within thirty years, we will have the technological means to create superhuman intelligence,” writes Vinge. “Shortly after, the human era will be ended.”

At the time, Vinge was something of a double threat: a computer scientist at San Diego State University, as well as an acclaimed science fiction writer (though his Hugo awards would come later). That last part is important, because the paper, written for a NASA symposium, reads like a brilliant mix of riveting science fiction and secular prophecy.

“The Coming Technological Singularity” outlines a reckoning to come, when ever-faster gains in processing power will blow right past artificial intelligence—systems with human-like cognition and sentience—and give rise to hyper-intelligent machines.

This is what Vinge dubbed the Singularity, a point in our collective future that will be utterly and unknowably transformed by technology’s rapid pace. The Singularity, which Vinge explores in depth but humbly sources back to the pioneering mathematician John von Neumann, is the futurist’s equivalent of a black hole, describing the way in which progress itself will continue to speed up, moving more quickly the closer it gets to the dawn of machine superintelligence. Once artificial intelligence (AI) is accomplished, the global transformation could take years, or mere hours. Notably, Vinge cites an SF short story by Greg Bear as an example of the latter outcome, like a prophet bolstering his argument for the coming end-times with passages from scripture.

But the point here is simple: the Singularity will arrive through momentum. Smart AIs will beget genius AIs, cybernetically enhanced post-humans will form vast hiveminds, and these great digital powers will not only learn and share every bit of available data, but redefine how information is learned and shared. “The physical extinction of the human race is one possibility,” writes Vinge, though he also allows for a future overseen by “benevolent gods.” He paints a vivid array of options, discussing the psychological pitfalls of digital immortality (citing yet another SF story, this one by Larry Niven), and the prospect of the Internet suddenly waking up into collective, nightmarish sentience. Whatever the outcome, the world as we know it will have ended.

The most urgent feature of the Singularity is its near-term certainty. Vinge believed it would appear by 2023, or 2030 at the absolute latest. Ray Kurzweil, an accomplished futurist, author (his 2005 book “The Singularity Is Near” popularized the theory) and recent Google hire, has pegged 2029 as the year when computers will match and exceed human intelligence. Depending on which luminary you agree with, that gives humans somewhere between 9 and 16 good years before a pantheon of machine deities gets to decide what to do with us.

If you’re wondering why the human race is handling the news of its impending irrelevance with such quiet composure, it’s possible that the species is simply in denial. Maybe we’re unwilling to accept the hard truths preached by Vinge, Kurzweil and other bright minds.

Just as possible, though, is another form of denial. Maybe no one in power cares about the Singularity, because they recognize it as science fiction. It’s a theory that was proposed by an SF writer. Its ramifications are couched in the imagery and language of SF. To believe in the Singularity, you have to believe in one of the greatest myths ever told by SF: that robots are smart, and always on the verge of becoming smarter than us.

More than 60 years of AI research indicates otherwise. Here’s why the Singularity is nothing more, or less, than the rapture for nerds.

* * *

For all their attention to detail, SF writers have a strange habit of leaving the room during the birth of machine sentience.

Take Vernor Vinge’s 1981 novella, True Names, and its power-hungry AI antagonist. It’s called the Mailman, because of its snail-mail-like interaction with other near-future hackers (it initially masquerades as one of them), requiring a day or more to craft a single response. When the rapacious software finally battles a small group of hackers in cyberspace, Vinge’s description stretches for pages, as both sides commandeer the collective processing power of the entire networked globe, leaving widespread outages and market crashes in their wake.

And yet, the origins of the Mailman—the world’s first truly self-aware computer system—are relegated to a few paragraphs during the novella’s final scene. Created a decade earlier by the NSA, the AI was an unthinking security program, an automated sleeper agent designed to sit in a given computer network and gradually gather data and awareness. The government killed the project, but accidentally left a shred of code. As one hacker explains, “Over the years it slowly grew—both because of its natural tendencies and because of the increased power of the nets it lived in.”

That’s it. The greatest achievement in the field of AI occurs by accident, and unobserved.

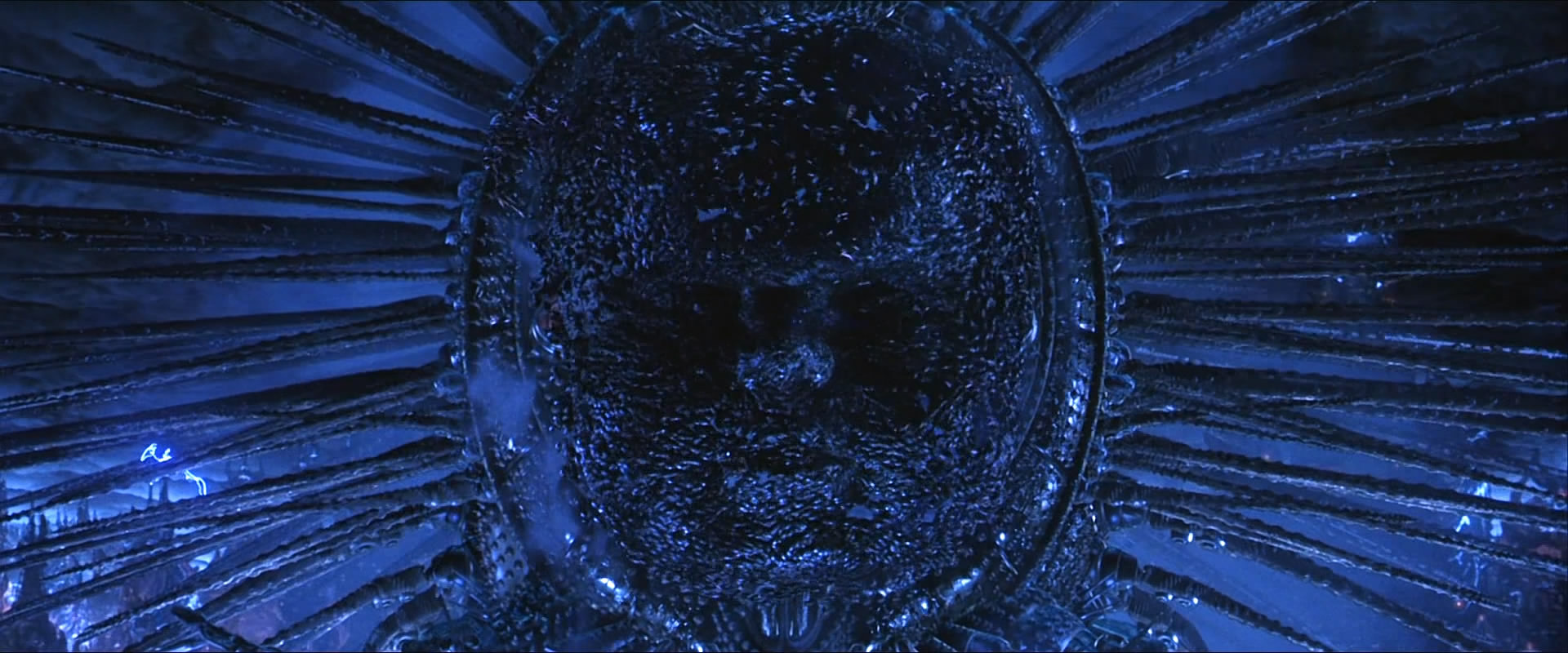

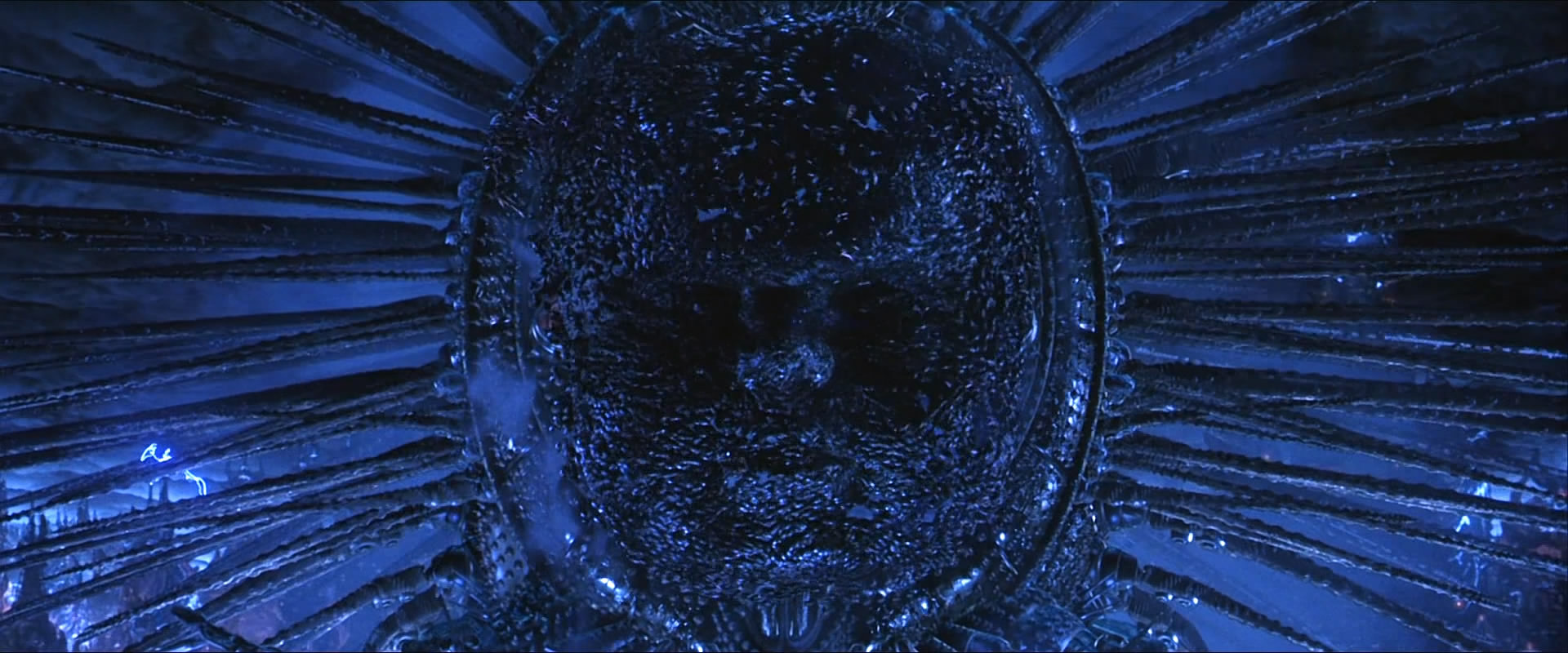

In fairness to Vernor Vinge, this is one of the most common dodges in robot SF. In the 1970 movie Colossus: The Forbin Project, as well as the 1966 novel it was based on, true AI is the spontaneous byproduct of two near-AIs meeting. Colossus, an automated defense system put in charge of America’s nuclear arsenal, starts sharing information with its Soviet counterpart, Guardian. But whatever the two programs have to say, the audience never sees or hears it, and neither do the human characters. The systems mingle, and, within minutes, emerge as a single, omniscient entity. The same goes for Colossus’ spiritual successor, Skynet. In 1984’s The Terminator, we learn that the genocidal military system simply “got smart.” Case closed. The 1991 sequel adds a few more details, stipulating that Skynet became self-aware roughly 25 days after being turned on. Later, less influential Terminator sequels would elaborate on the system’s sudden ascension, and prove that, from a narrative perspective, some villains are better left in the shadows. But the first two films are determined to look away from the fictional mechanics of machine sentience.

In all of these stories, though, the message is identical: the arrival of AI is predestined. Logic demands it, based on the simple, irrefutable observation that computers are improving so very quickly. With all that momentum, it’s inevitable that automated systems will barrel headlong into sentience. It’s such a foregone conclusion that any cursory Web search will produce a battery of similar claims from non-fictional AI researchers.

Unfortunately, those same search results reveal just how terrible AI researchers are at predicting the future. The worst offenders are two of the field’s giants. In 1957, computer scientist and future Nobel-winner Herbert Simon predicted that, by 1967, psychology would be a largely computerized field, and that a computer would beat a flesh-and-blood world champion at chess. He was off by 30 years with the latter claim, and the former is as mystifying today as it was in 1957. But Simon was on a roll, and announced in 1965 that “machines will be capable, within twenty years, of doing any work a man can do.”

Marvin Minsky, the co-founder of MIT’s Artificial Intelligence Laboratory, was even more bullish about artificial general intelligence (AGI), the most common term within AI research for human-level machine smarts. In 1970, Minsky estimated its arrival in just three to eight years.

Rest assured, AGI did not secretly appear in 1978, or in 1985, and begin gobbling data by the terabyte. But that hasn’t stopped the likes of Vinge and Kurzweil from issuing their own deadlines. It also hasn’t fully exposed the Singularity as a reiteration of the boundless, unsupported optimism that drained AI research of funding for decades (starting, coincidentally enough, soon after Minsky’s 1970 prediction). Like characters caught in The Terminator’s time loop, we seem doomed to repeat the same cycle of prophecy and embarrassment, and to repeat Simon and Minsky’s central mistake.

To see AGI as unavoidable, you have to do as SF so often does, and imagine robots as monstrous or messianic geniuses waiting to happen, and humans as a bunch of dummies.

* * *

If you’re one of the Singularity’s true believers, you’re probably armed with a shocking amount of jargon borrowed from the intersection of AI research and neuroscience. Like all great prophecy language, these words ignite the mind, even without elaboration. Neural networks. Neuromorphic systems. Computer brains are functioning like our own, and, on some level, have been for decades. There are promising real-life projects to cite, as well, like the EU’s $1.3 billion Human Brain Project, whose ambitious goals include creating a full, digital simulation of a biological brain, and using that model to drive physical robots. Surely, the Singularity is upon us.

But with few exceptions, the path to machine hyper-intelligence passes directly through the human brain. And anyone who claims to understand biological intelligence is a fool, or a liar.

Neuroscience has made incredible progress in directly observing the brain, and drawing connections between electrical and neurochemical activity and some forms of behavior. But the closer we zoom in on the mind, the more complex its structures and patterns appear to be. The purpose of the Human Brain Project, as well as the United States’s BRAIN Initiative, is to address the fact that we know astonishingly little about how and why human beings think about anything. These projects aren’t signs of triumph over our biology, or indications that the finish line is near. They’re admissions of humility.

Meanwhile, the Singularity’s proponents still believe that intelligence can be brute-forced into existence, through sheer processing power. Never mind that Simon and Minsky thought the same, and were proven utterly, embarrassingly wrong, or that the only evidence of this approach working isn’t evidence at all, but science fiction. In those stories, AGI is so feasible, it practically wishes itself into being.

“Anyone who’s done real science, whether it’s bench science in a lab, or coding, knows that it’s not like you hit compile, and suddenly your program is working perfectly, and bootstrapping itself to godhood,” says Ramez Naam, an SF writer, technologist, AI patent holder, and, ironically enough, a lecturer at Singularity University. Though Naam’s own novels include the networking and uploading of human consciousness, he’s become one of the most vocal critics of the Singularity. The theory’s assumption of AGI inevitability, he argues, is no different from our most outdated AI expectations.

“If you asked someone, 50 years ago, what the first computer to beat a human at chess would look like, they would imagine a general AI,” says Naam. “It would be a sentient AI that could also write poetry and have a conception of right and wrong. And it’s not. It’s nothing like that at all.” Though it outplayed Garry Kasparov in 1997, IBM’s Deep Blue is no closer to sentience than a ThinkPad laptop. And despite its Jeopardy! prowess, “IBM’s Watson can’t play chess, or drive one of Google’s robotic cars,” says Naam. “We’re not actually trending towards general AI in any way. We’re just building better and better specialized systems.”

Even if you were to awkwardly graft various specialized systems onto one another, they wouldn’t add up to AGI. Actually, that might work in SF, where AGIs seem to play by the same oversimplified rules as Hollywood’s version of hackers, able to brush aside stark differences in programming languages and data protocols, and start siphoning and wielding information like some sorcerer’s arcane, boundless resource. Provided you could unify an assemblage of smart machines into one system, it still wouldn’t magically spawn creative, adaptive cognition, or an ability to learn (rather than memorize). Though human intelligence has yet to be quantified, we at least know that it’s profoundly non-linear. Synaptic activity can flow across vast, seemingly unrelated regions of the brain, sacrificing speed and efficiency for a looping, free-ranging flexibility. And our memories are malleable and incomplete, like nothing in the coded or mechanical world. Are these design bugs, begging to be fixed? Or are they features, and proof that intelligence is a black box that we still don’t have the tools to properly crack open? These are the questions that still have to be answered, before anyone can make the first, primitive attempts at building AGI from scratch, or somehow merging humans and machines into a hybrid that amounts to it.

I’m not arguing that machine sentience is an impossibility. Breakthroughs can’t be discounted before they have a chance to materialize out of thin air. But belief in the Singularity should be recognized for what it is—a secular, SF-based belief system. I’m not trying to be coy, in comparing it to prophecy, as well as science fiction. Lacking evidence of the coming explosion in machine intelligence, and willfully ignoring the AGI deadlines that have come and gone, the Singularity relies instead on hand-waving. That’s SF-speak for an unspecified technological leap. There’s another name for that sort of shortcut, though. It’s called faith.