Consider the verb “removing.” As a human, you understand the different ways that word can be used—and you know that visually, a scene is going to look different depending on what is being removed from what. Pulling a piece of honeycomb from a larger chunk looks different from a tarp being pulled away from a field, or a screen protector being separated from a smartphone. But you get it: in all those examples, something is being removed.

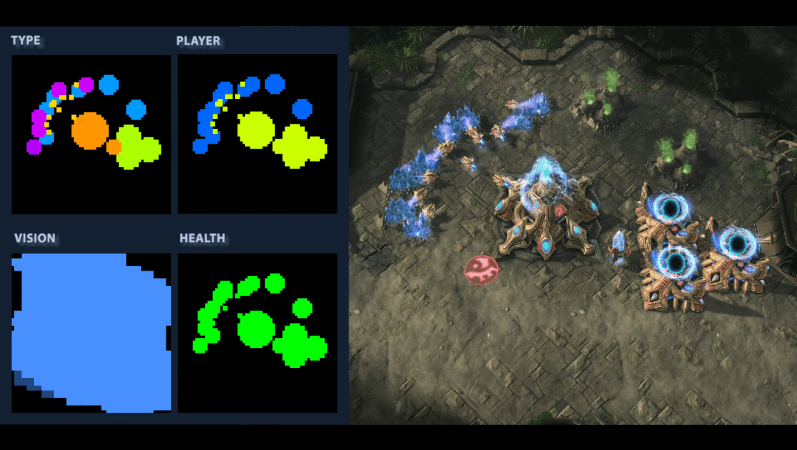

Computers and artificial intelligence systems, though, need to be taught what actions like these look like. To help with that, IBM recently published a large new dataset of three-second video clips that researchers can use to train machine learning systems, giving them visual examples of action verbs like “aiming,” “diving,” and “weeding.” Exploring it (the car video above and the bee video below come from the dataset and illustrate “removing”) offers a strange tour of the sausage-making process behind machine learning. Under “winking,” viewers can see a clip of Jon Hamm as Don Draper giving a wink, as well as a moment from The Simpsons; there’s plenty more where that came from. Check out a portion of the dataset here; there are more than 300 verbs and a million videos in total.

Teaching computers how to understand actions in videos is tougher than getting them to understand images. “Videos are harder because the problem that we are dealing with is one step higher in terms of complexity if we compare it to object recognition,” says Dan Gutfreund, a researcher at a joint IBM-MIT laboratory. “Because objects are objects; a hot dog is a hot dog.” Meanwhile, understanding the verb “opening” is tricky, he says, because a dog opening its mouth, or a person opening a door, are going to look different.

This is not the first dataset researchers have created to help machines understand images or videos. One called ImageNet has been important in teaching computers to identify what is in pictures, and other video datasets already exist, too: one is called Kinetics, another focuses on sports, and still another, from the University of Central Florida, contains actions like “basketball dunk.”

But Gutfreund says that one of the strengths of the new dataset is its focus on what he calls “atomic actions,” basics ranging from “attacking” to “yawning.” Breaking things down into atomic actions is better for machine learning, Gutfreund says, than focusing on more complex activities such as changing a tire or tying a necktie.

Ultimately, he says, he hopes this dataset will help computer models understand simple actions as easily as humans can.
