Psychology 101 textbooks typically include epic tales of the discipline’s history. They chronicle inventive experiments and dazzling results from days of old. But many of the things we think we know about the human mind—like the depth of the parent-child bond, or our inclination to submit to authority even when it feels unethical—come from research that’s since been found flimsy, biased, or too unethical to be repeated today. Here are the troublesome backstories to seven classic psychological studies.

Little Albert

In the early 20th century, many American psychologists were under the influence of “behaviorism,” the belief that our behaviors aren’t so much a matter of free will, but of animal-like reflexes and learned responses guided by past experiences. Russian scientist Ivan Pavlov showed that dogs could be taught to salivate at the sound of a bell. But John B. Watson and his colleagues at Johns Hopkins University wanted to show that such conditioning applied to humans, too.

To do this, they borrowed a 9-month-old baby they called “Albert” and tried to teach him a specific fear. Every time the baby touched a white rat, the researchers made a harsh sound with a hammer, causing him to cry. Eventually, the baby would cry at the sight of the white rat—or a rabbit, or a Santa Claus mask—alone.

At the time the results were published in February 1920, the study was well-regarded and it even appears to have inspired promising new psychologists to enter the field. But harsh criticism of the research, both ethical and practical in nature, has arisen over the intervening century. Albert could not give informed consent to such experimentation, and likely continued to fear certain stimuli long after the study was finished. At the same time, with just one participant and no control group, Watson’s data was effectively meaningless.

The Monster Study

Wendell Johnson was a life-long stutterer. A psychologist and preeminent speech pathologist at the University of Iowa in the 1930s, he set about researching the origins of his own condition. Like the Little Albert experimenters, Johnson harbored a belief that stuttering “begins not in the child’s mouth but in the parent’s ear.” In other words, it had nothing to do with atypical neurological or muscular patterns; it was learned.

Determined to test this hypothesis, Johnson and his collaborator, Mary Tudor, took a dozen children with normal speech patterns from a Davenport, Iowa, orphanage and divided them into two groups. Kids in one group were told they had stutters. Kids in the other group weren’t. Tudor’s experiment had mixed results: two children improved their elocution, two didn’t change, and two lost fluency.

Sixty years later, as the New York Times reported, the orphans—now grown up—sued the state and the university, “citing among other things the infliction of emotional distress and fraudulent misrepresentation.” Needless to say, “the monster study,” as it quickly came to be known, could not be repeated today given its long-term consequences for young participants.

Project MKUltra

From 1953 to 1973, the CIA funded covert research on mind control at dozens of reputable institutions, including universities and hospitals. A source of inspiration to horror-fueled shows like Stranger Things and films like Conspiracy Theory, the covert project is today recognized as torture. In the hopes of revealing strategies by which the government could deprogram and reprogram spies or prisoners of war, unwitting civilians were drugged, hypnotized, subjected to electroshock therapy, and shut away, sometimes for months, in sensory deprivation tanks and isolation chambers.

One of the proponents and practitioners of this research was psychiatrist Donald Ewen Cameron of McGill University’s Allan Memorial Institute. There, patients who entered with everyday conditions like mild anxiety would be subjected to the full bevy of “psychic driving” techniques, which left many of them amnesic, incontinent, child-like in their dependence, and deeply traumatized. His work led to dozens of lawsuits, new legislation, and large settlements to the victims. But at the time he was highly regarded, heading up the American, Canadian, and World psychiatric associations, and even participating in the Nuremberg doctors’ trial that censured experimentation and mass murder by Nazi physicians.

Of course, Cameron’s practices would never pass muster with an ethics review board today… but they probably wouldn’t have passed then, either.

Robbers Cave

The same year Lord of the Flies was published (1954), married psychologists Muzafer and Carolyn Wood Sherif took a group of 22 boys into the woods of Robbers Cave State Park in Oklahoma for a better look at realistic conflict theory.

Over the course of three weeks, the researchers planned to divide the children into two groups; ask them to compete at camp games with the secret hope of creating negativity between the groups; and then force them to come together and cooperate in a crisis. Along the way, the researchers manipulated the circumstances, encouraging the boys to fight and supplying them with tools to fuel their infighting.

The research made Muzafer Sherif a hero in his field, and the study remains important to conflict theorists. But a closer inspection suggests the results are unreliable (though not meaningless). Worse, the whole thing was unethical: Neither the boys nor, it seems, their parents, were informed about the true nature of the summer camp, according to Gina Perry, author of the new book The Lost Boys, which documents Sherif’s work.

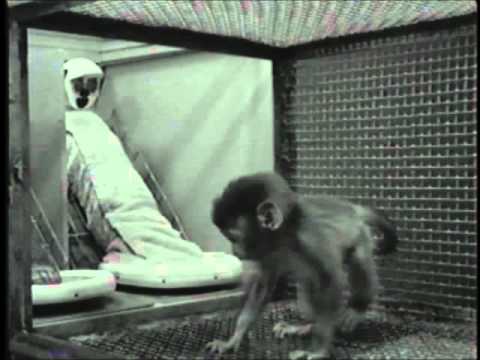

Harlow’s monkeys

In the 1950s, University of Wisconsin psychologist Harry Harlow constructed a series of experiments to study the impact of isolation, separation, and neglect on children. To his credit, he didn’t use human babies (as some of his predecessors might have), but the effect on his long-established rhesus macaque colony is historically harrowing.

While his studies spanned decades and took many forms, his most famous experiment forced baby monkeys to choose between two fake surrogates. The “iron maiden” was made only of wire, but had bottles full of milk protruding from its metal chest. The other was covered in a soft cloth, but entirely devoid of food.

If the behaviorist theories of the day were right—that parents were there to provide resources, not comfort—the babies should have chosen the surrogate who offered them food over the surrogate who offered them nothing but comfort. But that theory quickly crumbled. The monkeys spent most of their time clinging to the cloth mother, crying out in pain. They visited the iron maiden only when they were too hungry to avoid her metallic frame any longer.

Harlow’s monkey studies were considered foundational to the field of parent-child research, but many contemporary psychologists argue such experiments should never be repeated—on humans or animals.

Milgram experiment

In the 1960s, Yale University psychologist Stanley Milgram devised a way to test the most pressing existential question of the era: Could obedience to authority be enough to persuade ostensibly good people to commit acts generally considered evil?

In the lab, Milgram assigned research participants the role of teacher, then asked them to administer ever-increasing electric shocks to their “student.” As the shocks escalated, the student—secretly a member of the research team and safe from any real harm—cried, yelled, and begged to be freed. Some of the “teachers” refused to continue. But roughly 65 percent administered the most intense voltage anyway.

The experiment has been controversial since its conception. “The procedure created extreme levels of nervous tension,” Milgram wrote in his original 1963 study. “Profuse sweating, trembling, and stuttering were typical expressions of this emotional disturbance. One unexpected sign of tension—yet to be explained—was the regular occurrence of nervous laughter.” Whether they quit early, or made it to the finish line, participants were riled.

Despite the distress such studies clearly generate, the shock experiment continues to be replicated today, often with similar results. And new research is using Milgram-like methods to study human-robot relationships.

Stanford prison experiment

In 1971, Stanford psychology professor Philip Zimbardo put a group of college students in a makeshift jail, randomly assigned them to be guards or prisoners, and watched what unfolded. It appears many of the guards began to act in stereotypically authoritarian ways, though only after some poking and prodding from Zimbardo, the self-appointed prison superintendent. The prisoners, meanwhile, reportedly accepted much of this abuse, though some quit the experiment. Ultimately, the situation escalated to the point that Zimbardo abandoned the project after just six days.

The experiment remains legendary—the stuff of films, documentaries, and textbooks. But scrutiny of Zimbardo’s methodologies, conclusions, and ethics has grown to the point that many professors now omit the experiment from their curriculum. “From the beginning, I have always said it’s a demonstration. The only thing that makes it an experiment is the random assignment to prisoners and guards, that’s the independent variable. There is no control group. There’s no comparison group. So it doesn’t fit the standards of what it means to be ‘an experiment,’” Zimbardo said in defense of his work. “It’s a very powerful demonstration of a psychological phenomenon, and it has had relevance.”