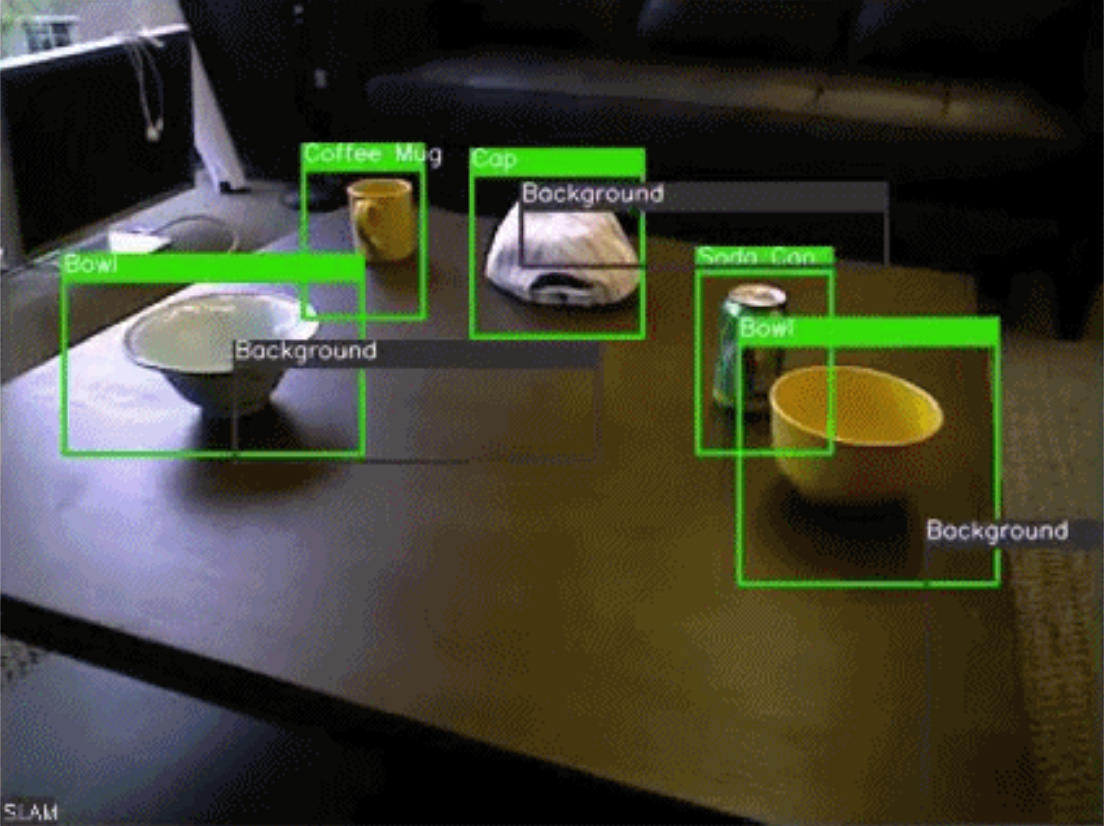

If the above gif looks familiar, it’s probably because it looks eerily similar to this:

This, of course, is how the T-800 Terminator sees and recognizes objects in the world after arriving from the future in Terminator 2: Judgment Day.

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory have created an object recognition system that, much like the T-800, can accurately identify objects, and it needs only an ordinary RGB camera (no threatening blood-red color filter required). The system could help future robots interact with objects more efficiently as they navigate our complex world.

“Ideally we want robots to be cleaning our dishes at some point in the future. We want recognition systems where it does in fact see the objects that the robot should care about and manipulate them,” says Sudeep Pillai, lead author of the study.

The system builds on classical recognition systems as well as on a technique called “simultaneous localization and mapping” (SLAM), which gives devices such as autonomous vehicles and robots three-dimensional spatial awareness. The team’s new “SLAM-aware” system maps out its environment while collecting information about objects from multiple viewpoints. With each new angle, the program predicts what the objects are by breaking them down into more basic components. It then compares this compiled description to a database of existing object descriptions. For example, if the SLAM-aware system sees a chair, it may break it down into a seat, four legs, and a back.
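To make the matching step concrete, here is a toy Python sketch built around the chair example. It assumes each object can be summarized as a count of named parts, that views are merged by keeping the largest count seen for each part, and that the closest database entry by cosine similarity wins. The database, part names, and merge rule are all hypothetical; a real recognizer would more likely work with image features than with named parts.

```python
from collections import Counter
from math import sqrt

# Hypothetical database: each known object is described by counts of its parts.
DATABASE = {
    "chair": Counter({"seat": 1, "leg": 4, "back": 1}),
    "stool": Counter({"seat": 1, "leg": 3}),
    "table": Counter({"top": 1, "leg": 4}),
}


def merge_views(views):
    """Combine part counts from several viewpoints.

    Taking the per-part maximum (rather than the sum) avoids double-counting
    a part that is visible from more than one angle.
    """
    merged = Counter()
    for view in views:
        for part, count in view.items():
            merged[part] = max(merged[part], count)
    return merged


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def classify(views):
    """Return the database entry most similar to the merged description."""
    description = merge_views(views)
    return max(DATABASE, key=lambda name: cosine(description, DATABASE[name]))


front = Counter({"seat": 1, "leg": 2})  # back hidden, two legs visible
side = Counter({"back": 1, "leg": 4})   # back and all four legs visible

print(classify([front]))        # stool
print(classify([front, side]))  # chair
```

With only the front view, a seat and two legs look more like a stool; once the side view reveals the back and all four legs, the merged description matches the chair.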

Because the SLAM-aware system builds a three-dimensional map of what it sees, it is also better at separating one object from another. Each new viewpoint adds descriptive information about every object, which reduces ambiguity and makes the system more likely both to classify objects correctly and to tell them apart.
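One simple way a 3D map can help separate objects is to cluster map points by distance. The sketch below uses plain Euclidean clustering as an illustrative stand-in; the article does not say how the SLAM-aware system segments objects, and the point coordinates and 0.1 m radius are made up.

```python
from math import dist


def euclidean_clusters(points, radius):
    """Group points into clusters: two points end up in the same cluster if a
    chain of neighbours, each closer than `radius`, connects them."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        cluster, frontier = [seed], [seed]
        while frontier:
            i = frontier.pop()
            # Unvisited points within `radius` of point i join its cluster.
            near = [j for j in unvisited if dist(points[i], points[j]) < radius]
            unvisited.difference_update(near)
            cluster.extend(near)
            frontier.extend(near)
        clusters.append(sorted(cluster))
    return sorted(clusters)


# Hypothetical map points (x, y, z in metres): a mug about 1 m from the
# camera and a box about 2 m away, directly behind it.
points_3d = [
    (0.00, 0.00, 1.00), (0.05, 0.00, 1.00), (0.00, 0.05, 1.02),  # mug
    (0.02, 0.02, 2.00), (0.07, 0.02, 2.00), (0.02, 0.07, 2.05),  # box
]
# The same points with depth discarded: a crude stand-in for a single 2D image.
points_2d = [(x, y) for x, y, _ in points_3d]

print(euclidean_clusters(points_3d, radius=0.1))  # [[0, 1, 2], [3, 4, 5]]
print(euclidean_clusters(points_2d, radius=0.1))  # [[0, 1, 2, 3, 4, 5]]
```

With depth available, the mug and the box fall into separate clusters; with depth discarded, their points overlap and merge into one.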

The SLAM-aware system differs from classical image recognition systems, which the team calls “SLAM-oblivious” because they do not build a three-dimensional map and can only detect objects one still frame at a time. As a result, SLAM-oblivious systems have far more difficulty than the SLAM-aware system in recognizing multiple objects in a cluttered environment. In the gif below, incorrect predictions flash red.
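The contrast between classifying each frame on its own and pooling evidence across views can be illustrated with a small sketch. It assumes a per-frame classifier that outputs class probabilities, that the views of an object are independent, and a uniform prior, so fusion reduces to summing log-probabilities. The class names and probabilities are invented for illustration and are not the team’s method or data.

```python
from math import log

CLASSES = ["mug", "bowl", "cap"]

# Hypothetical per-frame classifier outputs (probabilities over CLASSES) for
# one object seen from five viewpoints in a cluttered scene.
frames = [
    [0.50, 0.40, 0.10],
    [0.30, 0.60, 0.10],  # partly occluded view: looks more like a bowl
    [0.60, 0.30, 0.10],
    [0.35, 0.25, 0.40],  # blurred view: looks more like a cap
    [0.55, 0.35, 0.10],
]


def per_frame_labels(frames):
    """SLAM-oblivious style: each frame is classified on its own."""
    return [CLASSES[max(range(len(p)), key=p.__getitem__)] for p in frames]


def fused_label(frames):
    """SLAM-aware style (sketch): sum log-probabilities for the same object
    across views, a naive-Bayes fusion assuming independent views."""
    totals = [sum(log(p[c]) for p in frames) for c in range(len(CLASSES))]
    return CLASSES[max(range(len(CLASSES)), key=totals.__getitem__)]


print(per_frame_labels(frames))  # ['mug', 'bowl', 'mug', 'cap', 'mug']
print(fused_label(frames))       # mug
```

Two of the five single-frame predictions are wrong, but the fused estimate settles on “mug”.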

In one experiment, the SLAM-aware system correctly identified the objects in a scene with 86.1 percent accuracy, comparable to advanced special-purpose systems that use infrared light to factor in depth information. Although those special-purpose systems can be more accurate, reaching as high as 92.8 percent, that accuracy comes at the cost of speed: some of them took about 4 seconds to run, whereas the SLAM-aware system took 1.6 seconds. Systems that rely on infrared light also have trouble working outdoors, where lighting conditions are difficult.
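Putting the reported figures side by side: the SLAM-aware system gives up about 6.7 percentage points of accuracy relative to the best special-purpose result, in exchange for running roughly 2.5 times faster. This comparison assumes the quoted 4-second run time belongs to the 92.8 percent system, which the article does not state explicitly.

```latex
\frac{t_{\text{special-purpose}}}{t_{\text{SLAM-aware}}} \approx \frac{4\ \text{s}}{1.6\ \text{s}} = 2.5,
\qquad
92.8\% - 86.1\% = 6.7\ \text{percentage points}
```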

“The fact that you cannot use it outdoors makes it kind of impractical from a robotics standpoint, because you want these systems to work indoors and outdoors,” says Pillai.

In the future, Pillai and his team want to build on their system to tackle a classic robotics challenge known as “loop closure”: recognizing when a robot has returned to a location it has visited before, which is important for navigating and interacting with the world. The SLAM-aware system may begin to address this problem by allowing robots to recognize specific objects and treat them as especially characteristic of a particular location.
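The article only says the system “may begin” to address loop closure, so the following is a toy sketch of one possible approach, not the team’s method. It assumes each stored place is summarized by the object labels recognized there, weights each label by how rare it is across places (an inverse-document-frequency idea borrowed from text retrieval), and reports a loop closure when a weighted Jaccard score passes a threshold. The places, labels, and 0.5 threshold are hypothetical.

```python
from collections import Counter
from math import log

# Hypothetical map: object labels recognised at each previously visited place.
PLACES = {
    "kitchen": ["mug", "kettle", "chair", "table"],
    "office": ["monitor", "keyboard", "chair", "mug"],
    "hallway": ["coat_rack", "shoe"],
}


def idf_weights(places):
    """Weight each label by its rarity across places: an object found in
    only one place is a stronger landmark than one found everywhere."""
    n = len(places)
    doc_freq = Counter(label for labels in places.values() for label in set(labels))
    return {label: log(n / df) for label, df in doc_freq.items()}


def detect_loop_closure(observed, places, threshold=0.5):
    """Return the stored place whose objects best match `observed` under a
    weighted Jaccard score, or None if no place reaches `threshold`."""
    weights = idf_weights(places)
    unseen_weight = log(len(places))  # treat never-seen labels as maximally rare

    def weight(label):
        return weights.get(label, unseen_weight)

    seen = set(observed)
    best_place, best_score = None, 0.0
    for name, labels in places.items():
        stored = set(labels)
        total = sum(map(weight, seen | stored))
        score = sum(map(weight, seen & stored)) / total if total else 0.0
        if score > best_score:
            best_place, best_score = name, score
    return best_place if best_score >= threshold else None


print(detect_loop_closure(["kettle", "mug", "table", "chair"], PLACES))  # kitchen
print(detect_loop_closure(["chair", "mug", "lamp"], PLACES))             # None
```

Seeing the kettle and table, which appear only in the kitchen, triggers a match; seeing only a chair and a mug, which appear in several places, does not.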

The researchers presented their study this month at the Robotics: Science and Systems (RSS) conference in Rome, Italy.

“We don’t want to compete against competing recognition systems, we want to be able to integrate them in a nice manner,” Pillai says.

No word on whether the SLAM-aware system makes it any easier to locate and protect John Connor.