Proteins are an essential part of keeping living organisms up and running. They help repair cells, clear out waste, and relay messages from one end of the body to the other.

Scientists have put a great deal of work into deciphering the structures and functions of proteins. To this end, Meta’s AI research team announced today that it has used a model to predict the 3D structures of proteins from their amino acid sequences. Unlike previous work in the space, such as DeepMind’s, Meta’s AI is based on a language model rather than on an approach that searches for and aligns related protein sequences. Meta is not only releasing a preprint paper on this research but will also open up both the model and its database of predicted protein structures to the research community and industry.

First, to contextualize the importance of understanding protein shapes, here’s a brief biology lesson. Genes are copied into messenger RNA, and a molecular machine in the cell called the ribosome reads that RNA three nucleotides at a time, translating each triplet (a codon) into an amino acid. Proteins are chains of amino acids that fold into unique three-dimensional shapes. An emerging field of science called metagenomics sequences genetic material collected directly from environmental samples to discover, catalog, and annotate new proteins in the natural world.
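
The triplet-to-amino-acid step can be sketched in a few lines of Python. This is a minimal illustration, assuming the standard genetic code, a DNA coding-strand input (T rather than the U found in messenger RNA), and a reading frame that starts at the first base; `translate` and `CODON_TABLE` are hypothetical names, not part of Meta’s work.

```python
from itertools import product

# Standard genetic code in the common "TCAG" ordering: product() enumerates
# codons with the first base varying slowest, and AMINO gives each codon's
# one-letter amino acid in that same order ("*" marks a stop codon).
BASES = "TCAG"
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(codon): aa for codon, aa in zip(product(BASES, repeat=3), AMINO)}


def translate(dna):
    """Translate coding-strand DNA into a one-letter protein string (hypothetical helper).

    Reads one codon (three bases) at a time from the first base and stops at
    the first stop codon, loosely mirroring what the ribosome does with mRNA.
    """
    protein = []
    for i in range(0, len(dna) - 2, 3):
        amino_acid = CODON_TABLE[dna[i:i + 3].upper()]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


print(translate("ATGTTTGGCTAA"))  # MFG (ATG=Met, TTT=Phe, GGC=Gly, TAA=stop)
```

Real cells add many complications (start-codon selection, splicing, alternative genetic codes), so this only captures the core lookup.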

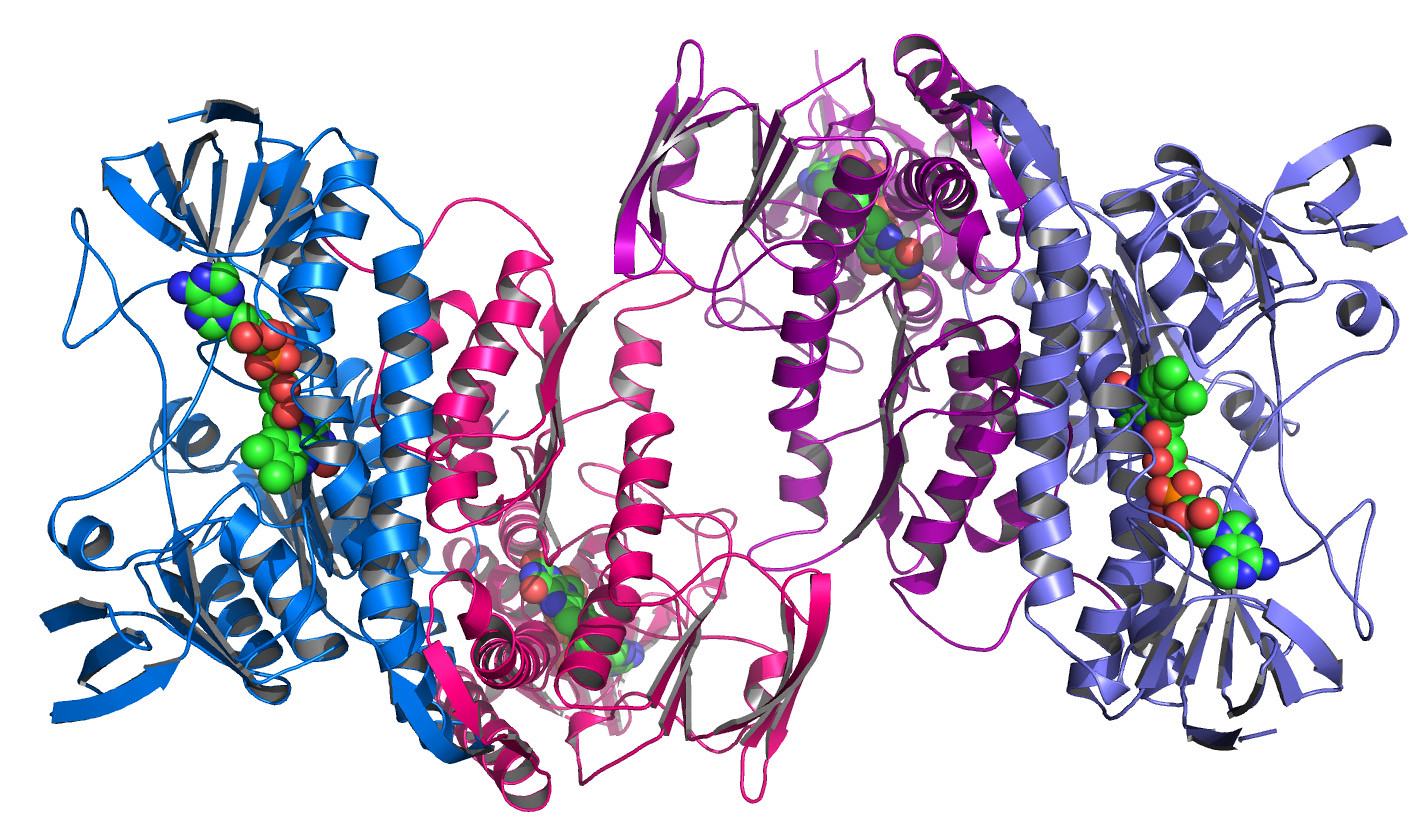

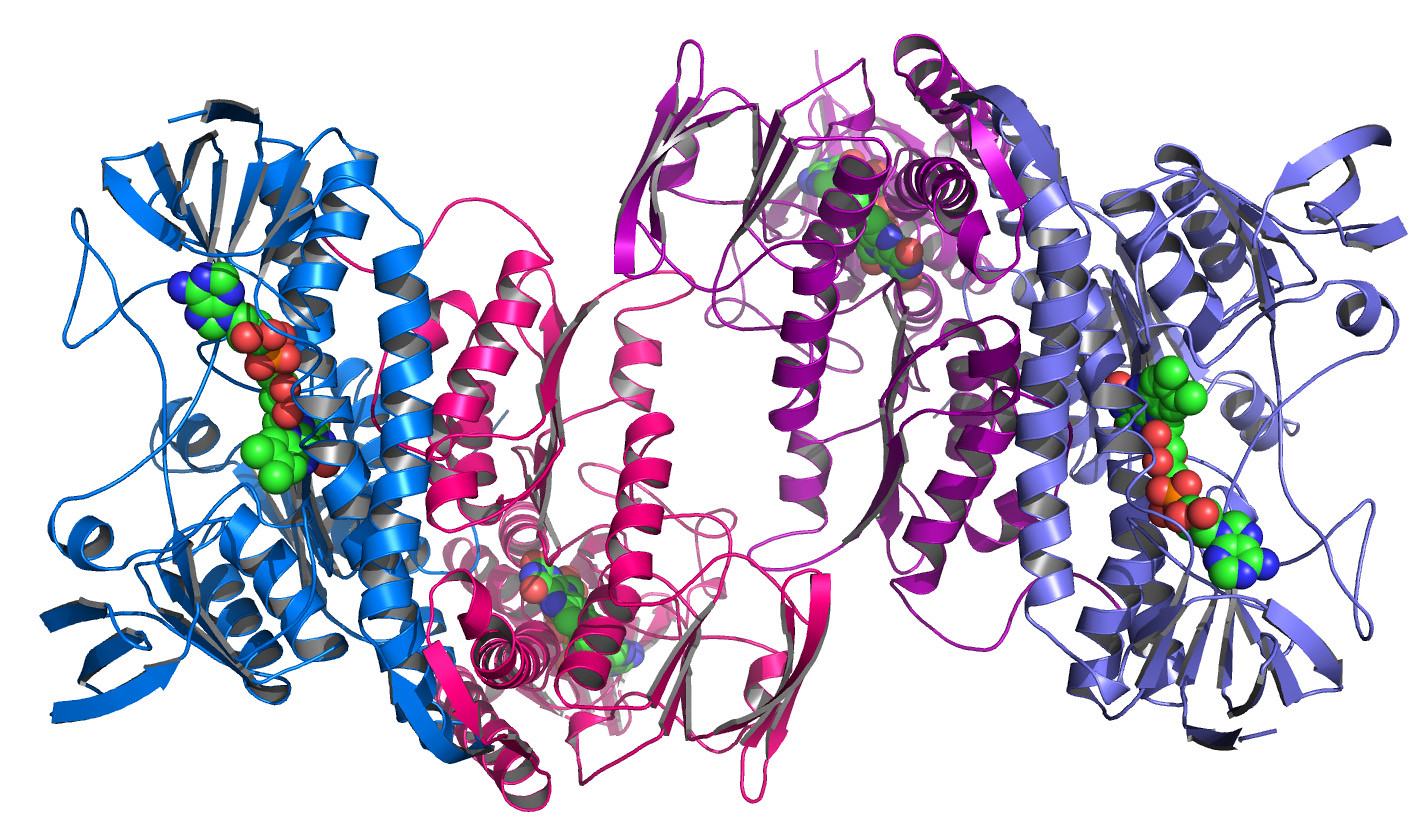

Meta’s AI model is a new approach to protein folding, inspired by large language models, that aims to predict the structures of the hundreds of millions of protein sequences in metagenomics databases. Understanding the shapes these proteins form will give researchers clues about how they function and which molecules they interact with.

“We’ve created the first large-scale characterization of metagenomics proteins. We’re releasing the database as an open science resource that has more than 600 million predictions of protein structures,” says Alex Rives, a research scientist at Meta AI. “This covers some of the least understood proteins out there.”

Historically, computational biologists have used evolutionary patterns to predict the structures of proteins. Before they fold, proteins are linear strands of amino acids. When a protein folds into its complex structure, amino acids that sit far apart along the linear strand can end up right next to one another.
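
To make the idea of distant residues ending up close together concrete, here is a minimal sketch that lists residue pairs that are at least a few positions apart in sequence but within a distance cutoff in 3D. The 8 Å cutoff and the 6-residue minimum separation are common conventions assumed here, the hairpin coordinates are made up, and `contact_pairs` is a hypothetical helper.

```python
import math


def contact_pairs(coords, max_dist=8.0, min_separation=6):
    """Return residue pairs (i, j) that are close in 3D but far apart in sequence.

    coords holds one (x, y, z) point per residue, in angstroms. The cutoffs are
    assumptions, not values taken from Meta's or DeepMind's work.
    """
    pairs = []
    for i in range(len(coords)):
        for j in range(i + min_separation, len(coords)):
            if math.dist(coords[i], coords[j]) < max_dist:
                pairs.append((i, j))
    return pairs


# A made-up 10-residue "hairpin": residues 0-4 run along the x axis,
# then residues 5-9 run back the other way, 5 angstroms away.
hairpin = [(4 * i, 0, 0) for i in range(5)] + [(4 * (4 - k), 5, 0) for k in range(5)]
print(contact_pairs(hairpin))  # [(0, 8), (0, 9), (1, 7), (1, 8), (1, 9), (2, 8)]
```

Residues 0 and 9 sit at opposite ends of the chain, yet the fold brings them within 5 Å of each other.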

“You can think about this as two pieces in a puzzle where they have to fit together. Evolution can’t choose these two positions independently because if the wrong piece is here, the structure would fall apart,” Rives says. “What that means then is if you look at the patterns of protein sequences, they contain information about the folded structure because different positions in the sequence will co-vary with each other. That will reflect something about the underlying biological properties of the protein.”
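
Rives’s point about co-varying positions can be illustrated with mutual information between two columns of a sequence alignment, a classic (if noisy) coevolution signal. This is a sketch on a made-up four-sequence alignment, not Meta’s or DeepMind’s method; practical tools correct raw mutual information for shared ancestry and indirect correlations. `column_mutual_information` is a hypothetical helper.

```python
import math
from collections import Counter


def column_mutual_information(alignment, i, j):
    """Mutual information (in bits) between columns i and j of an alignment.

    High values mean the residues at the two positions tend to change together.
    """
    n = len(alignment)
    pair_counts = Counter((seq[i], seq[j]) for seq in alignment)
    col_i = Counter(seq[i] for seq in alignment)
    col_j = Counter(seq[j] for seq in alignment)
    mi = 0.0
    for (a, b), count in pair_counts.items():
        p_ab = count / n
        mi += p_ab * math.log2(p_ab / ((col_i[a] / n) * (col_j[b] / n)))
    return mi


# Made-up alignment: positions 1 and 4 swap K/E together (like two puzzle
# pieces that must still fit), while position 2 varies independently.
alignment = ["AKGLEV", "AEGLKV", "AKSLEV", "AESLKV"]
print(column_mutual_information(alignment, 1, 4))  # 1.0
print(column_mutual_information(alignment, 1, 2))  # 0.0
```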

Meanwhile, DeepMind’s innovative approach, which first debuted in 2018, relies chiefly on a method called multiple sequence alignment. It searches massive databases of protein sequences for proteins that are evolutionarily related to the one it’s making a prediction for, then lines those sequences up so their patterns can be compared.
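
The search for related sequences can be sketched with a pairwise global alignment score (the Needleman-Wunsch algorithm), used here to rank a tiny, made-up database. This is an assumption-laden toy: the scoring values are arbitrary, `global_alignment_score` and `find_related` are hypothetical helpers, and real pipelines use far more sensitive profile-based search tools over huge databases before building the multiple sequence alignment itself.

```python
def global_alignment_score(a, b, match=1, mismatch=-1, gap=-2):
    """Needleman-Wunsch global alignment score with a linear gap penalty.

    Keeps only one row of the dynamic-programming table at a time.
    """
    prev = [j * gap for j in range(len(b) + 1)]
    for i in range(1, len(a) + 1):
        curr = [i * gap] + [0] * len(b)
        for j in range(1, len(b) + 1):
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            curr[j] = max(diag, prev[j] + gap, curr[j - 1] + gap)
        prev = curr
    return prev[-1]


def find_related(query, database, min_score):
    """Return names of entries scoring at least min_score against query, best first."""
    scores = {name: global_alignment_score(query, seq) for name, seq in database.items()}
    hits = [name for name, score in scores.items() if score >= min_score]
    return sorted(hits, key=scores.get, reverse=True)


database = {
    "homolog": "MKTAYIAKQK",    # one substitution
    "variant": "MKTAYIAQR",     # one deletion
    "unrelated": "GGGGWWWPPP",  # no shared residues
}
print(find_related("MKTAYIAKQR", database, min_score=0))  # ['homolog', 'variant']
```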

“What’s different about our approach is that we’re making the prediction directly from the amino acid sequence, rather than making it from this set of multiple related proteins and looking at the patterns,” Rives says. “The language model has learned these patterns in a different way. What this means is that we can greatly simplify the structure prediction architecture because we don’t need to process this set of sequences and we don’t need to search for related sequences.”

These simplifications, Rives claims, make their model faster than other tools in the field.

How did they train the model to do this? It took two steps. First, they pre-trained the language model on a large number of proteins that have different structures, come from different protein families, and span the evolutionary timeline. They used a masked language modeling objective: they blanked out portions of each amino acid sequence and asked the model to fill in the blanks. “The language training is unsupervised learning, it’s only trained on sequences,” Rives explains. “Doing this causes this model to learn patterns across these millions of protein sequences.”
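
The fill-in-the-blanks setup can be sketched as follows. This minimal version assumes a 15% masking rate (the BERT default) and simply replaces the chosen residues with a mask token; it omits the model itself and any refinements the real training recipe may use. `mask_sequence` and the `<mask>` token string are hypothetical.

```python
import random

MASK = "<mask>"  # hypothetical mask token


def mask_sequence(seq, mask_rate=0.15, rng=None):
    """Hide a random subset of residues for masked language modeling.

    Returns the corrupted token list (what the model sees) and a dict mapping
    each masked position to the true residue the model must predict.
    """
    rng = rng or random.Random()
    n_mask = max(1, round(len(seq) * mask_rate))
    positions = rng.sample(range(len(seq)), n_mask)
    tokens = list(seq)
    targets = {}
    for pos in positions:
        targets[pos] = tokens[pos]
        tokens[pos] = MASK
    return tokens, targets


seq = "MKTAYIAKQRQISFVKSHFSRQ"
tokens, targets = mask_sequence(seq, rng=random.Random(0))
print(" ".join(tokens))
print(targets)  # the training signal: predict these residues from context
```

No structures or labels are needed: the sequence supplies its own answers, which is why this stage counts as unsupervised.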

Then they froze the language model and trained a folding module on top of it. This second stage of training uses supervised learning. The supervised dataset is made up of structures that researchers around the world have submitted to the Protein Data Bank, augmented with structure predictions made using AlphaFold (DeepMind’s technology). “This folding module takes the language model input and basically outputs the 3D atomic coordinates of the protein [from the amino acid sequences],” Rives says. “That produces these representations and those are projected out into the structure using the folding head.”
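
The freeze-then-train setup can be illustrated with a toy in plain Python. Everything here is hypothetical: `frozen_language_model` returns fixed pseudo-random vectors in place of real language model representations, a single linear layer stands in for the much more elaborate folding module, and mean squared error on coordinates replaces the structure-aware losses real predictors use. The point is only that the language model’s outputs never change while the head’s weights are updated.

```python
import math
import random

EMBED_DIM = 8  # toy representation size (assumption)


def frozen_language_model(seq):
    """Hypothetical stand-in for a pretrained, frozen protein language model.

    Returns one fixed EMBED_DIM-vector per residue; nothing here is trained.
    """
    reps = []
    for pos, aa in enumerate(seq):
        rng = random.Random(f"{aa}:{pos}")  # deterministic per (residue, position)
        reps.append([rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)])
    return reps


def predict(head, reps):
    """Trainable linear head: map each residue representation to (x, y, z)."""
    W, b = head
    return [[sum(W[k][d] * h[d] for d in range(EMBED_DIM)) + b[k] for k in range(3)]
            for h in reps]


def train_head(seq, target_coords, steps=200, lr=0.05):
    """Fit only the head by gradient descent on mean squared coordinate error."""
    reps = frozen_language_model(seq)  # computed once; never updated
    W = [[0.0] * EMBED_DIM for _ in range(3)]
    b = [0.0, 0.0, 0.0]
    n = 3 * len(seq)
    losses = []
    for _ in range(steps):
        preds = predict((W, b), reps)
        errors = [[p - t for p, t in zip(pr, tg)] for pr, tg in zip(preds, target_coords)]
        losses.append(sum(e * e for row in errors for e in row) / n)
        for k in range(3):
            grad_b = sum(2 * row[k] for row in errors) / n
            for d in range(EMBED_DIM):
                grad_w = sum(2 * row[k] * h[d] for row, h in zip(errors, reps)) / n
                W[k][d] -= lr * grad_w
            b[k] -= lr * grad_b
    return (W, b), losses


seq = "MKTAYIAKQR"
target = [(math.cos(i), math.sin(i), 0.5 * i) for i in range(len(seq))]  # made-up helix
head, losses = train_head(seq, target)
print(f"MSE {losses[0]:.3f} -> {losses[-1]:.3f}")
```

In this toy, because only the head receives updates, the frozen model’s outputs are computed once per sequence and simply reused at every training step.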

Rives imagines that this model could be used in research applications such as understanding, at the biochemical level, how a protein’s active site functions, information that could be highly relevant to drug discovery and development. He also thinks the AI could eventually be used to design new proteins.