Night vision is typically monochrome: everything the wearer sees is rendered in a single hue, usually shades of green. But by using several wavelengths of infrared light and a relatively simple AI algorithm, scientists at the University of California, Irvine have restored some color to these desaturated images. Their findings are published in the journal PLOS ONE this week.

Like FM radio waves, light in the visible spectrum spans many different frequencies; both light and radio are part of the electromagnetic spectrum. By convention, though, light is usually described by its wavelength in nanometers, while radio is described by its frequency in megahertz. The two descriptions are interchangeable, because a wave's frequency equals the speed of light divided by its wavelength. Light that the average human eye can perceive ranges from about 400 to 700 nanometers in wavelength.
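Converting between the nanometer and megahertz descriptions takes one formula: frequency = speed of light / wavelength. The minimal Python sketch below applies it to the edges of the visible range and to an FM station; the helper names and the 100 MHz example station are illustrative choices, not anything from the study.

```python
# Speed of light in a vacuum, in meters per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def wavelength_nm_to_frequency_thz(wavelength_nm: float) -> float:
    """Convert a vacuum wavelength in nanometers to a frequency in terahertz (f = c / wavelength)."""
    wavelength_m = wavelength_nm * 1e-9
    return SPEED_OF_LIGHT_M_PER_S / wavelength_m / 1e12


def frequency_mhz_to_wavelength_m(frequency_mhz: float) -> float:
    """Convert a frequency in megahertz to a vacuum wavelength in meters (wavelength = c / f)."""
    return SPEED_OF_LIGHT_M_PER_S / (frequency_mhz * 1e6)


if __name__ == "__main__":
    # Edges of the visible range: prints 749.5 THz for 400 nm and 428.3 THz for 700 nm.
    for nm in (400, 700):
        print(f"{nm} nm -> {wavelength_nm_to_frequency_thz(nm):.1f} THz")
    # Illustrative FM station at 100 MHz: prints 3.00 m.
    print(f"100 MHz -> {frequency_mhz_to_wavelength_m(100):.2f} m")
```

The roughly eight-orders-of-magnitude gap between a few hundred nanometers and a few meters is why each field settled on the unit that gives convenient numbers.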

The typical security camera equipped with night vision uses a single wavelength of infrared light, longer than 700 nanometers, to illuminate a scene. Infrared light is the part of the electromagnetic spectrum just beyond red, and it is invisible to the naked eye. Scientists have used these waves to study thermal energy, and some remote controls use infrared signals to communicate with a television.

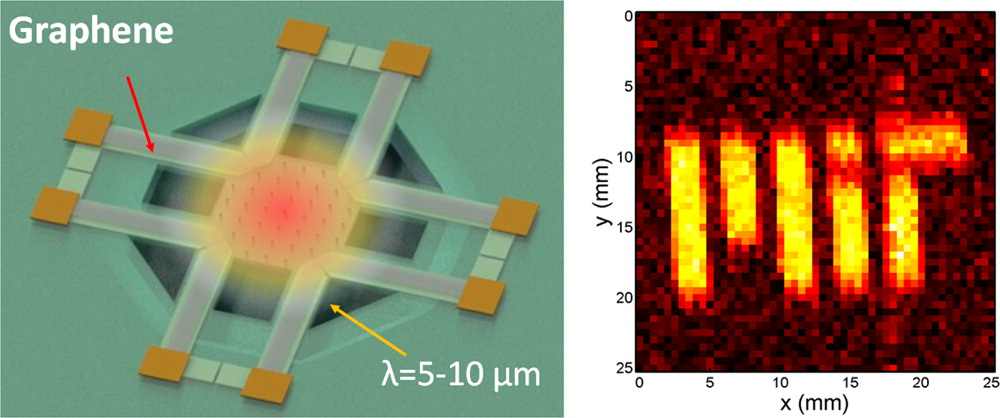

Previously, to teach night vision cameras to see in color, researchers would photograph the same scene with an infrared camera and a normal camera, then train a model to predict the color image from the infrared one. In this experiment, however, the UC Irvine team wanted to see whether night vision cameras using multiple wavelengths of infrared light could help an algorithm make better color predictions.

To test this, they used a monochrome camera that responds to light in both the visible and infrared spectrum. Most color cameras capture three colors of light: red, green, and blue. The team photographed a set of sample images while illuminating them with red (604 nm), green (529 nm), and blue (447 nm) light, then photographed the same images in the dark under three infrared wavelengths: 718, 777, and 807 nm.
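One way to picture the data this setup produces: each exposure of the monochrome camera is a single grayscale frame, so frames taken under different illumination wavelengths can be stacked into one multichannel image, with the three infrared frames as the model's input and the three visible frames as the color ground truth. The sketch below assumes NumPy and a hypothetical dictionary of frames keyed by wavelength; `stack_channels` is an illustrative helper, not the team's actual pipeline.

```python
import numpy as np

# Illumination wavelengths reported for the experiment, in nanometers.
VISIBLE_NM = (604, 529, 447)  # red, green, blue
INFRARED_NM = (718, 777, 807)


def stack_channels(frames: dict[int, np.ndarray], wavelengths: tuple[int, ...]) -> np.ndarray:
    """Stack single-channel (H x W) frames into an H x W x C array in the given order.

    `frames` is a hypothetical mapping from illumination wavelength (nm) to the
    monochrome image captured while the scene was lit at that wavelength.
    """
    missing = [nm for nm in wavelengths if nm not in frames]
    if missing:
        raise KeyError(f"no frame captured at {missing} nm")
    shapes = {frames[nm].shape for nm in wavelengths}
    if len(shapes) != 1:
        raise ValueError(f"frames must share one shape, got {shapes}")
    # The last axis becomes the "color" axis, one entry per wavelength.
    return np.stack([frames[nm] for nm in wavelengths], axis=-1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Stand-in frames: random 4 x 5 images, one per illumination wavelength.
    frames = {nm: rng.random((4, 5)) for nm in VISIBLE_NM + INFRARED_NM}
    ir_input = stack_channels(frames, INFRARED_NM)   # model input
    rgb_target = stack_channels(frames, VISIBLE_NM)  # ground-truth color image
    print(ir_input.shape, rgb_target.shape)  # (4, 5, 3) (4, 5, 3)
```

Keeping the wavelength order explicit matters: the red, green, and blue frames must land in a consistent channel order for the stacked array to be a valid color image.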

“The monochromatic camera is sensitive to whatever photons are reflected from the scene that it’s looking at,” explains Andrew Browne, a professor of ophthalmology at UC Irvine and an author on the PLOS ONE paper. “So, we used a tunable light source to shine a light onto the scene and a monochromatic camera to capture the photons that were reflected off that scene under all the different illumination colors.”


The scientists then paired the three infrared pictures with the color pictures to train a neural network to predict what colors the scene should contain. After training and tuning, the network could reconstruct color images from the three infrared pictures that looked close to the real thing.
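The article does not describe the network's architecture, so the following only illustrates the general idea of learning a mapping from infrared channels to color from paired examples. It swaps in a deliberately tiny per-pixel neural network (one hidden layer, trained with plain gradient descent in NumPy); `train_pixel_mlp` and all of its parameters are hypothetical, and the target colors in the usage example are synthetic rather than real photographs.

```python
import numpy as np


def train_pixel_mlp(ir, rgb, hidden=16, lr=0.1, epochs=2000, seed=0):
    """Fit a one-hidden-layer MLP mapping each pixel's 3 IR values to its 3 RGB values.

    Hypothetical helper, not the study's model. `ir` and `rgb` are (H, W, 3)
    arrays with values in [0, 1]. Returns a predict(ir_image) function.
    """
    x = ir.reshape(-1, ir.shape[-1])    # (N, 3) infrared intensities per pixel
    y = rgb.reshape(-1, rgb.shape[-1])  # (N, 3) target colors per pixel
    n = x.shape[0]
    rng = np.random.default_rng(seed)
    w1 = rng.normal(0.0, 0.5, (x.shape[1], hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 0.5, (hidden, y.shape[1]))
    b2 = np.zeros(y.shape[1])

    for _ in range(epochs):
        # Forward pass: tanh hidden layer, sigmoid output keeps colors in [0, 1].
        h = np.tanh(x @ w1 + b1)
        pred = 1.0 / (1.0 + np.exp(-(h @ w2 + b2)))
        # Backward pass for squared error summed over channels, averaged over pixels.
        d_z2 = (2.0 * (pred - y) / n) * pred * (1.0 - pred)
        d_w2 = h.T @ d_z2
        d_b2 = d_z2.sum(axis=0)
        d_z1 = (d_z2 @ w2.T) * (1.0 - h ** 2)
        d_w1 = x.T @ d_z1
        d_b1 = d_z1.sum(axis=0)
        # Plain gradient-descent update.
        w1 -= lr * d_w1
        b1 -= lr * d_b1
        w2 -= lr * d_w2
        b2 -= lr * d_b2

    def predict(ir_image):
        flat = ir_image.reshape(-1, ir_image.shape[-1])
        out = 1.0 / (1.0 + np.exp(-(np.tanh(flat @ w1 + b1) @ w2 + b2)))
        return out.reshape(ir_image.shape[:-1] + (w2.shape[1],))

    return predict


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    ir = rng.random((8, 8, 3))
    # Synthetic stand-in target: a fixed mixture of the IR channels (not real data).
    mix = np.array([[0.6, 0.2, 0.1], [0.3, 0.5, 0.2], [0.1, 0.3, 0.7]])
    rgb = np.clip(ir @ mix, 0.0, 1.0)
    predict = train_pixel_mlp(ir, rgb)
    print("training MSE:", float(np.mean((predict(ir) - rgb) ** 2)))
```

A per-pixel model keeps the example short, but it ignores neighboring pixels; a practical system would plausibly use a convolutional network, since shapes and textures can help disambiguate colors that look alike in infrared.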

“When we increase the number of infrared channels, or infrared colors, it provides more data and we can make better predictions that actually look pretty close to what the real image should be,” says Browne. “This paper demonstrates the feasibility of this approach to acquire an image in three different infrared colors—three colors that we cannot see with the human eye.”
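Browne's point about extra channels has a simple counterpart for a linear model: fitting colors from three infrared channels can never do worse on the training data than fitting from one, because the one-channel model is a special case of the three-channel model with the extra weights set to zero. The NumPy least-squares sketch below, run on synthetic data, illustrates only that property. It is not the study's neural network, its numbers say nothing about the paper's results, and a better fit on training data does not by itself guarantee better predictions on new images.

```python
import numpy as np


def _design(ir_pixels: np.ndarray) -> np.ndarray:
    """Append an intercept column of ones to an (N, k) array of IR readings."""
    return np.column_stack([ir_pixels, np.ones(len(ir_pixels))])


def fit_linear_color_map(ir_pixels: np.ndarray, rgb_pixels: np.ndarray) -> np.ndarray:
    """Hypothetical helper: least-squares weights W for RGB ~= [IR, 1] @ W."""
    weights, *_ = np.linalg.lstsq(_design(ir_pixels), rgb_pixels, rcond=None)
    return weights


def training_mse(ir_pixels: np.ndarray, rgb_pixels: np.ndarray) -> float:
    """Mean squared error of the fitted linear map on the data it was fit to."""
    weights = fit_linear_color_map(ir_pixels, rgb_pixels)
    return float(np.mean((_design(ir_pixels) @ weights - rgb_pixels) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ir = rng.random((1000, 3))  # synthetic per-pixel readings at three IR wavelengths
    rgb = ir @ rng.random((3, 3)) + rng.normal(0.0, 0.05, (1000, 3))  # synthetic colors
    for k in (1, 2, 3):
        # Use only the first k infrared channels as inputs.
        print(f"{k} IR channel(s): training MSE = {training_mse(ir[:, :k], rgb):.4f}")
```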

For this experiment, the team tested the algorithm and technique only on printed color photos. However, Browne says they are looking to apply it to videos and, eventually, to real-world objects and human subjects.

“There are certain situations where you can’t use visible light, either because you don’t want to have something that can be seen, or visible light can be damaging,” says Browne. This could apply, for example, to people who work with light-sensitive chemicals, researchers who study the eye, or military personnel. “The ability to see in color vision, or something that looks like our normal vision, could be of value in low light conditions.”