
Right now, input for phone-based virtual reality is awkward. The best way to control the experience (which isn’t saying much) is to point your face toward the item you want to select.

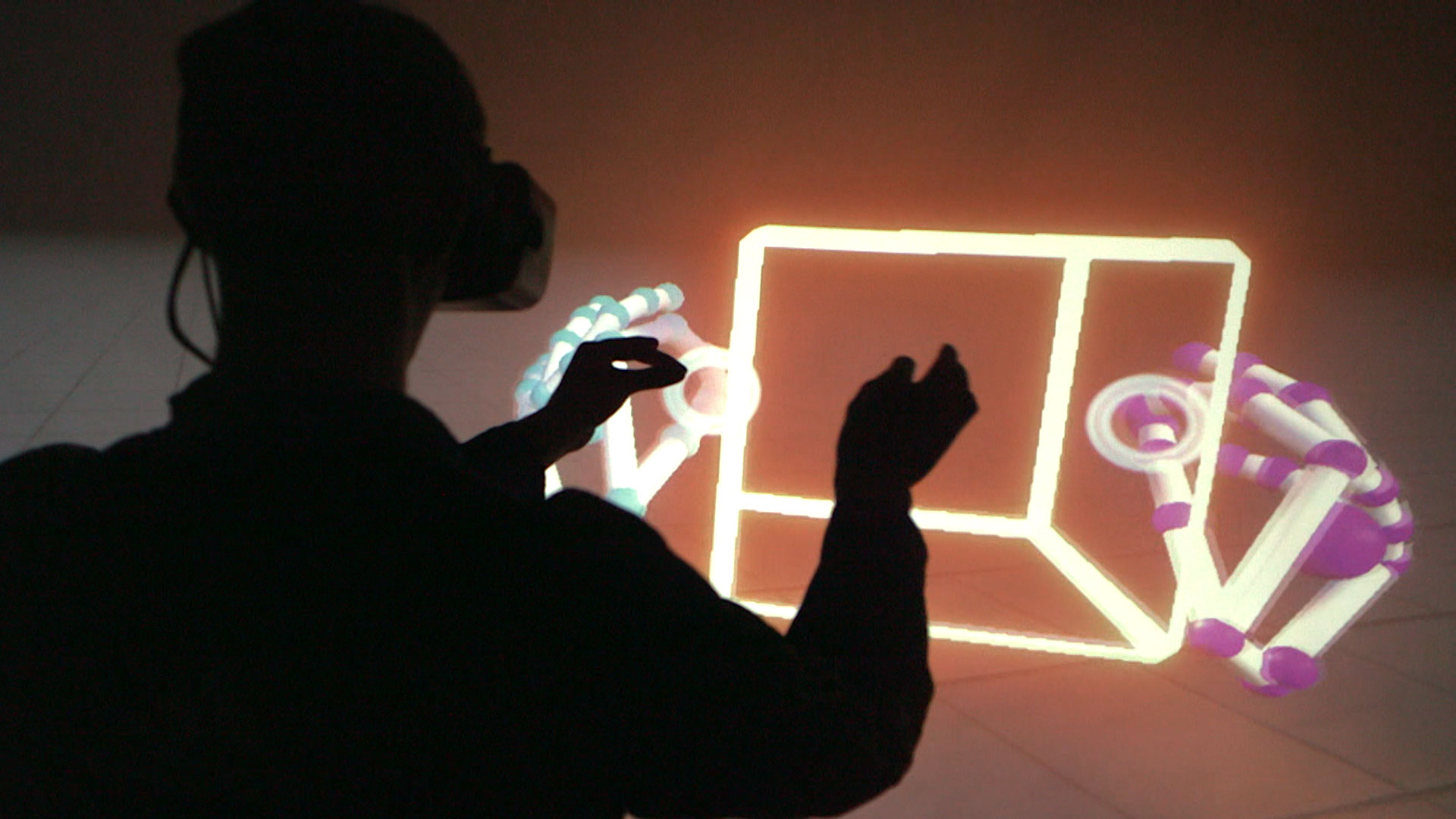

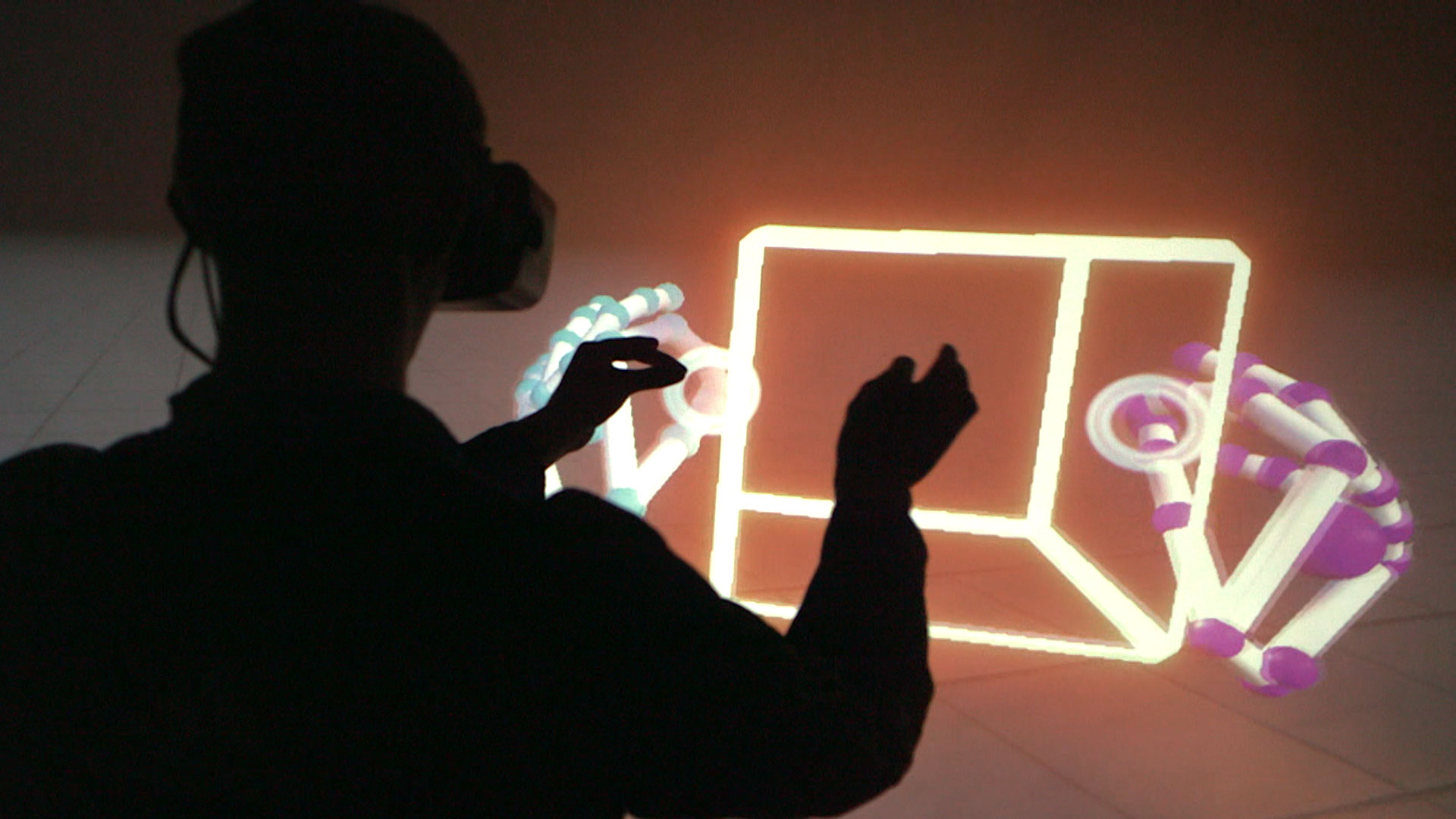

Leap Motion, a company that makes hand-tracking devices and software, wants users to select items with finger gestures in the air instead, so they can interact more seamlessly with objects in a virtual space.

“Everybody knows how to use their hands,” says Leap Motion CEO Michael Buckwald. “Right now all input is binary. Fingers can be the solution to that problem.”

Leap Motion has been tracking hands and fingers with a desk-based module since 2012, but its technology didn’t really make perfect sense until the dawn of virtual reality. In 2014, the company released a mount so its original module could be stuck to the front of a VR headset, which has enabled tons of hacked-together VR and AR projects. Most upcoming desktop virtual reality headsets, like the Oculus Rift or the HTC Vive, will have their own controllers or use gaming controllers instead of tracking hands. Leap Motion sees this as an opportunity for mobile VR.

The company’s new Orion module actually isn’t that different from the original Leap Motion controller. Both have two optical CMOS sensors, as well as infrared LEDs (the Orion has two LEDs and the original has three). The biggest change is the distance between the two CMOS sensors, which has been widened to 64 millimeters. Buckwald says that 64mm is the average distance between human eyes, which means the sensors can track hands in a way that is a lot closer to how our eyes would. The Orion mobile software can also be used with the original module, but it isn’t as effective.

Consumers won’t be able to buy this module, which is specifically meant to be built into virtual reality headsets.

The Orion software is an overhaul of Leap Motion’s gesture control system. The team had to solve a bunch of problems that kept recurring with the original Leap Motion engine, like difficulty detecting hands at the ends of long arms. They also struggled with the gesture of grabbing an item and then releasing it. The problem with this motion, which seems simple, is that your hand obscures the view of your fingers. Try picking something up while maintaining eye contact with every finger. It’s tough.

So the Leap Motion team had to model thousands of different ways to grab objects so the software could handle different kinds of grabs correctly.

In the company’s view, this is the only way for VR to actually feel real. “Hands are the only input worthy of virtual reality,” Buckwald says.