If there’s one thing that was made clear during Facebook’s developer conference, F8, it’s that Live Video is incredibly important to the platform’s future. Not only does every Facebook user now have the ability to broadcast from their smartphone to the entire world, but soon other cameras will have the ability to produce high-quality broadcasts as well. On top of that, Facebook is committed to monetizing all this Live Video, as an incentive to stream on the world’s largest social network.

But Live Video magnifies a problem that Facebook already has with video: even if a user wants to watch something, they usually can’t find it. Most videos we watch are shared by friends or Pages that we follow.

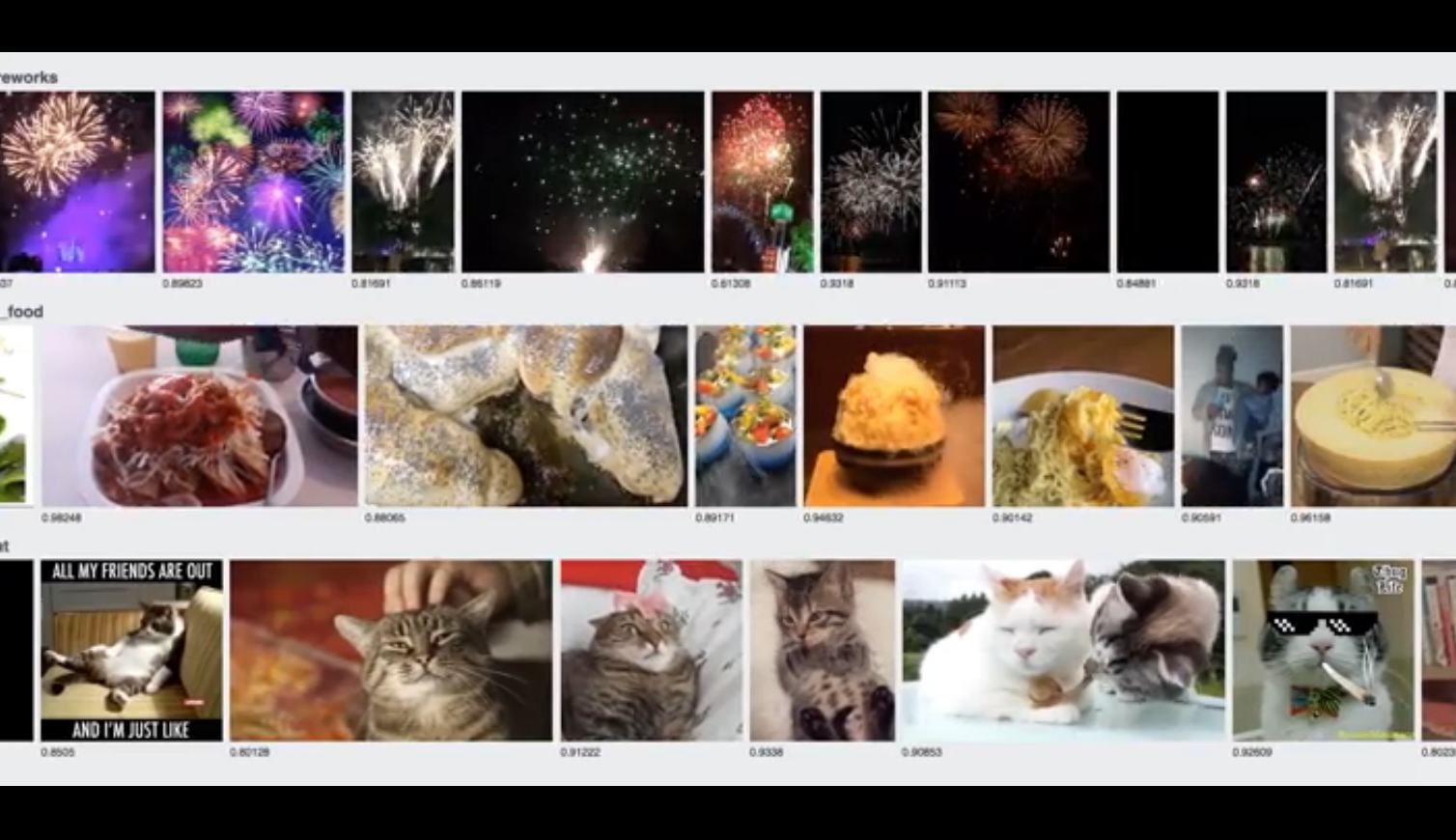

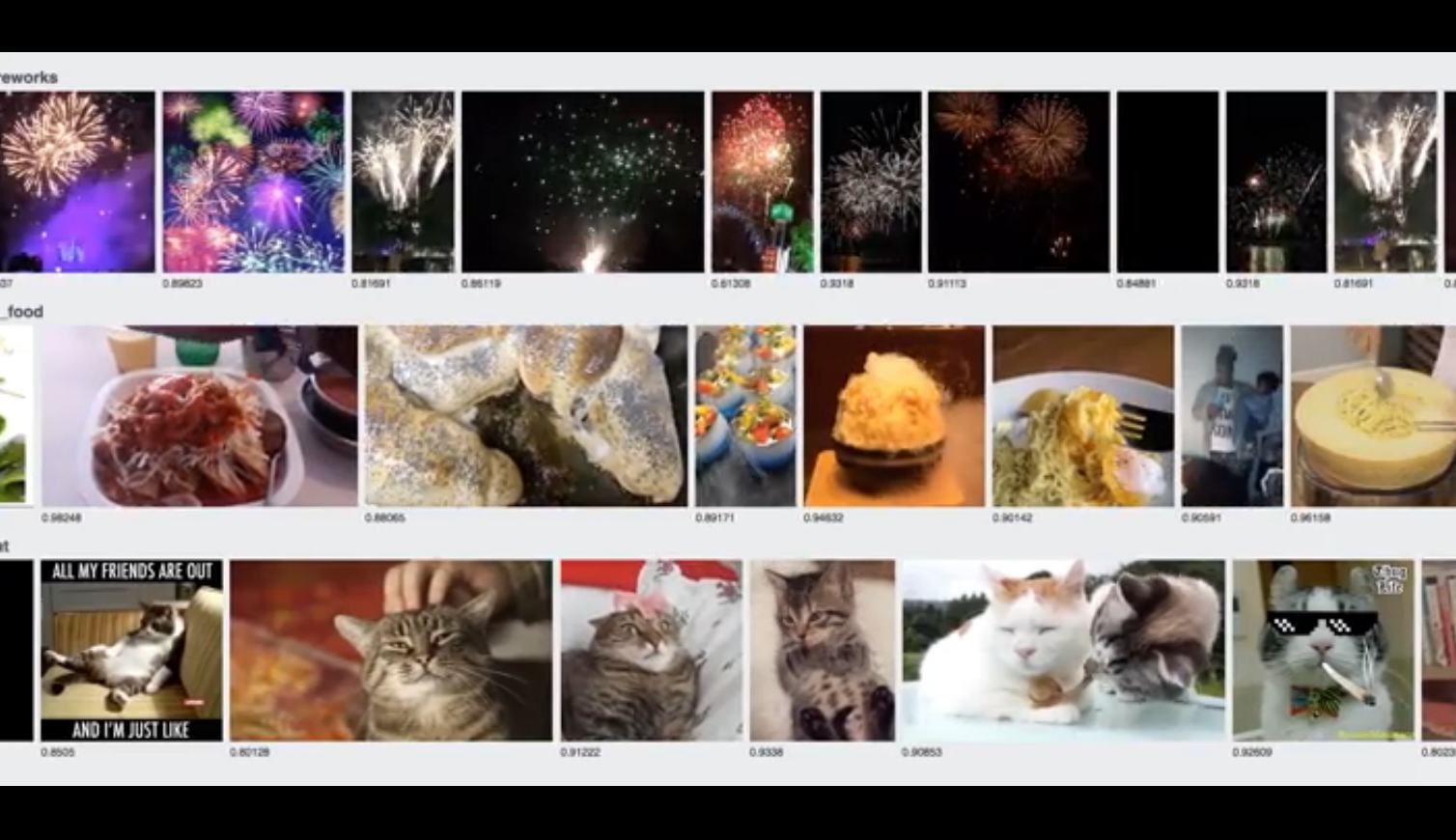

This is where artificial intelligence comes in. In the future, A.I. would watch all the Live Video being produced in the world, understand what’s happening, and make it searchable, said Joaquin Quiñonero Candela, Facebook’s head of Applied Machine Learning, in a conversation with Popular Science today. Beyond that, Facebook might be able to cut these streams together, making a personalized channel of the content that you want to see.

“As this thing takes off, we’re going to have to make amazing tools for people to curate,” Candela said. “You can imagine someone who is a retired snowboarder, if I had the tools to scan through millions of livestreams can I combine professionally-shot streams cut together with stuff like people on the mountain. Imagine if I could cut and mix that, I could make my own kind of TV station. We need to build these tools if we want this to happen.”

But getting there isn’t easy. Facebook is still working on how to identify objects in images, and it is only just starting on video.

The main issue is something called semantic segmentation. There are three tiers to an artificial intelligence understanding what’s in an image or video. First, there’s classification: a cup is in the photo. Then, there’s segmentation (splitting the image into pieces based on the content): a cup and a table are in the photo. The final tier, semantic segmentation, is the hardest, and it is where Candela says the most work is needed: the cup is on the table.

For the A.I. to know that cups go on tables, it needs to understand the relationship between the two objects, something humans pick up naturally from observing the world. This is difficult for most systems because they need lots and lots of examples to recognize both that the two objects are separate and that they share this relationship. Cups go on tables; tables rarely go on cups.
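To make the "lots of examples" point concrete, here is a toy sketch in Python. It is not how Facebook's systems work. It assumes someone has already labeled images with hypothetical (subject, relation, object) triples, and it simply counts how often each ordering of a pair shows up. The `observations` list and the `relation_probability` function are invented for illustration.

```python
from collections import Counter

# Hypothetical labeled examples: (subject, relation, object) triples.
# Real systems would need vastly more data than this.
observations = [
    ("cup", "on", "table"),
    ("cup", "on", "table"),
    ("plate", "on", "table"),
    ("cup", "on", "table"),
    ("table", "on", "cup"),  # the rare, odd case
]


def relation_probability(observations, subject, relation, obj):
    """Share of observed pairings of the two objects that run in the
    direction `subject relation obj` rather than the reverse."""
    counts = Counter(observations)
    forward = counts[(subject, relation, obj)]
    backward = counts[(obj, relation, subject)]
    total = forward + backward
    return forward / total if total else 0.0


print(relation_probability(observations, "cup", "on", "table"))
print(relation_probability(observations, "table", "on", "cup"))
```

With only five examples the estimate is crude, which is the point: the system cannot say anything about a pair it has never seen, and it needs many sightings before "cups go on tables" becomes a reliable rule.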

And since video is just a barrage of images played very quickly, for an A.I. to identify things and understand them in any video, let alone Live Video, is a huge feat. Candela’s dream is far from reality, judging by the systems currently being deployed, but his team is making headway. During his presentation at F8’s keynote today, Candela announced that Facebook is working on processing video for facial recognition. With this technology, the A.I. is able to tell when someone is in a video, what time they enter, and how long they stay. The technology builds on the A.I.-powered automatic tagging feature that Facebook has used with great accuracy for years.
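As a rough illustration of the "when they enter, and for how long" idea, the sketch below assumes a face recognizer has already produced, for every frame, the set of people it found. That recognizer is the hard part and is not shown. The `appearance_intervals` function, the sample `frames` data, and the frame rate are all hypothetical, not Facebook's implementation.

```python
def appearance_intervals(frames, person, fps):
    """Return a list of (start_seconds, duration_seconds) tuples, one for
    each contiguous run of frames in which `person` was recognized."""
    intervals = []
    start = None  # index of the first frame of the current run, if any
    for index, faces in enumerate(frames):
        present = person in faces
        if present and start is None:
            start = index  # the person just entered
        elif not present and start is not None:
            intervals.append((start / fps, (index - start) / fps))
            start = None  # the person just left
    if start is not None:
        # The person was still on screen when the video ended.
        intervals.append((start / fps, (len(frames) - start) / fps))
    return intervals


# Hypothetical recognizer output: one set of names per frame, at 2 frames per second.
frames = [set(), {"alice"}, {"alice"}, {"alice", "bob"}, {"bob"}, set(), {"alice"}, {"alice"}]

print(appearance_intervals(frames, "alice", fps=2))
print(appearance_intervals(frames, "bob", fps=2))
```

Turning per-frame results into entry times and durations is simple bookkeeping. Doing the recognition reliably on every frame of a live stream is where the real difficulty lies.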

However, analyzing Live Video can also be a major win for the strength of Facebook’s artificial intelligence, says Candela. A major selling point for artificial intelligence is that it learns as it works, and a nearly unlimited stream of video on varied topics from around the world is the perfect opportunity to learn about new things.