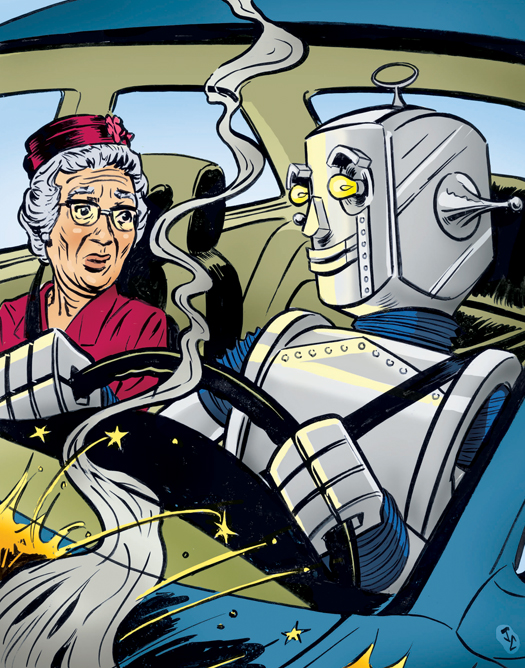

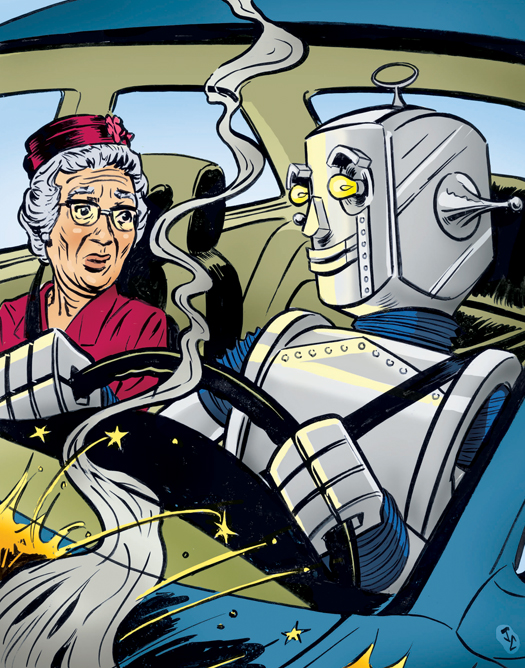

Society must make two big leaps to enable truly self-driving cars. The first is technological. Engineers need to improve today’s cars (which can warn a driver that he’s drifting out of his lane) beyond current Google and Darpa prototypes (which stay in their lane on their own) to the point where automobiles can edge forward through a construction zone while their owners sleep inside.

The technological leap will be good for everyone. Machines are incredibly reliable. Humans are not. Most car crashes are caused by human error (a 2004 World Health Organization report put the figure at 90 percent). As safety technologies like antilock brakes and traction-control systems took hold, the number of fatal accidents in the U.S. dropped 35 percent between 1970 and 2009, even though the total distance driven each year grew by more than a trillion miles over the same period. “Robots have faster reaction times and will have better sensors than humans,” says Seth Teller, a professor of computer science and engineering at MIT. “The number of accidents will never reach zero, but it will decrease substantially.” Don’t think of self-driving cars as a convenience; they’re a safety system.

The other leap that society has to make is from driver liability to manufacturer liability. When a company sells a car that truly drives itself, the responsibility will fall on its maker. “It’s accepted in our world that there will be a shift,” says Bryant Walker Smith, a legal fellow at Stanford University’s law school and engineering school who studies autonomous-vehicle law. “If there’s not a driver, there can’t be driver negligence. The result is a greater share of liability moving to manufacturers.”

These liability issues could make adopting the technology difficult, perhaps even impossible. In the 1970s, auto manufacturers hesitated over implementing airbags because of the threat of lawsuits in cases where someone might be injured in spite of the new technology. Over the years, airbags have been endlessly refined. They now account for a variety of passenger sizes and weights and come with detailed warnings about their dangers and limitations. Taking responsibility for every aspect of a moving vehicle, from what it sees to what it does, is far more complicated. It could be too much liability for any company to take on.

The government could step in, though. In a few instances, federal law has overridden state law to protect the public. Under the 1986 National Childhood Vaccine Injury Act, for example, vaccine makers have special protection. Consumers can file injury claims through a dedicated office of the U.S. Court of Federal Claims, and vaccine makers pay out without admitting fault. The act seeks to protect the small number of people hurt by vaccines while encouraging vaccine makers to keep producing the drugs, because to prevent disease an unvaccinated person must be surrounded by thousands of vaccinated ones. Autonomous technology is similar: It won’t make us safer until it’s in most vehicles. Maybe it deserves special treatment to get it on the road.