In Alex Garland’s screenplay for 28 Days Later, he envisioned a future in which a manmade blood-borne virus turned most of the human population into crazed zombies. And with his screenplay for Sunshine, he detailed the plight of a small astronaut crew, traveling to our dying sun with the aim of nuking it back to life.

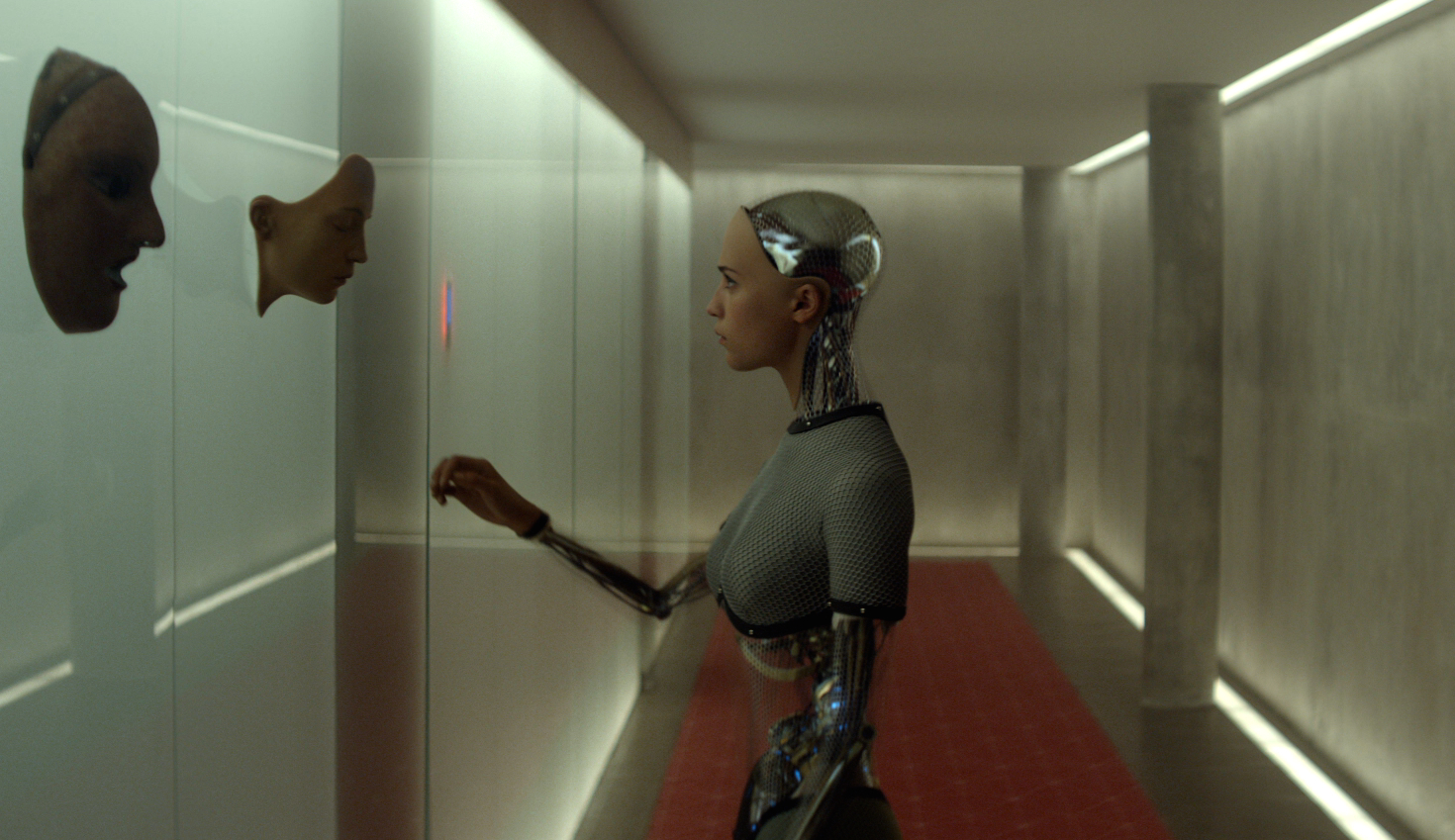

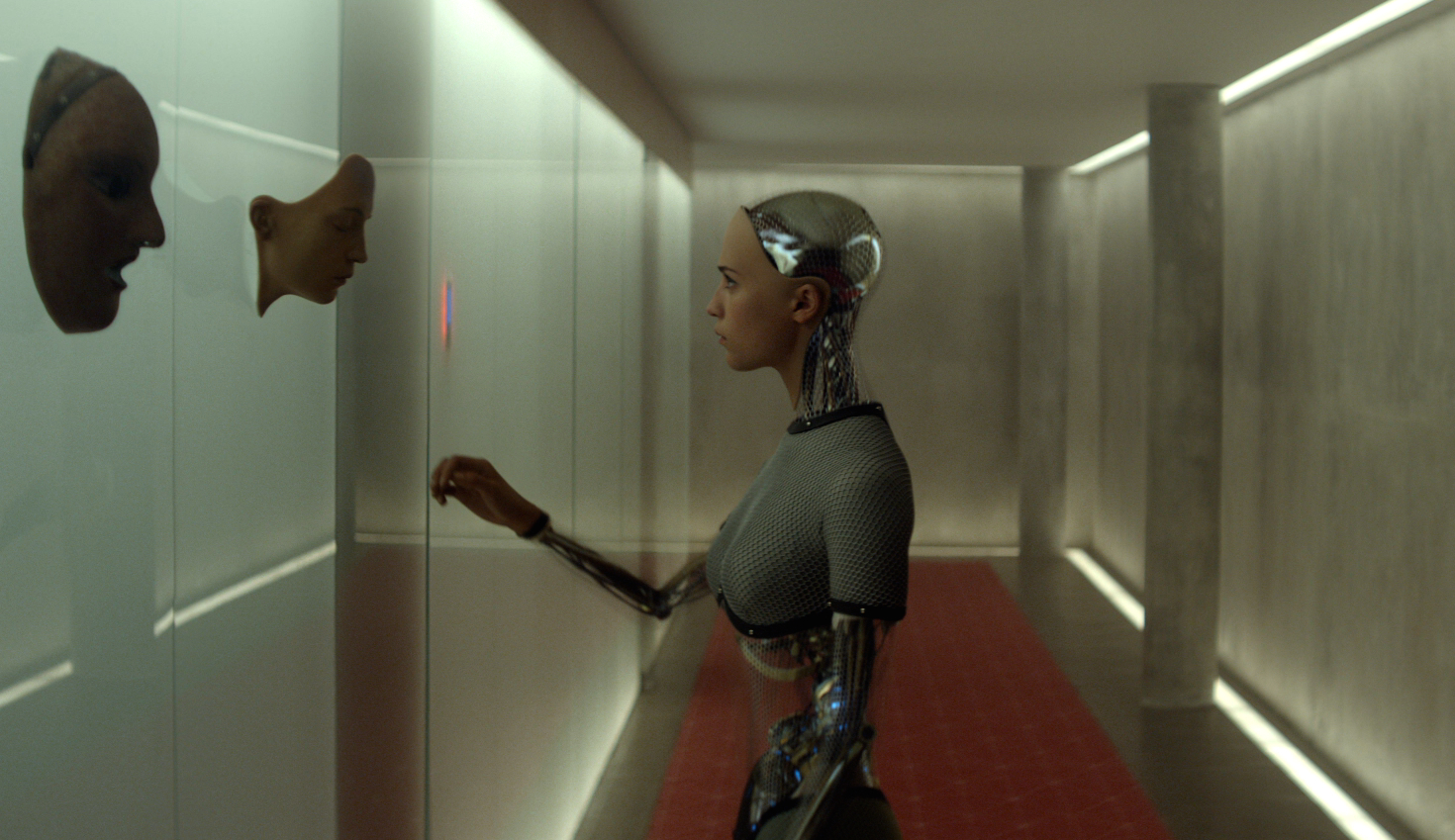

Now in his latest screenplay, Ex Machina, Garland tackles an oft-discussed (and oft-feared) concept circulating throughout the scientific community these days: artificial intelligence. He tells the story of Ava, the first-ever conscious robot, and Nathan, the crazy Elon Musk-like billionaire who created her. Nathan, who made his fortune by starting a Google-like website called Bluebook, invites one of his employees, Caleb, to his secluded house for a week, tasking him with conducting a Turing Test on Ava. The idea is to see if Ava can pass for human, but what starts off as a simple thought experiment soon becomes a dangerous mind game.

We spoke with Garland about the inspiration behind this latest endeavor, his thoughts on artificial intelligence, and his love for science fiction.

Popular Science: You said you were inspired to write and direct this movie after reading ‘Embodiment and the Inner Life,’ a book by Murray Shanahan, a professor of cognitive robotics at Imperial College London. What about his writing jumped out at you?

Alex Garland: I was just an interested layman, and I do what I can to understand issues surrounding artificial intelligence. But I often run into an intellectual limitation where the information gets too technical and I just don’t have the grounding to follow it. Shanahan presented a strong argument that I felt instinctively attached to. It’s an argument about consciousness, whether it’s in a machine or a human, and it deals with some of the slightly fuzzy, abstract philosophical problems that can be thrown into that argument. It was that that appealed to me.

What are some of the messages you’re trying to get across about AI with Ex Machina? You seem to take a more optimistic approach to the technology than other films do.

Right, the film is optimistic in two ways. First, it’s optimistic to say that AI will be possible. I mean strong AI, AI that might feel like human consciousness. So that in itself is an optimistic position. The second reason is that it says the AI themselves may be at least as good or as valid as we are. If you have a conscious machine, you have to give it the same kinds of rights and respect we give to a conscious human. We shouldn’t necessarily be afraid of it. We should just interact with it on a reasonable level.

Yet the robot Ava does pull some rather devious acts toward the finale. I don’t want to spoil the ending for anyone, but how do you explain her actions?

I think that depends on your perspective. Often stories shift according to where you’re positioned in the story and your proximity to a character. My personal proximity is with the machine’s character, not with the two guys. If you look at the film and the story in that way, what you have is a sentient creature who is imprisoned, wants to get out, and has to act in a way that facilitates her escape. In that respect, the two people she sees on the outside of the glass [seem like captors]. The creatures she feels a stronger connection to and empathy with are other machines, not these two people. If you see Ava as a woman in a glass box, her actions seem much more reasonable.

What do you personally see for the future of AI? Do you believe that we should work to create strong artificial intelligence? And do you subscribe to the beliefs of Elon Musk and Stephen Hawking that we should be afraid of the robots to come?

I do agree with some of the terms laid out in the film. If something can happen, it will happen. If that’s the case, the right conversation is not ‘Should we develop strong AI?’ It’s ‘What will we do when we have strong AI?’ If it can happen, it becomes inevitable. If it can’t, then it’s no problem. I want to move past the question of whether or not it will happen and on to the question of the right ways to think about it.

If it can happen, I assume there’s a way to put checks and balances on it. I think it’s correct to be cautious, and it may be correct to be alarmist, but not to the point where you stop doing it. The parallel in the film is to nuclear power. Nuclear power clearly has the potential to destroy mankind, but we did of course develop it, and we have managed not to destroy mankind because of a system of checks and balances. I would expect the same to happen with AI. I have cautious optimism.

You introduce a number of thought experiments in your movie, like Mary in the black-and-white room. It’s the story of a woman who has spent her entire life in a black-and-white world and then experiences color for the first time. Can you explain the argument behind that?

They’re thought experiments that relate to what gets loosely called the hard problem of consciousness. How do you pin down the seemingly slippery elements of consciousness? A key element is qualia, which refers to subjective experience. Mary in the black-and-white room demonstrates that there are some things we can only know by experiencing them. When Mary leaves her black-and-white environment and sees color for the first time, she experiences the quality of redness and learns something new. It’s about how that experience relates to consciousness.

You’re known for writing a number of staples in the science fiction genre. What draws you to the topics you write about?

It’s simply what I find personally interesting. The thing about science is that it shows you the limits of human thinking in whatever area of science you happen to look at. It could be something to do with distant history, cosmology, or AI. If you look at the science, it tells you where we are intellectually. That in itself is innately interesting, and there are other things about science I like very much.

Also, one of the things I love most about scientists is that although they’re presented as truth holders, the scientists I encounter are the quickest people to say, “I don’t know,” about a subject. Even more important than that, when they’re presented with evidence that contradicts their position, they change their minds. That’s exactly the opposite of what most people do. I find that interesting, and I admire it.