Google’s servers drive much of the world’s data, and apparently they dream as well, according to a Google blog post by two Google software engineers and an intern.

Google’s artificial neural networks (ANNs) are stacked layers of artificial neurons, simulated on computers, that are used to process Google Images. To understand how computers dream, we first need to understand how they learn. In basic terms, Google’s programmers teach an ANN what a fork is by showing it millions of pictures of forks, each labeled as a fork. Each of the network’s 10 to 30 layers extracts progressively more complex information from the picture, from edges to shapes to, finally, the idea of a fork. Eventually, the neural network learns that a fork has a handle and two to four tines. Whenever it misreads a picture, its internal parameters are adjusted, and training continues until it gives the classifications the team wants.
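To make that training loop concrete, here is a minimal Python sketch. It is a single-layer toy (logistic regression), not Google’s 10-to-30-layer network, and the three hand-picked features (`has_handle`, `has_tines`, `is_round`) and the example data are hypothetical; a real network learns its own features from raw pixels. What it does show is the cycle described above: guess, compare the guess with the label, nudge the parameters, repeat.

```python
import math

# Hypothetical hand-made features per picture: [has_handle, has_tines, is_round].
# A real image network learns its own features from raw pixels across many layers.
TRAINING_DATA = [
    ([1.0, 1.0, 0.0], 1),  # fork
    ([1.0, 1.0, 0.0], 1),  # another fork
    ([1.0, 0.0, 1.0], 0),  # spoon: handle and round bowl, no tines
    ([0.0, 0.0, 1.0], 0),  # plate
    ([1.0, 0.0, 0.0], 0),  # knife
]

def predict(weights, bias, features):
    """Probability (0 to 1) that the picture shows a fork."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=1000, learning_rate=0.5):
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for features, label in data:
            # How far the current guess is from the label we showed it.
            error = predict(weights, bias, features) - label
            # Nudge each parameter against the gradient of the log-loss.
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias

weights, bias = train(TRAINING_DATA)
print(predict(weights, bias, [1.0, 1.0, 0.0]))  # fork-like picture: scores high
print(predict(weights, bias, [1.0, 0.0, 1.0]))  # spoon-like picture: scores low
```

Only the `has_tines` weight separates forks from the knife and spoon here, so training ends up leaning on it, much as the real network comes to rely on tines.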

The Google team realized that the same process used to recognize images could also be used to generate them. The logic holds: if you know what a fork looks like, you should, in principle, be able to draw one.

The results showed that even after seeing millions of photos, the network couldn’t come up with a perfect Platonic form of an object. For instance, when asked to create a dumbbell, it depicted long, stringy arm-things stretching from the dumbbell shapes. Because arms often appeared in pictures of dumbbells, the network concluded that dumbbells sometimes have arms.

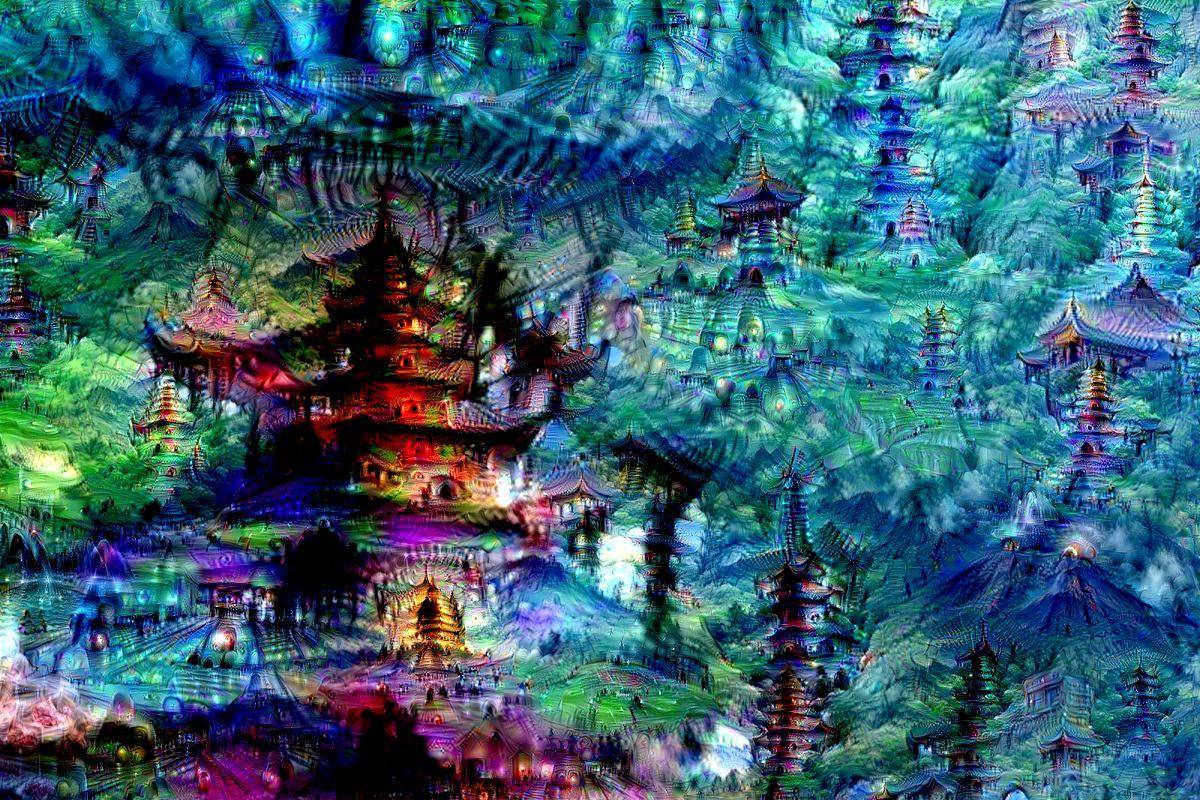

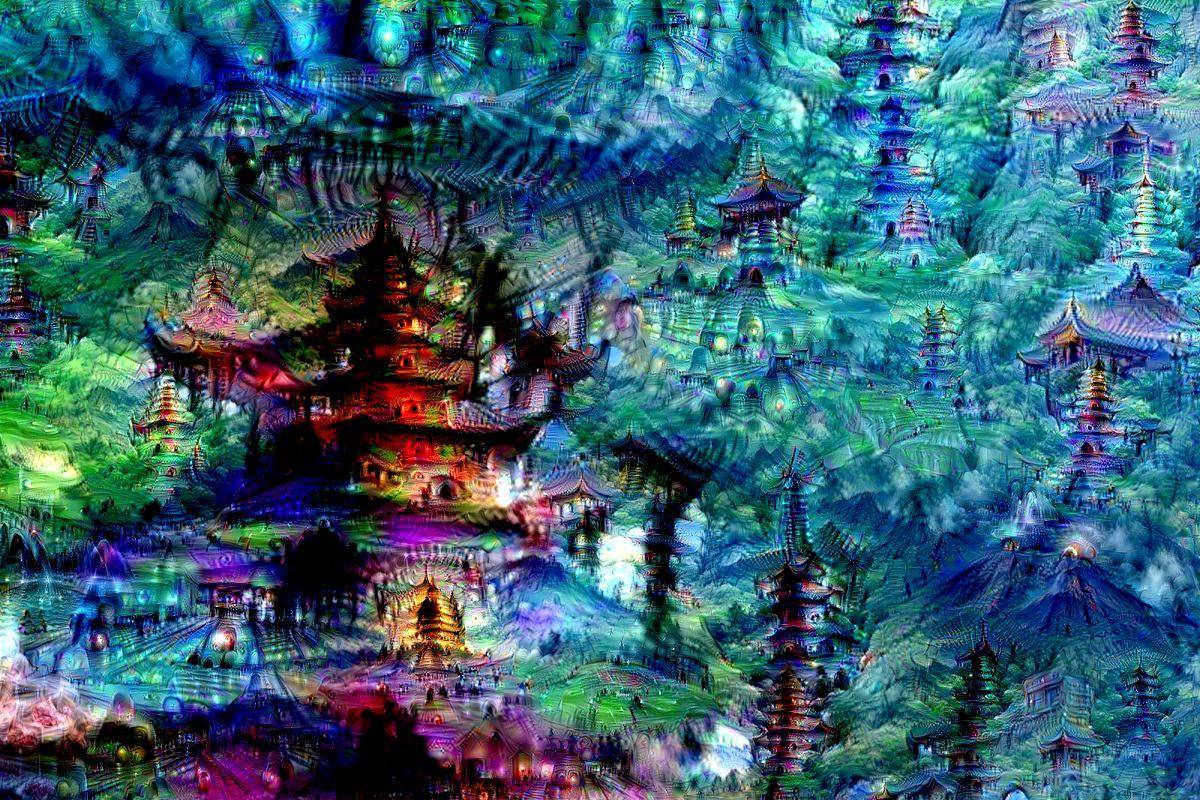
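The dumbbell result can be reproduced in miniature. The Python sketch below trains a toy one-layer “dumbbell detector” on made-up feature vectors in which most dumbbell photos also contain an arm. It then runs the generation idea described above: start from a blank picture and repeatedly nudge the input (not the weights) in whatever direction raises the dumbbell score, a technique usually called activation maximization. The features (`round_plates`, `metal_bar`, `arm`), the data, and the step sizes are all assumptions for illustration, not Google’s setup.

```python
import math

# Hypothetical toy features per photo: [round_plates, metal_bar, arm].
# In these made-up photos, almost every dumbbell is being held by an arm.
PHOTOS = [
    ([1.0, 1.0, 1.0], 1),  # dumbbell in someone's hand
    ([1.0, 1.0, 1.0], 1),
    ([1.0, 1.0, 1.0], 1),
    ([1.0, 1.0, 0.0], 1),  # dumbbell on a rack, no arm
    ([1.0, 0.0, 0.0], 0),  # a ball
    ([0.0, 1.0, 0.0], 0),  # a bare pipe
    ([0.0, 0.0, 0.0], 0),  # an empty floor
]

def train(data, epochs=1000, learning_rate=0.5):
    """Logistic-regression 'dumbbell detector' trained one example at a time."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for features, label in data:
            z = sum(w * x for w, x in zip(weights, features)) + bias
            error = 1.0 / (1.0 + math.exp(-z)) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias

def dream(weights, steps=100, step_size=0.1):
    """Gradient ascent on the *input* to raise the dumbbell score.

    For a linear score w.x + b, the gradient with respect to x is just w, so each
    step moves the picture toward whatever the detector associates with dumbbells.
    Values are clipped to [0, 1] to keep the 'picture' in a valid range.
    """
    picture = [0.0] * len(weights)  # start from a blank picture
    for _ in range(steps):
        picture = [min(1.0, max(0.0, p + step_size * w)) for p, w in zip(picture, weights)]
    return picture

weights, _ = train(PHOTOS)
print(dream(weights))  # the 'arm' entry (last) ends up at 1.0
```

Because arms appear only alongside dumbbells in this toy data, the arm weight can only grow during training, so the generated picture always switches the arm on: the detector has no way to tell that the arm is not part of the object.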

This helped refine the company’s image processing capabilities, but the Google team took it further. They used the ANN to amplify patterns it saw in pictures. Each layer of the network works at a different level of abstraction: some layers pick up edges based on tiny differences in contrast, while others detect shapes and colors. The team ran this process to accentuate color and form, and then told the network to go buck wild and keep accentuating anything it recognized. So if a cloud looks a little like a bird, the network applies its idea of a bird to the cloud in small steps, over and over again.
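One way to read “keep accentuating anything it recognized” is gradient ascent on the image to increase a chosen layer’s own activations, so that whatever a detector faintly responds to gets pushed further in that detector’s direction. The Python sketch below assumes a hypothetical layer of just two hand-written detectors, `BIRD` and `BUILDING`, over a six-pixel strip; real networks have many learned detectors per layer. The gradient is divided by its mean absolute value so that each step has a similar size.

```python
# Hypothetical 'layer' of two feature detectors over a 6-pixel strip.
BIRD = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]     # alternating texture, read here as 'feathers'
BUILDING = [1.0, 1.0, -1.0, -1.0, 0.0, 0.0]  # a bright-to-dark edge

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def layer(picture):
    """Detector responses after a ReLU (negative responses become 0)."""
    return [max(0.0, dot(d, picture)) for d in (BIRD, BUILDING)]

def amplify_step(picture, step_size=0.01):
    """One gradient-ascent step on 0.5 * sum(response ** 2).

    The gradient w.r.t. the picture is sum_j response_j * detector_j, so detectors
    that are switched off (response 0) contribute nothing: only what the layer
    already 'sees' gets reinforced.
    """
    responses = layer(picture)
    grad = [sum(r * d[i] for r, d in zip(responses, (BIRD, BUILDING)))
            for i in range(len(picture))]
    scale = sum(abs(g) for g in grad) / len(grad)
    if scale == 0.0:
        return picture  # nothing recognized, nothing to amplify
    return [p + step_size * g / scale for p, g in zip(picture, grad)]

cloud = [0.5, 0.45, 0.55, 0.45, 0.5, 0.45]  # faintly bird-like, not building-like
picture = cloud
for _ in range(20):
    picture = amplify_step(picture)
print(layer(cloud), layer(picture))
```

Starting from a “cloud” that only the bird detector responds to, twenty small steps leave the building response at zero while the bird response grows steadily, a toy version of a cloud drifting toward a bird rather than toward something the network never saw in it.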

Oddly enough, the Google team found recurring patterns: stones and trees often became buildings, and leaves often became birds and insects.

Researchers then fed the picture the network produced back in as the next picture to process, zooming in slightly each time, and the network soon began to create an “endless stream of new impressions.” When started from white noise, the network produced images purely of its own design. The team calls these images the neural network’s “dreams”: completely original representations of a computer’s mind, derived from real-world objects.
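The zoom-and-repeat loop is simple to express. In the Python sketch below, `enhance` is a hypothetical stand-in for the network’s amplification step (it merely raises contrast), and `zoom` crops the center of the picture and stretches it back to full size with nearest-neighbor sampling; the 8×8 size, zoom factor, and gain are arbitrary assumptions. The part that mirrors the description is `dream_sequence`, which feeds each output back in as the next input, starting from random noise.

```python
import random

def zoom(image, factor):
    """Crop the central 1/factor of the image and stretch it back (nearest neighbor)."""
    h, w = len(image), len(image[0])
    crop_h, crop_w = h / factor, w / factor
    top, left = (h - crop_h) / 2, (w - crop_w) / 2
    rows = [min(h - 1, int(top + (r + 0.5) * crop_h / h)) for r in range(h)]
    cols = [min(w - 1, int(left + (c + 0.5) * crop_w / w)) for c in range(w)]
    return [[image[r][c] for c in cols] for r in rows]

def enhance(image, gain):
    """Hypothetical stand-in for the network's 'amplify' step: push every pixel
    away from the image mean (more contrast), clipped to [0, 1]."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return [[min(1.0, max(0.0, mean + gain * (p - mean))) for p in row] for row in image]

def dream_sequence(start, frames, zoom_factor=1.05, gain=1.1):
    """Feed each output back in as the next input, zooming slightly every time."""
    sequence = [start]
    for _ in range(frames):
        sequence.append(zoom(enhance(sequence[-1], gain), zoom_factor))
    return sequence

random.seed(0)
noise = [[random.random() for _ in range(8)] for _ in range(8)]  # start from random noise
frames = dream_sequence(noise, frames=10)
print(len(frames), len(frames[-1]), len(frames[-1][0]))  # prints: 11 8 8
```

Replacing `enhance` with a real network’s amplification step is the idea behind the endless zoom the researchers describe; the feedback loop itself stays the same.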

Google continues to use these techniques to find out what its artificial neural networks are learning, and even wonders whether they could shed light on the “roots of the creative process in general.”