A new AI called “DeepNash” has mastered Stratego, one of the few iconic board games where computers don’t regularly trounce human players, according to a paper published this week. It’s a huge and surprising result, at least to the Stratego community.

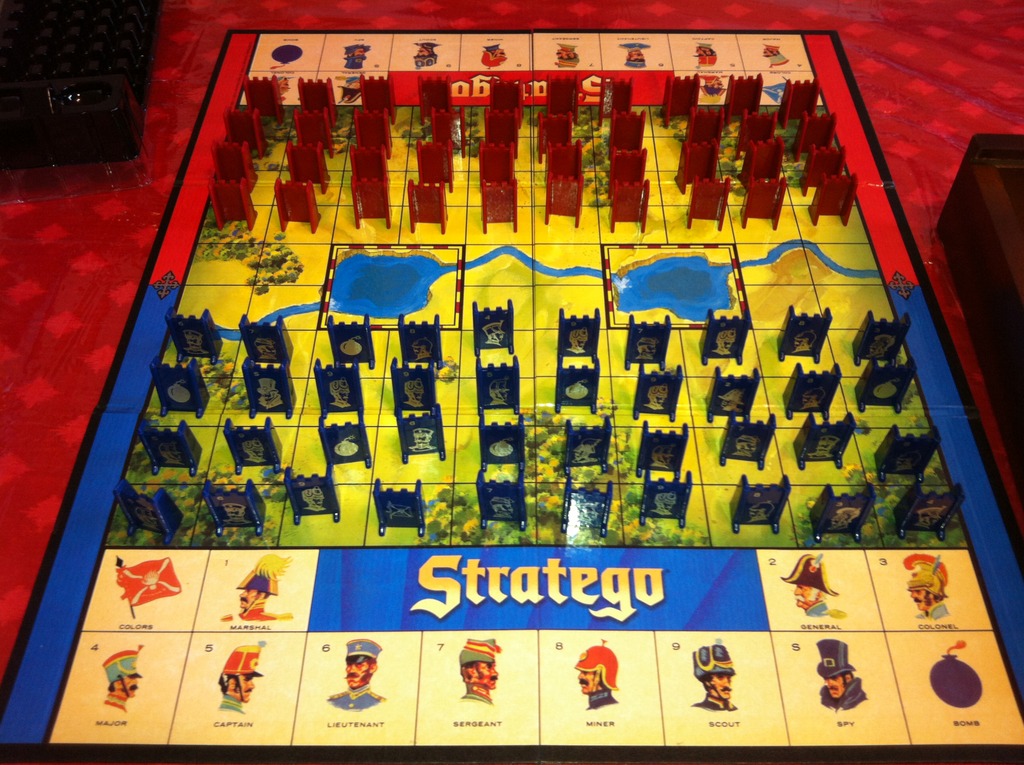

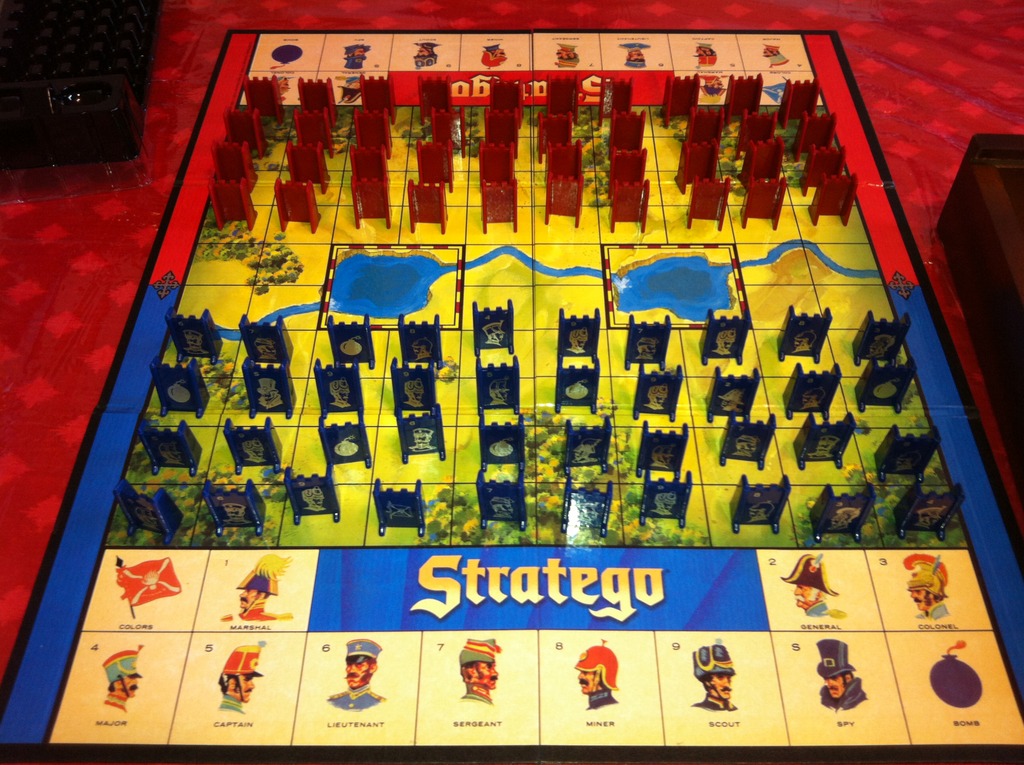

Stratego is a game with two distinct challenges: it requires long-term strategic thinking (like chess) and also requires players to deal with incomplete information (like poker). The goal is to move across the board and capture the other player’s flag piece. Each game takes place on a 10 x 10 gridded board with two 2 x 2 square lakes blocking the middle of the board. Both players have 40 pieces with different tactical values that are deployed at the start of the game. The catch is that you can’t see what your opponent’s pieces are, and they can’t see what yours are. When you are planning an attack, you don’t know if the defender is a high-ranked Marshal that will beat almost all your pieces or a lowly Sergeant that can be taken out by a Lieutenant or Captain. Other playable pieces include bombs (powerful but immobile), scouts (which can move more than one square at once), and miners (which can defuse bombs), all of which add to the tactical complexity. The game only ends when one player’s flag piece is captured or a player can no longer make any legal moves.
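To make the hidden-information problem concrete, here is a minimal Python sketch of how a single attack is resolved. It assumes the classic rank numbering (Marshal 10 down to Spy 1) and the standard rules for bombs, miners, and the Spy, which the paragraph above only partly describes; `RANK` and `resolve_attack` are hypothetical names for illustration. The key point is that the attacker commits to the move without knowing the `defender` argument.

```python
# Classic rank numbering (assumed; higher beats lower).
RANK = {
    "Marshal": 10, "General": 9, "Colonel": 8, "Major": 7, "Captain": 6,
    "Lieutenant": 5, "Sergeant": 4, "Miner": 3, "Scout": 2, "Spy": 1,
}


def resolve_attack(attacker: str, defender: str) -> str:
    """Return "attacker", "defender", or "both" (both pieces removed)."""
    if attacker not in RANK:
        raise ValueError(f"{attacker} cannot move, so it cannot attack")
    if defender == "Flag":
        return "attacker"  # capturing the flag wins the game
    if defender == "Bomb":
        # Bombs destroy any attacker except a Miner, which defuses them.
        return "attacker" if attacker == "Miner" else "defender"
    if attacker == "Spy" and defender == "Marshal":
        return "attacker"  # standard rule: the Spy beats the Marshal only when attacking
    if RANK[attacker] == RANK[defender]:
        return "both"
    return "attacker" if RANK[attacker] > RANK[defender] else "defender"


# The attacker chooses the move without knowing which defender it will meet.
for hidden in ("Sergeant", "Marshal", "Bomb"):
    print("Lieutenant vs", hidden, "->", resolve_attack("Lieutenant", hidden))
```

The same move by a Lieutenant wins against a Sergeant but loses against an unrevealed Marshal or bomb, and the attacking player only learns which after committing.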

All this is to say that Stratego poses a unique challenge for computers. Chess is relatively easy because all the information is visible to everyone; in game theory, it’s called a “perfect information game.” A computer can look at your defences, simulate 10 or so moves ahead for a few different options, and pick the best one. This gives computers a serious strategic advantage over even the best human players. It also helps that chess tends to be won or lost in a few key moments rather than by gradual pressure. The average chess game takes around 40 moves, while Stratego takes more than 380. This means each move in chess is far more important (and, for humans, warrants a lot more consideration), whereas Stratego is more fast-paced and flexible.
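The “simulate a few moves ahead and pick the best one” idea can be sketched as depth-limited negamax search. Chess is far too large for a short example, so this sketch swaps in a hypothetical toy game: players alternately take 1 to 3 objects from a pile, and whoever takes the last one wins. Real chess engines add evaluation functions, pruning, and much more; this only illustrates why full visibility makes look-ahead straightforward, since every position the search explores is known exactly.

```python
# Hypothetical toy game standing in for chess: players alternately remove
# 1, 2, or 3 objects from a pile, and whoever takes the last object wins.
def legal_moves(pile):
    return [take for take in (1, 2, 3) if take <= pile]


def negamax(pile, depth):
    """Score for the player to move: +1 forced win, -1 forced loss,
    0 if the search horizon is reached before the outcome is known."""
    if pile == 0:
        return -1  # the opponent just took the last object
    if depth == 0:
        return 0  # horizon reached; a real engine would evaluate the position here
    # Everything is visible, so each reply can be simulated exactly.
    return max(-negamax(pile - take, depth - 1) for take in legal_moves(pile))


def best_move(pile, depth=10):
    # Look `depth` plies ahead for every option and keep the highest-scoring one.
    return max(legal_moves(pile), key=lambda take: -negamax(pile - take, depth - 1))


print(best_move(5))
```

Here `best_move(5)` returns 1, leaving a pile of 4, from which the opponent loses against correct play.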

Stratego, on the other hand, is an “imperfect information game.” Until an opponent’s piece attacks or is attacked, you have no way of knowing what it is. In poker, an imperfect information game that computers have been able to play at a high level for years, there are 10^164 possible game states and each player has only 10^3 possible two-card starting hands. In Stratego, there are 10^535 possible states and more than 10^66 possible deployments, so there is far more hidden information to account for. And that’s on top of the strategic challenges.
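The 10^66 deployment figure can be roughly reproduced with a multinomial count. This sketch assumes the classic Stratego army composition (the article only says each side has 40 pieces) and that each player fills their own 40 setup squares with all 40 pieces. Identical pieces (the eight Scouts, say) are interchangeable, so their orderings are divided out. The poker comparison uses the 1,326 ways to deal two cards from a 52-card deck.

```python
import math

# Assumed classic Stratego army: 40 pieces per side (the article gives only the total).
ARMY = {
    "Marshal": 1, "General": 1, "Colonel": 2, "Major": 3, "Captain": 4,
    "Lieutenant": 4, "Sergeant": 4, "Miner": 5, "Scout": 8, "Spy": 1,
    "Bomb": 6, "Flag": 1,
}


def deployments_per_player(army):
    """Distinct ways to fill the 40 setup squares: a multinomial coefficient."""
    count = math.factorial(sum(army.values()))
    for copies in army.values():
        count //= math.factorial(copies)  # swapping identical pieces changes nothing
    return count


per_player = deployments_per_player(ARMY)
both_players = per_player ** 2  # each side sets up independently
poker_hands = math.comb(52, 2)  # two-card starting hands

print(f"one side: {per_player:.2e}, both sides: {both_players:.2e}, poker hands: {poker_hands}")
```

Under these assumptions, one side has roughly 1.4 x 10^33 setups, and squaring that for both players gives roughly 2 x 10^66, consistent with the “more than 10^66” figure, versus about 10^3 starting hands per poker player.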

Combined, the two challenges make Stratego especially difficult for computers (or AI researchers). According to the team, it’s “not possible to use state-of-the-art model-based perfect information planning techniques nor state-of-the-art imperfect information search techniques that break down the game into independent situations.” The computer has to make strategic plans that account for the imperfect information available to it.

But DeepNash has been able to pull it off. The researchers used a novel method that allowed the AI to learn to play Stratego by itself while developing its own strategies. It used a model-free reinforcement learning algorithm called Regularized Nash Dynamics (R-NaD), combined with a deep neural network architecture, to seek a Nash equilibrium, “an unexploitable strategy in zero-sum two-player games” like Stratego. By doing so, it could learn the “qualitative behavior that one could expect a top player to master.” This approach has been used before in simple Prisoner’s Dilemma-style games, but never in a game as complex as this.
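R-NaD itself runs on the full game with a deep network, which is well beyond a short example. What follows is only a toy sketch of the underlying idea, under heavy simplifying assumptions: a tiny zero-sum matrix game (Rock-Paper-Scissors) instead of Stratego, exact expected payoffs instead of sampled play, and a multiplicative-weights discretization of replicator dynamics instead of a neural network. Each outer step penalizes each player’s payoffs by `eta * log(pi / pi_ref)`, runs the dynamics toward a fixed point of that regularized game, and then moves the reference policy to that fixed point. The function names and the `eta`, `lr`, `inner`, and `outer` settings are hypothetical.

```python
import math

# Toy zero-sum game (an assumption standing in for Stratego): Rock-Paper-Scissors.
# Entry A[i][j] is the row player's payoff; the column player receives -A[i][j].
A = [[0, -1, 1],
     [1, 0, -1],
     [-1, 1, 0]]


def softmax(logits):
    """Turn log-weights into a probability vector (max-shifted for stability)."""
    top = max(logits)
    weights = [math.exp(v - top) for v in logits]
    total = sum(weights)
    return [w / total for w in weights]


def row_payoffs(y):
    # Expected payoff of each row action against the column mixture y.
    return [sum(A[i][j] * y[j] for j in range(3)) for i in range(3)]


def col_payoffs(x):
    # Expected payoff of each column action against the row mixture x.
    return [-sum(x[i] * A[i][j] for i in range(3)) for j in range(3)]


def regularized_step(policy, payoffs, ref, eta, lr):
    # Reward transformation: penalize drifting from the reference policy,
    # then take one multiplicative-weights step on the transformed payoffs.
    return softmax([math.log(p) + lr * (g - eta * math.log(p / r))
                    for p, g, r in zip(policy, payoffs, ref)])


def rnad_sketch(x, y, eta=0.5, lr=0.2, outer=100, inner=200):
    for _ in range(outer):
        x_ref, y_ref = x, y  # freeze the current policies as the reference
        for _ in range(inner):
            gx, gy = row_payoffs(y), col_payoffs(x)  # simultaneous update
            x = regularized_step(x, gx, x_ref, eta, lr)
            y = regularized_step(y, gy, y_ref, eta, lr)
    return x, y


x, y = rnad_sketch([0.6, 0.3, 0.1], [0.2, 0.2, 0.6])
print([round(p, 3) for p in x], [round(p, 3) for p in y])
```

Starting from lopsided strategies, both players drift toward the uniform mix (one third each), which is the Nash equilibrium of Rock-Paper-Scissors and the only strategy neither player can exploit. Without the regularization term, plain replicator dynamics in this game tends to cycle around the equilibrium rather than settle on it, which is the problem the regularization is meant to address.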

DeepNash was tested against the best existing Stratego bots and expert human players. It beat all other bots and was highly competitive against expert humans on Gravon, an online board games platform. Even better, its play was qualitatively strong: it could make trade-offs between taking material and concealing the identity of its pieces, execute bluffs, and even take calculated gambles. (The researchers do acknowledge, though, that terms like “deception” and “bluff” might well refer to mental states that DeepNash is incapable of having.)

All told, it’s an exciting demonstration of a new way of training AI models to play games (and maybe to perform similar tasks in the future), and it doesn’t rely on the computationally heavy deep search strategies that have previously been used to play other games like chess, Go, and poker.