In the most public matchup of man against machine since Garry Kasparov played chess against IBM's Deep Blue computer in 1997, Google DeepMind will play the first game of a five-game Go match tonight against the game's world champion.

AlphaGo, Google DeepMind's artificial intelligence program, will face Lee Se-dol, who has been regarded as the top Go player in the world for the past decade.

AlphaGo previously beat European champion Fan Hui in a match in October 2015. However, Se-dol is a much more formidable opponent. Go rankings are confusing and somewhat convoluted, but according to calculations by Michael Fu on Quora, Fan would statistically have a 25 percent chance of beating Se-dol, based on the two players' past rating scores.

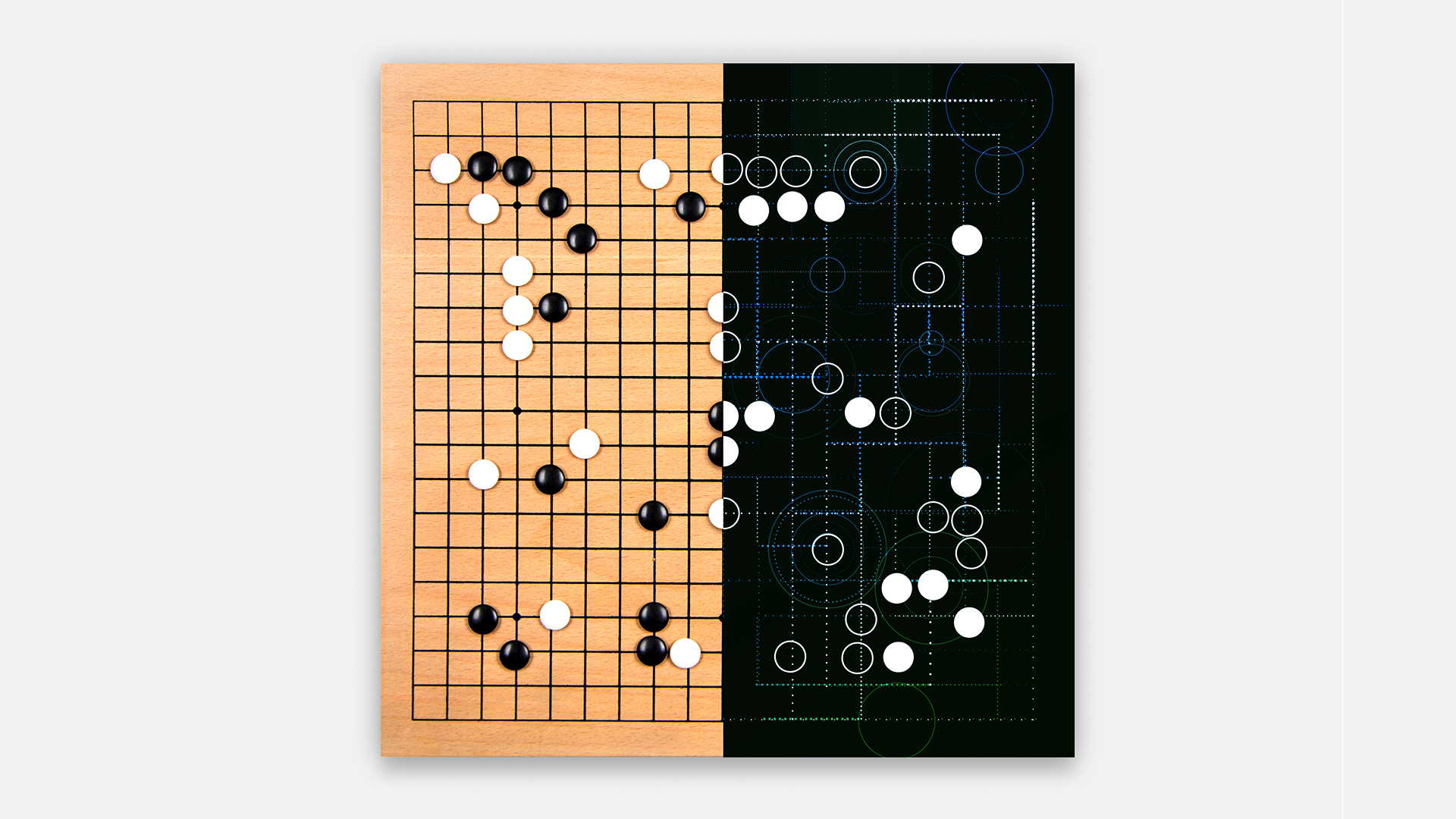

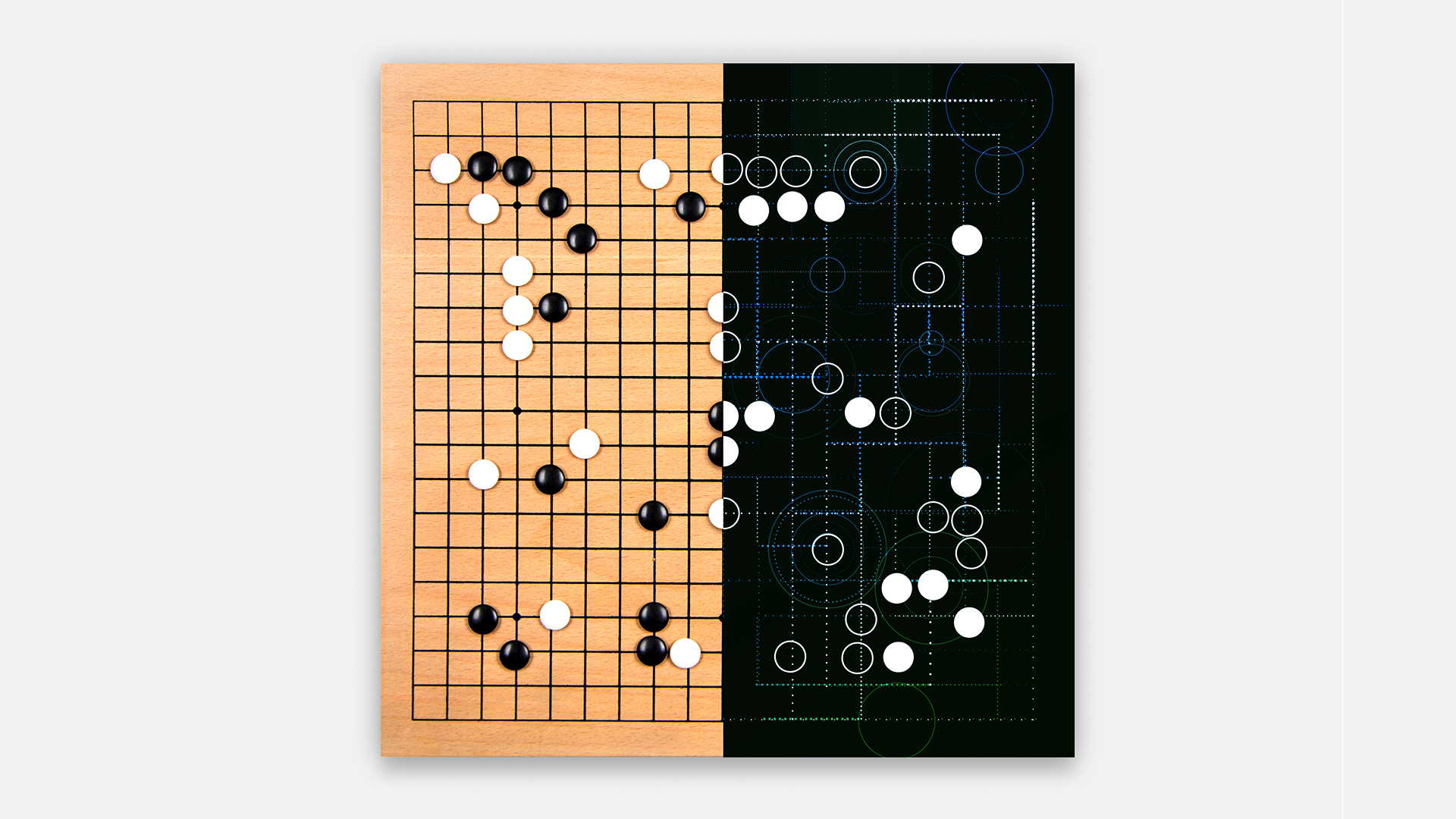

Go is a two-player game played on a 19×19 board. Each turn, a player places a round piece, called a stone, on an intersection of the grid, aiming to surround and capture the other player's stones. One player uses black stones, the other white. The 19×19 grid creates nearly endless possibilities for each move, and for each outcome of the game. When Deep Blue played chess against Garry Kasparov, the machine could evaluate 200 million positions per second to see which move would be most advantageous. That brute-force approach is not possible in Go, because the sheer number of possible outcomes is far too vast.

In chess, there are about 20 potential moves each turn, DeepMind head Demis Hassabis told The Verge, but Go has about 200 potential moves per turn, vastly complicating any computations.
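To make the gap concrete, here is a rough back-of-the-envelope sketch in Python, assuming the article's approximate figures (about 20 moves per turn in chess, about 200 in Go) stay constant at every turn and assuming Deep Blue's 200 million positions per second as the search speed. Real games vary from turn to turn, so these numbers are illustrative only. The count of move sequences grows as moves-per-turn raised to the number of turns looked ahead, so each extra turn multiplies the Go-to-chess gap by another factor of 10.

```python
# Back-of-the-envelope sketch: how many move sequences a brute-force search
# would need to examine when looking `depth` turns ahead.
# Assumption: a constant number of moves per turn (the article's rough averages).

CHESS_MOVES_PER_TURN = 20
GO_MOVES_PER_TURN = 200
POSITIONS_PER_SECOND = 200_000_000  # Deep Blue's reported search speed


def sequences(moves_per_turn: int, depth: int) -> int:
    """Number of distinct move sequences of length `depth`: moves_per_turn ** depth."""
    return moves_per_turn ** depth


def seconds_to_search(moves_per_turn: int, depth: int) -> float:
    """Time to examine every sequence at POSITIONS_PER_SECOND."""
    return sequences(moves_per_turn, depth) / POSITIONS_PER_SECOND


if __name__ == "__main__":
    for depth in (4, 6, 8):
        chess_s = seconds_to_search(CHESS_MOVES_PER_TURN, depth)
        go_s = seconds_to_search(GO_MOVES_PER_TURN, depth)
        print(f"{depth} turns ahead: chess {chess_s:.3g} s, Go {go_s:.3g} s")
```

Under these assumptions, looking eight turns ahead takes about two minutes in chess but roughly 400 years in Go.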

To make these decisions, AlphaGo relies on two deep neural networks: layers of mathematical functions that have been trained on tens of millions of moves from previous Go games. One network predicts the most promising next moves, based on positions it has seen before, and the other judges whether a move leaves AlphaGo in a favorable position to win the entire game. If so, AlphaGo makes the move. Because AlphaGo is software, it relies on a human proxy to physically place the stones.
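The two-network idea can be sketched as a toy in Python: one function proposes candidate moves, another scores the resulting positions, and the best-scoring candidate is played. This is a simplified illustration of the description above, not AlphaGo's actual implementation, which combines its networks with Monte Carlo tree search. `toy_policy` and `toy_value` are hypothetical stand-ins with made-up scoring rules, not trained networks.

```python
from typing import Callable, Dict, Optional, Tuple

Move = Tuple[int, int]   # (row, col) on the 19x19 board
Board = Dict[Move, str]  # occupied points -> "B" or "W"


def choose_move(
    board: Board,
    policy: Callable[[Board], Dict[Move, float]],
    value: Callable[[Board], float],
    top_k: int = 5,
) -> Optional[Move]:
    """Take the policy's top_k candidate moves, then play the one whose
    resulting position the value function rates highest."""
    ranked = sorted(policy(board).items(), key=lambda kv: kv[1], reverse=True)
    best_move, best_score = None, float("-inf")
    for move, _prior in ranked[:top_k]:
        next_board = dict(board)
        next_board[move] = "B"  # assumption: we are playing Black
        score = value(next_board)
        if score > best_score:
            best_move, best_score = move, score
    return best_move


def toy_policy(board: Board) -> Dict[Move, float]:
    # Hypothetical stand-in for the policy network:
    # prefers empty points closer to the center of the board.
    empty = [(r, c) for r in range(19) for c in range(19) if (r, c) not in board]
    return {m: 1.0 / (1 + abs(m[0] - 9) + abs(m[1] - 9)) for m in empty}


def toy_value(board: Board) -> float:
    # Hypothetical stand-in for the value network: a made-up "win chance"
    # that rises slightly for each Black stone in the top half of the board.
    top_half_black = sum(1 for (r, _c), color in board.items() if color == "B" and r < 9)
    return 0.5 + 0.01 * top_half_black


if __name__ == "__main__":
    print(choose_move({}, toy_policy, toy_value))
```

On an empty board, the toy policy's favorite is the center point (9, 9), but the toy value function rates (8, 9) higher, so the sketch plays (8, 9). This shows the second network overruling the first.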

Winning these games would validate the work Google DeepMind is doing in game-based artificial intelligence. The company believes that games, with their set parameters and rules, much like the laws of physics or rules imposed by society, let researchers train artificial intelligence algorithms in controlled environments. The value of exercises like the match against Se-dol lies in the algorithm's ability to solve enormously complex problems within limited time.

The same kind of computation that drives AlphaGo's decision engine could one day be driving your car. Even in the limited setting of a board game, an AlphaGo victory would expand our knowledge of how to deploy these networks and show the world that A.I. is ready for serious implementation.

The match is for a prize of $1 million, and if AlphaGo wins, the money will be donated to UNICEF, STEM and Go charities.

The match will stream on YouTube starting at 11 p.m. EST (8 p.m. PST). Each game is expected to last four to five hours.

American Go player Michael Redmond, who has more than 500 competitive wins, will act as an English announcer for each match.

The five games will take place March 9, 10, 12, 13, and 15, each starting at 1 p.m. Korea time. For viewers in the U.S., that's 11 p.m. EST on March 8, 9, 11, 12, and 14.