Stanford researchers want to give digital cameras better depth perception

A modulator created by Stanford engineers can give a regular digital camera some lidar capabilities.

Digital and smartphone cameras can take pictures with better resolution than ever before. However, these cameras, which rely on CMOS (complementary metal-oxide-semiconductor) image sensors, cannot perceive depth the way lidar devices can. Lidar stands for light detection and ranging.

Lidar sensors emit pulses of laser light into the surrounding environment. When these pulses bounce off objects and return to the sensor, the time they took to make the trip reveals how far away each object is. This type of 3D imaging is useful for directing machines like drones, robots, or autonomous vehicles. But lidar devices are bulky and expensive, and they need to be built from scratch and customized for each type of application.
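As a rough illustration of the ranging idea, here is a minimal Python sketch of the time-of-flight arithmetic that pulsed lidar generally relies on: light travels out and back, so the one-way distance is half the round-trip time multiplied by the speed of light. The function name and the example timing are hypothetical and are not taken from the Stanford paper.

```python
# Minimal time-of-flight sketch (illustrative only, not the Stanford method).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in meters for a pulse's round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


if __name__ == "__main__":
    # Hypothetical example: an echo that arrives 100 nanoseconds after emission.
    print(f"{distance_from_round_trip(100e-9):.2f} m")
```

The tiny time scales involved (tens of nanoseconds for objects a few meters away) hint at why dedicated ranging hardware has traditionally been needed.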

Researchers from Stanford University wanted to build a low-cost, three-dimensional sensing device that takes advantage of the best features of both technologies. In essence, they took a component from lidar sensors and modified it to work with a standard digital camera, giving the camera the ability to gauge distance in images. A paper detailing their device was published in the journal Nature Communications in March.

Over the last few decades, CMOS image sensors have become very advanced, very high-resolution, and very cheap. “The problem is CMOS image sensors don’t know if something is one meter away or 20 meters away. The only way to understand that is by indirect cues, like shadows, figuring out the size of the object,” says Amin Arbabian, associate professor of electrical engineering at Stanford and an author on the paper. “The advanced lidar systems that we see on self-driving cars are still low volume.”

If there were a way to cheaply add 3D sensing to a CMOS sensor through an accessory or attachment, the technology could be deployed at scale wherever CMOS sensors are already in use. The team's fix comes in the form of a simple contraption that can be placed in front of a normal digital camera or even a smartphone camera. “The way you capture in 3D is by adding a light source, which is already present in most cameras as the flash, and also modulators that we invented,” says Okan Atalar, a doctoral candidate in electrical engineering at Stanford and the first author on the paper. “Using our approach, on top of the brightness and colors, we can also perceive the depth.”

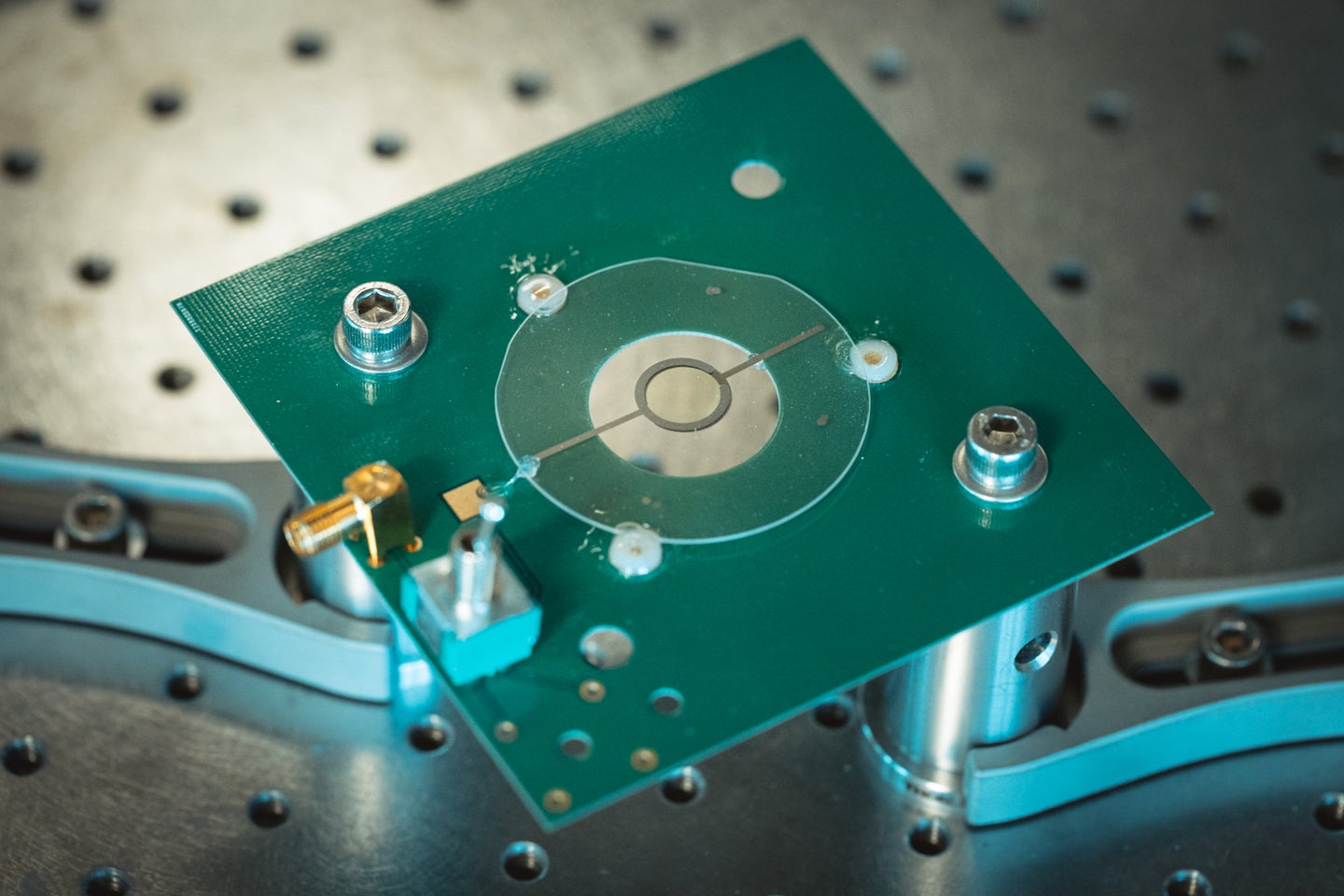

Modulators can alter the amplitude, frequency, and intensity of light waves that pass through them. The Stanford team’s device consists of a modulator made of a wafer of lithium niobate coated with electrodes that’s sandwiched between two optical polarizers. The device measures distance by detecting variations in incoming light.
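The article does not spell out the arithmetic behind the measurement. One common principle in modulated-light depth sensing, assumed here purely for illustration, is indirect time of flight: the light source is modulated at a known frequency, and the phase lag of the returning light is proportional to distance. The Python sketch below uses hypothetical function names and a hypothetical modulation frequency; it is not the team's implementation.

```python
# Indirect (phase-based) time-of-flight sketch. Assumption: depth is recovered
# from the phase lag of intensity-modulated light. Values are illustrative.
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def depth_from_phase(phase_shift_rad: float, modulation_hz: float) -> float:
    """Return depth in meters for a measured phase lag at a modulation frequency."""
    if modulation_hz <= 0:
        raise ValueError("modulation frequency must be positive")
    # Round-trip delay is phase / (2 * pi * f); depth is half of c times that delay.
    return SPEED_OF_LIGHT_M_PER_S * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz: float) -> float:
    """Return the largest depth measurable before the phase wraps past 2 * pi."""
    if modulation_hz <= 0:
        raise ValueError("modulation frequency must be positive")
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * modulation_hz)


if __name__ == "__main__":
    f_mod = 4e6  # hypothetical 4 MHz modulation frequency
    print(f"depth at a quarter-cycle lag: {depth_from_phase(math.pi / 2, f_mod):.2f} m")
    print(f"unambiguous range: {unambiguous_range(f_mod):.2f} m")
```

Under this assumption, a higher modulation frequency gives finer depth resolution for the same phase accuracy but shortens the range over which depth can be read without ambiguity.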

In their testing, a digital camera paired with their prototype captured four-megapixel-resolution depth maps in an energy-efficient way. Having demonstrated that the concept works in practice, the team will now try to improve the device’s performance. Currently, their modulator works with sensors that can capture visible light, although Atalar suggests that they might look into making a version that could work with infrared cameras as well.

Atalar imagines that this device could be helpful in virtual and augmented reality settings, and could improve onboard sensing on autonomous platforms like robots, drones, and rovers. For example, a robot working in a warehouse needs to be able to understand how far away objects and potential obstacles are in order to navigate around safely.

“These [autonomous platforms] depend on algorithms to make decisions—the performance depends on the base that’s coming in from the sensors,” Atalar says. “You want cheap sensors, but you also want sensors that have high fidelity in perceiving the environment.”