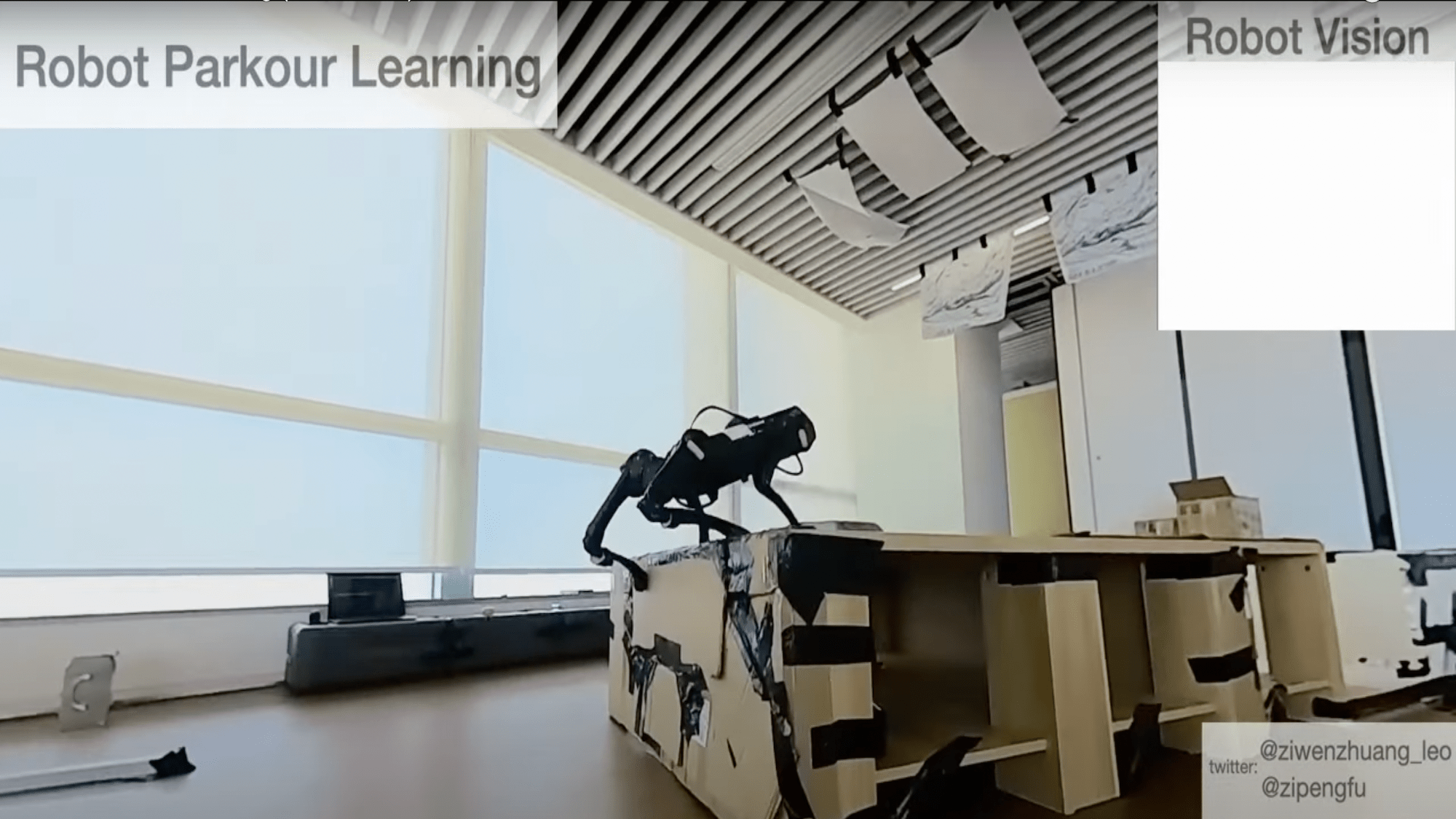

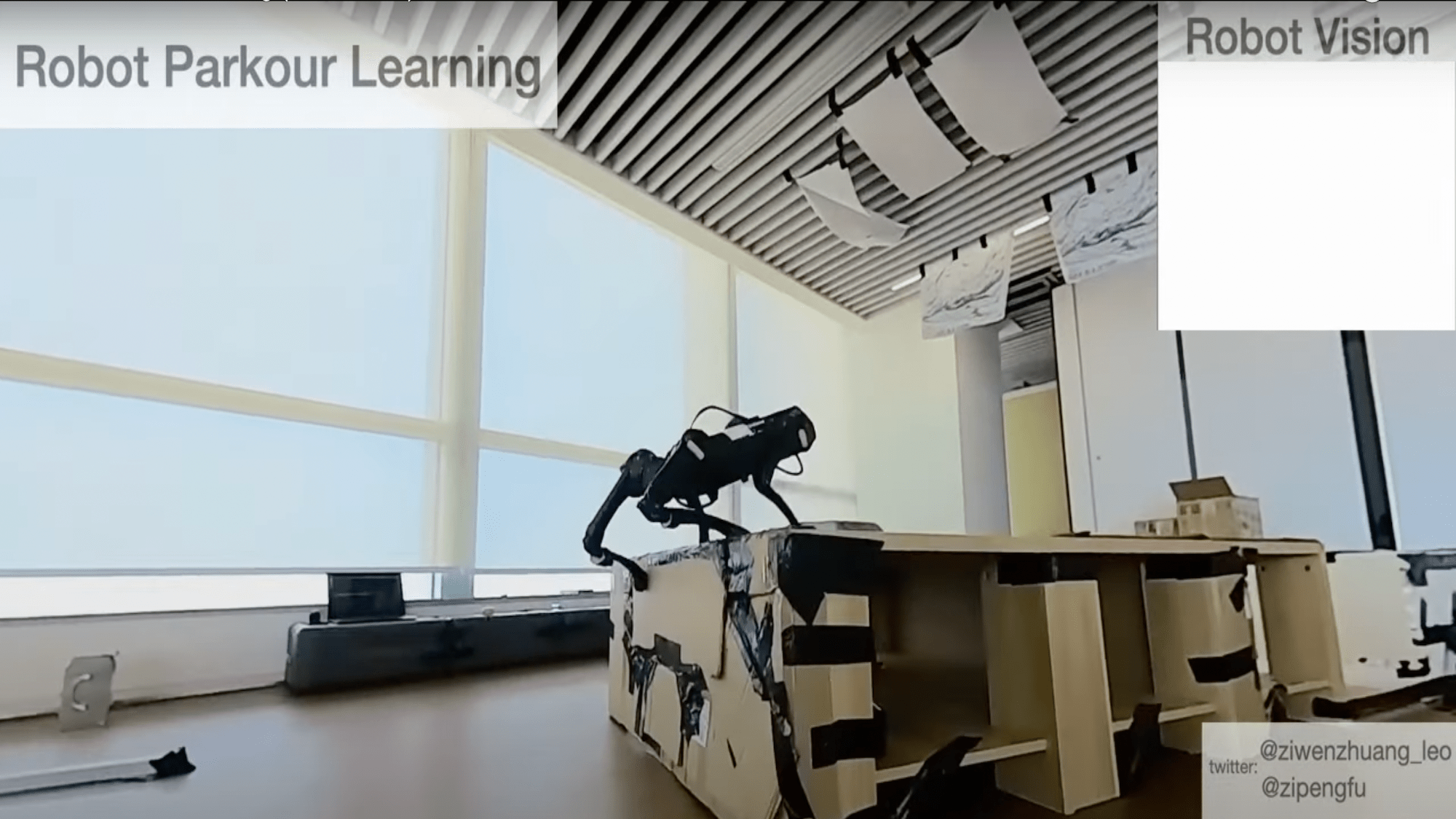

While bipedal human-like androids are a staple of sci-fi movies, four-legged “robodogs” are better suited to many potential real-world tasks, like rescuing people from burning buildings, flooded streets, or the freezing wilds. In a new paper due to be presented at the Conference on Robot Learning (CoRL) next month in Atlanta, researchers at Stanford University and Shanghai Qi Zhi Institute have proposed a novel, simplified machine learning technique for training a vision-based algorithm that lets (relatively) cheap, off-the-shelf robots climb, leap, crawl, and run around the real world. As the researchers claim, the robots can do “parkour” all by themselves.

Traditionally, teaching robots to navigate the world has been an expensive challenge. Boston Dynamics’ Atlas robots can dance, throw things, and parkour their way around complex environments, but they are the result of more than a decade of DARPA-funded research. As the researchers explain in the paper, “the massive engineering efforts needed for modeling the robot and its surrounding environments for predictive control and the high hardware cost prevent people from reproducing parkour behaviors given a reasonable budget.” However, recent advances in artificial intelligence have demonstrated that training an algorithm in a computer simulation and then installing it in a robot can be a cost-effective way to teach robots to walk, climb stairs, and mimic animals, so the researchers set out to do the same for parkour on low-cost hardware.

The researchers used two-stage reinforcement learning to train the parkour algorithm. In the first “soft dynamics” step, the virtual robots were allowed to penetrate and collide with the simulated objects but were encouraged—using a simple reward mechanism—to minimize penetrations as well as the mechanical energy necessary to clear each obstacle and move forward. The virtual robots weren’t given any instructions—they had to puzzle out how best to move forward for themselves, which is how the algorithm learns what does and doesn’t work.

In the second “hard dynamics” fine-tuning stage, the same reward mechanism was used but the robots were no longer allowed to collide with obstacles. Again, the virtual robots had to figure out what techniques worked best to proceed forward while minimizing energy expenditure. All this training allowed the researchers to develop a “single vision-based parkour policy” for each skill that could be deployed in real robots.
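To make the two-stage idea concrete, here is a minimal Python sketch of what such a reward might look like. It is not the formula from the paper: the `StepInfo` fields, the `parkour_reward` function, and every weight are hypothetical, chosen only to show how rewarding forward progress while penalizing mechanical energy and obstacle penetration could fit together.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class StepInfo:
    """One simulated time step (all field names are illustrative assumptions)."""
    forward_velocity: float             # m/s along the obstacle course
    joint_torques: Sequence[float]      # N*m, one entry per joint
    joint_velocities: Sequence[float]   # rad/s, one entry per joint
    penetration_depth: float            # metres of overlap with an obstacle


def mechanical_energy(info: StepInfo) -> float:
    """Approximate mechanical power as the sum of |torque * joint velocity|."""
    return sum(abs(t * w) for t, w in zip(info.joint_torques, info.joint_velocities))


def parkour_reward(
    info: StepInfo,
    w_forward: float = 1.0,       # hypothetical weight
    w_energy: float = 0.01,       # hypothetical weight
    w_penetration: float = 5.0,   # hypothetical weight
) -> float:
    """Reward moving forward; penalize energy use and passing through obstacles."""
    return (
        w_forward * info.forward_velocity
        - w_energy * mechanical_energy(info)
        - w_penetration * info.penetration_depth
    )


# "Soft dynamics": the simulator lets the robot overlap an obstacle,
# so the penetration term can be non-zero and lowers the reward.
soft_step = StepInfo(1.0, [2.0, -1.0], [3.0, 4.0], penetration_depth=0.1)

# "Hard dynamics": obstacles are solid, so the overlap is always zero
# and the same reward function applies unchanged.
hard_step = StepInfo(1.0, [2.0, -1.0], [3.0, 4.0], penetration_depth=0.0)

print(parkour_reward(soft_step), parkour_reward(hard_step))
```

In this sketch, the same function serves both stages. Only the simulator changes: once obstacles become solid, the penetration term simply stays at zero, and the policy has to clear them rather than pass through.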

And the results were impressive. Although the team was working with small robots that stand just over 10 inches tall, their relative performance stood out, especially given the simple reward system and virtual training program. The off-the-shelf robots were able to scale objects up to 15.75 inches high (1.53x their height), leap over gaps 23.6 inches wide (1.5x their length), crawl beneath barriers as low as 7.9 inches (0.76x their height), and tilt so they could squeeze through gaps a fraction of an inch narrower than their width.

According to an interview with the researchers in Stanford News, the biggest advance is that the new training technique enables the robodogs to act autonomously using just their onboard computer and camera. In other words, there’s no human with a remote control. The robots are assessing the obstacle they have to clear, selecting the most appropriate approach from their repertoire of skills, and executing it—and if they fail, they try again.

The researchers noted that the biggest limitation of their training method is that the simulated environments have to be manually designed. So, going forward, the team hopes to explore “advances in 3D-vision and graphics to construct diverse simulation environments automatically from large-scale real-world data.” That could enable them to train even more adventurous robodogs.

Of course, this Stanford team isn’t the only research group exploring robodogs. In the past year or two, we’ve seen quadrupedal robots of varying shapes and sizes that can paw open doors, climb walls and ceilings, sprint on sand, and balance along beams. But for all that, we’re still a while away from seeing rescue robodogs out in the wild. It seems labradors aren’t out of a job just yet.

See them in action, below: