Say you wake up to find that you’ve transformed into a six-legged insect. This might be a rather disruptive experience, but if you carry on, you’ll probably want to find out what your new body can and can’t do. Perhaps you’ll find a mirror. Perhaps, with a little bit of time, you might be able to acclimatize to this new shape.

This fantastical concept isn’t too different from a principle that some engineers want to harness to build better robots. As a demonstration, one group has created a robot that can learn, through practice, what its own body can do.

“The idea is that robots need to take care of themselves,” says Boyuan Chen, a roboticist at Duke University in North Carolina. “In order to do that, we want a robot to understand their body.” Chen and his colleagues published their work in the journal Science Robotics on July 13.

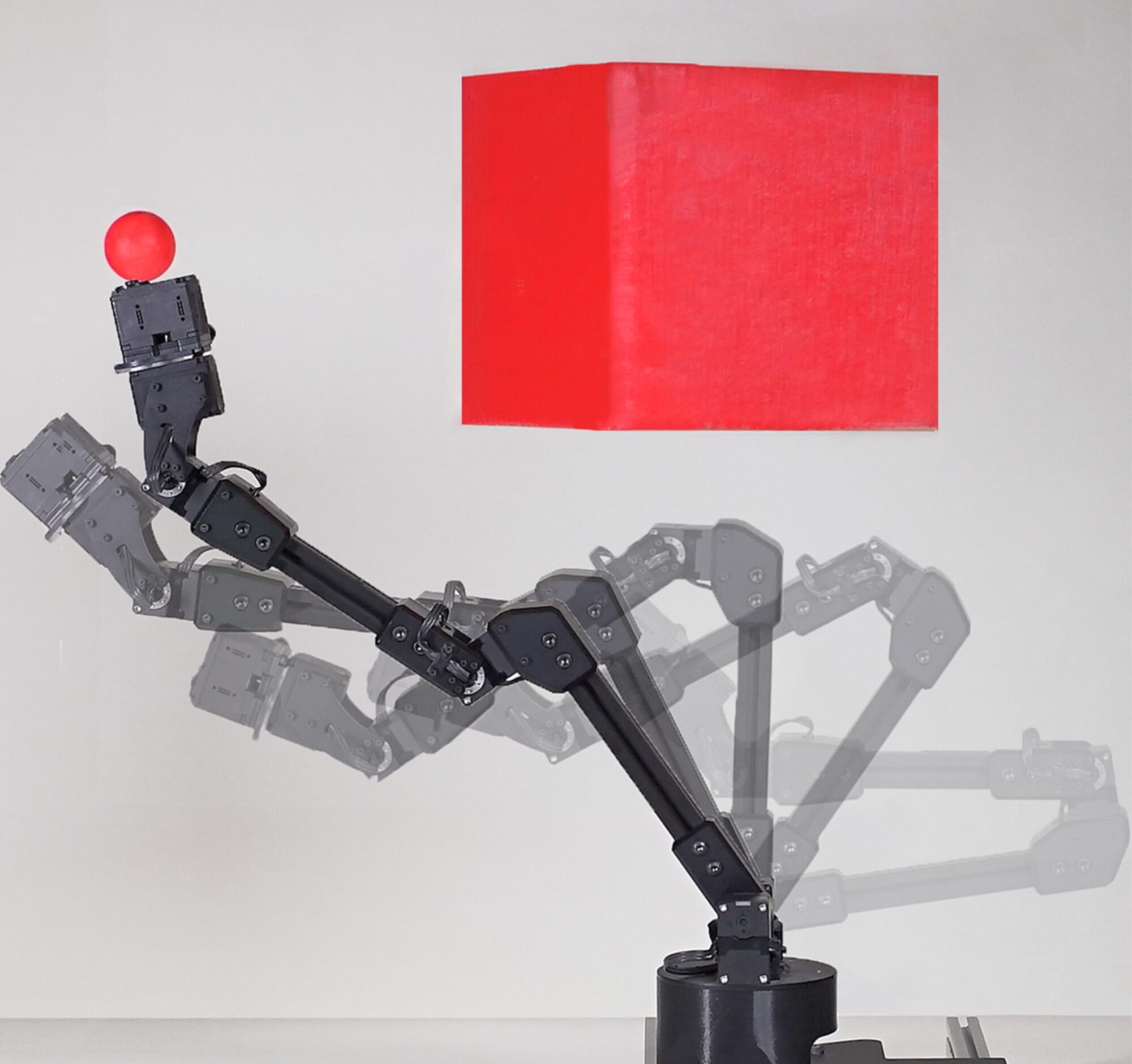

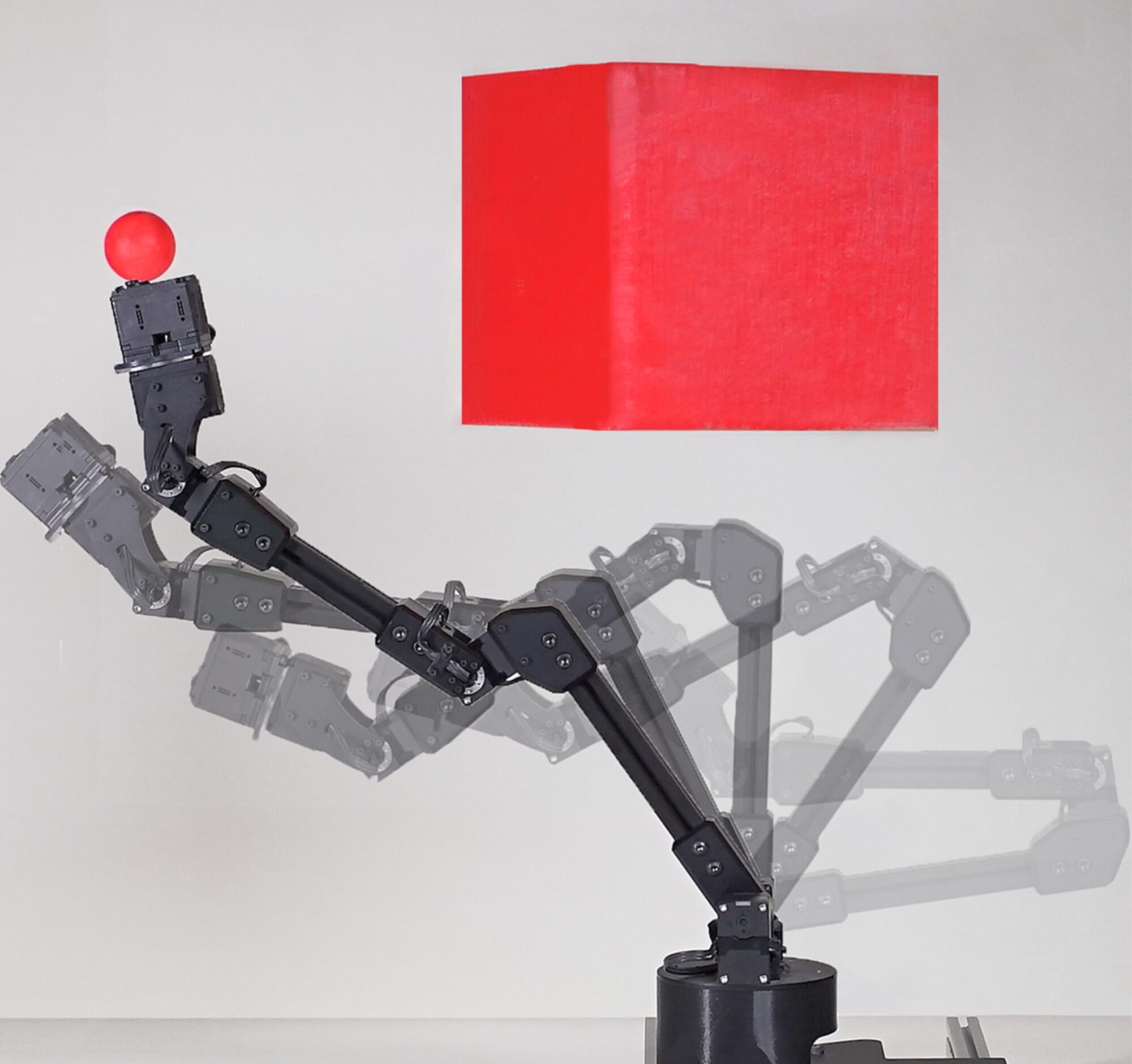

Their robot is relatively simple: a single arm mounted atop a table, surrounded by a bank of five video cameras. The robot had access to the camera feeds, allowing it to see itself as if in a room full of mirrors. The researchers instructed it to perform the basic task of touching a nearby sphere.

By means of a neural network, the robot pieced together a fuzzy model of what it looked like, almost like a child scribbling a self-portrait. That model also helped human observers anticipate the machine’s actions. If, for instance, the robot thought its arm was shorter than it actually was, its handlers could stop it from accidentally striking a bystander.

Like infants wiggling their limbs, the robot began to understand the effects of its movements. If it rotated its end or moved it back and forth, it would know whether or not it would strike the sphere. After about three hours of training, the robot understood the limitations of its material shell, enough to touch that sphere with ease.
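
To make the idea concrete, here is a toy sketch of learning a self-model by trial and observation. It is not the researchers’ method: their robot used a neural network and five camera feeds, while this example assumes a hypothetical two-joint arm on a flat plane and simply recalls the most similar poses it has already tried. Every name in it (`observe_tip`, `babble`, `predict_tip`, `would_touch`) and every number (link lengths, trial count) is invented for illustration.

```python
import math
import random

# Hypothetical two-link planar arm. These lengths are assumptions,
# not measurements of the robot described in the study.
LINK_LENGTHS = (1.0, 0.7)


def observe_tip(angles):
    """Stand-in for the camera feeds: where the arm's tip really ends up."""
    x = y = total = 0.0
    for length, angle in zip(LINK_LENGTHS, angles):
        total += angle
        x += length * math.cos(total)
        y += length * math.sin(total)
    return (x, y)


def babble(n_trials, rng):
    """Try random joint angles and record what was observed each time."""
    samples = []
    for _ in range(n_trials):
        angles = (rng.uniform(-math.pi, math.pi), rng.uniform(-math.pi, math.pi))
        samples.append((angles, observe_tip(angles)))
    return samples


def angle_diff(a, b):
    """Smallest signed difference between two angles, in radians."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi


def predict_tip(samples, angles, k=3):
    """Toy self-model: average the tip positions seen in the k most similar poses."""
    def pose_distance(sample):
        return sum(angle_diff(a, b) ** 2 for a, b in zip(sample[0], angles))

    nearest = sorted(samples, key=pose_distance)[:k]
    x = sum(tip[0] for _, tip in nearest) / len(nearest)
    y = sum(tip[1] for _, tip in nearest) / len(nearest)
    return (x, y)


def would_touch(samples, angles, sphere_center, sphere_radius):
    """Ask the self-model, before moving, whether a pose would reach the sphere."""
    return math.dist(predict_tip(samples, angles), sphere_center) <= sphere_radius


if __name__ == "__main__":
    rng = random.Random(0)
    experience = babble(5000, rng)
    plan = (0.4, -0.9)
    print("predicted tip:", predict_tip(experience, plan))
    print("observed tip: ", observe_tip(plan))
```

The planning functions never call the arm’s true geometry; they consult only remembered observations. That is the sense in which a self-model lets a machine rehearse an action before committing to it.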

“Put simply, this robot has an inner eye and an inner monologue: It can see what it looks like from the outside, and it can reason with itself about how actions it has to perform would pan out in reality,” says Josh Bongard, a roboticist at the University of Vermont, who has worked with the paper’s authors in the past but was not an author.

Robots knowing what they look like isn’t, in itself, new. Around the time of the Apollo moon landings, scientists in California built Shakey the Robot, a boxy contraption that would have been at home in an Outer Limits episode. Shakey came preloaded with a model of itself, helping the primitive robot make decisions.

Since then, it’s become fairly common practice for engineers to program a robot with a model of itself or its environment, one that the robot can consult to make decisions. That isn’t always advantageous, because a robot working from a preloaded model isn’t very adaptable. That’s fine if the robot has one or a few preset tasks, but for robots with a more general purpose, the researchers think they can do better.

More recently, researchers have tried training robots in virtual reality. The robots learn maneuvers in a simulation that they can put into practice in meatspace. It sounds elegant, but it isn’t always practical. Running a simulation and having robots learn inside it demands a heavy dose of computational power, like many other forms of AI. The costs, both financial and environmental, add up.

Having a robot teach itself in real life, on the other hand, opens many more doors. It’s less computationally demanding, and isn’t unlike how we learn to view our own changing bodies. “We have a coherent understanding of our self-body, what we can and cannot do, and once we figure this out, we carry over and update the abilities of our self-body every day,” Chen says.

That process could aid robots in environments that are inaccessible for humans, like deep underwater or outside Earth’s atmosphere. Even robots in common settings might make use of such abilities. A factory robot, say, might be able to determine if there’s a malfunction and adjust its routine accordingly.

These researchers’ arm is but a rudimentary first step to that goal. It’s a far cry from the body of even a simple animal, let alone the body of a human.

The machine has only four degrees of freedom, meaning it can move in only four independent ways. The scientists are now working on a robot with twelve degrees of freedom. The human body has hundreds. And a robot with a rigid exterior is a vastly different beast from one with a softer, flexible form.
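
A back-of-the-envelope sketch shows why each added degree of freedom matters so much. It assumes, purely for illustration, that every joint can settle into one of ten distinct positions; real joints move continuously, and the function name `pose_count` is made up for this example.

```python
def pose_count(degrees_of_freedom, positions_per_joint=10):
    """Distinct poses if each joint can sit at a fixed number of positions."""
    return positions_per_joint ** degrees_of_freedom


for dof in (4, 12):
    print(f"{dof} degrees of freedom: {pose_count(dof):,} poses")
```

Under that assumption, the four-jointed arm has ten thousand poses to explore, while a twelve-jointed machine has a trillion, far too many to try one by one.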

“The more complex you are, the more you need this self-model to make predictions. You can’t just guess your way through the future,” says Hod Lipson, a mechanical engineer at Columbia University and one of the paper’s authors. “We’ll have to figure out how to do this with increasingly complex systems.”

Roboticists are optimistic that the machine learning that guided this robot can be applied to more complex machines. Bongard says that the methods the robot used to learn have already been shown to scale up well and could, potentially, extend to other things, too.

“If you have a robot that can now build a model of itself with little computational effort, it could build and use models of lots of other things, like other robots, autonomous cars…or someone reaching for your off switch,” says Bongard. “What you do with that information is, of course, up to you.”

For Lipson in particular, making a robot that can understand its own body isn’t just a matter of building smarter robots in the future. He believes his group has created a robot that understands the limitations—and powers—of its own body.

We might think of self-awareness as the ability to think about one’s own existence. But as you might know if you’ve been around an infant lately, there are other forms of self-awareness, too.

“To me,” Lipson says, “this is a first step towards sentient robotics.”