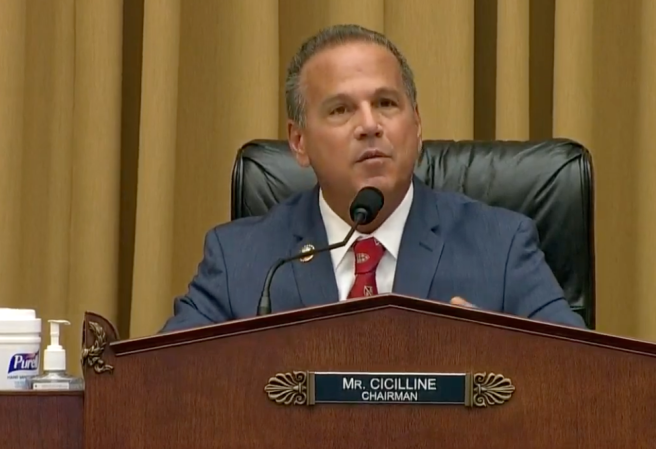

The Federal Trade Commission, generally not known for flowery rhetoric or philosophical musings, took a moment on Monday to publicly ponder, “What exactly is ‘artificial intelligence’ anyway?” in a vivid blog post from Michael Atleson, an attorney within the FTC’s Division of Advertising Practices.

After summarizing humanity’s penchant for telling stories about bringing things to life “imbue[d] with power beyond human capacity,” he asks, “Is it any wonder that we can be primed to accept what marketers say about new tools and devices that supposedly reflect the abilities and benefits of artificial intelligence?”


Although Atleson ultimately leaves the broader definition of “AI” largely open to debate, he makes one thing clear: whatever else it may be, the FTC knows exactly what it is, and grifters are officially on notice. “[I]t’s a marketing term. Right now it’s a hot one,” writes Atleson. “And at the FTC, one thing we know about hot marketing terms is that some advertisers won’t be able to stop themselves from overusing and abusing them.”

The FTC’s official statement, while somewhat out of the ordinary, fits squarely within the new Wild West era of AI: a time when every day brings fresh headlines about Big Tech’s latest large language models, “hidden personalities,” dubious claims of sentience, and the inevitable scams that follow. Atleson and the FTC go so far as to lay out an explicit list of what they’ll be watching for as companies continue to fire off breathless press releases about their purportedly revolutionary AI breakthroughs.

“Are you exaggerating what your AI product can do?” the Commission asks, warning businesses that such claims could be deemed “deceptive” if they lack scientific evidence or only apply to very specific users and conditions. Companies are also strongly encouraged to refrain from touting AI as a way to justify higher prices or labor decisions, and to take careful risk-assessment precautions before rolling out products to the public.


Blaming third-party developers for biases and unwanted results, or retroactively bemoaning “black box” programs beyond your understanding, won’t be a viable excuse to the FTC, and could open you up to serious litigation headaches. Finally, the FTC asks perhaps the most important question of the moment: “Does the product actually use AI at all?” Which… fair enough.

While this isn’t the first time the FTC has issued industry warnings, even warnings about AI claims, it’s a pretty stark indicator that federal regulators are reading the same headlines the public is right now, and they don’t seem pleased.