Earlier this month, Apple announced it would be taking additional steps to limit the spread of child sexual abuse material (CSAM). But although CSAM is illegal, the measures Apple is taking to fight it have been condemned by a rapidly growing number of tech experts, advocates, activists, academics and lawyers.

What did Apple announce?

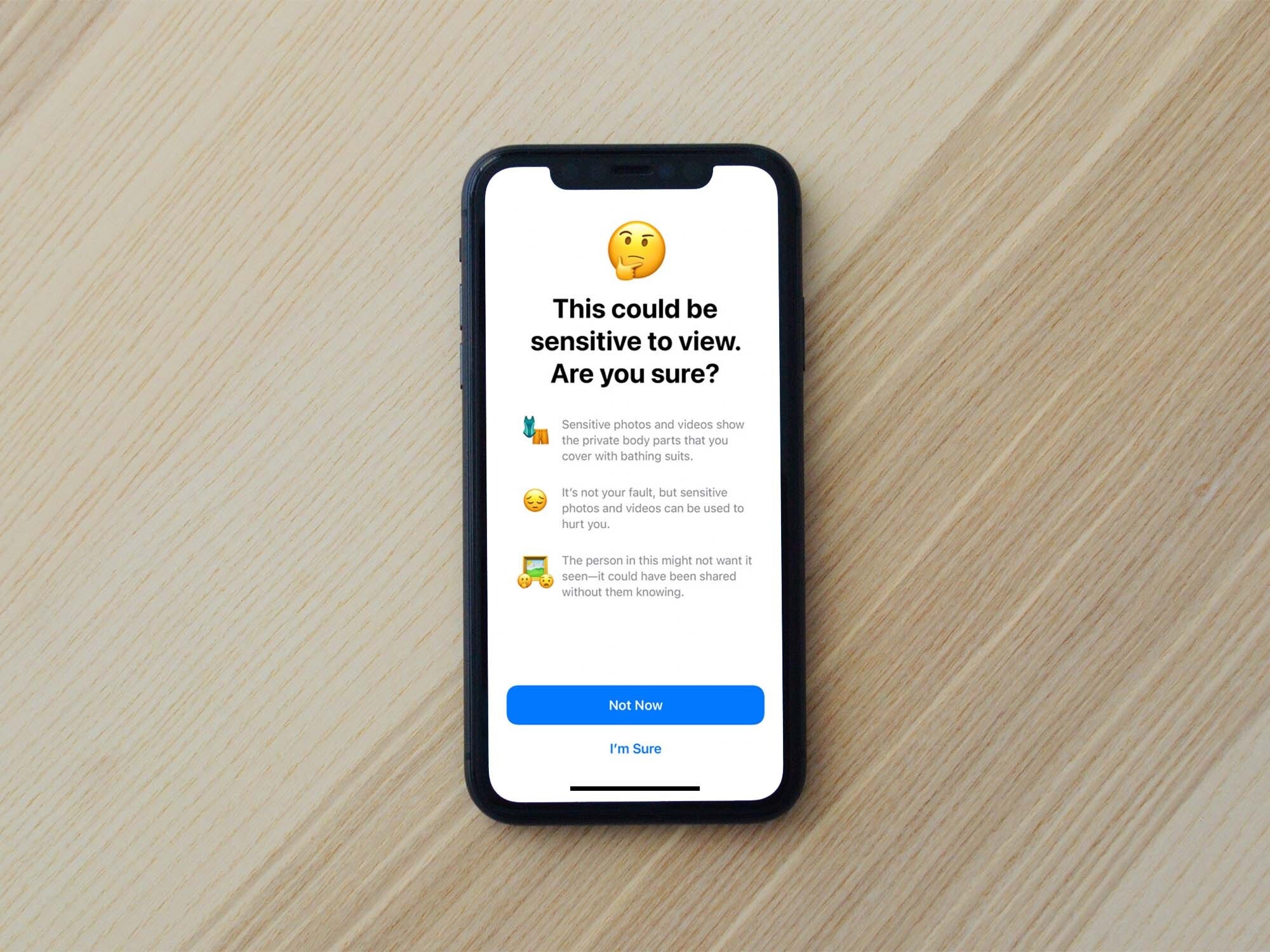

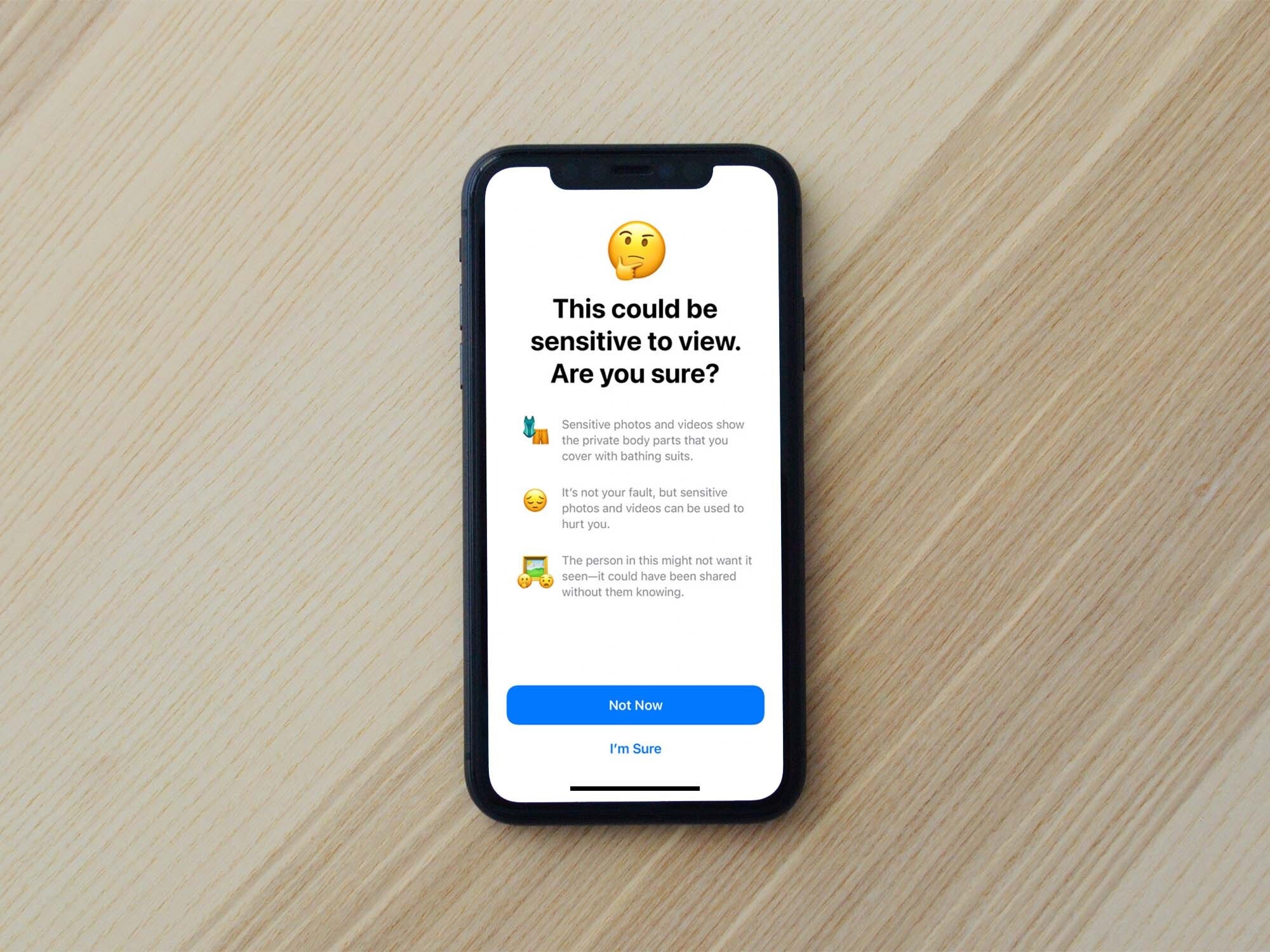

Apple announced three new features, set to be implemented at some point in 2021. One involves scanning photos sent through the iMessage app for sexually explicit material. Parents could opt into a system that would alert them if their children were sending or receiving sexually explicit images, such as nudes. Parents would not be able to see the images themselves, but they would be notified if the child chose to view them anyway.
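
The parental-notification flow described above boils down to a small decision rule. The following is a minimal Python sketch of that rule, not Apple's code: `ChildAccount` and `handle_image` are hypothetical names, and the `is_explicit` flag simply stands in for the verdict of Apple's on-device classifier, whose inner workings the article does not describe.

```python
from dataclasses import dataclass


@dataclass
class ChildAccount:
    # Hypothetical model of a child's account; only the opt-in flag matters here.
    parents_opted_in: bool


def handle_image(account: ChildAccount, is_explicit: bool, child_chooses_to_view: bool):
    """Return (image_shown, parents_notified) for a single image.

    is_explicit is a stand-in for the classifier's verdict (an assumption,
    not Apple's actual interface).
    """
    if not is_explicit:
        # Ordinary images pass through with no warning and no notification.
        return True, False
    if not child_chooses_to_view:
        # The child is warned and declines: nothing is shown, nobody is told.
        return False, False
    # The child views it anyway: parents get a notification only if they
    # opted in, and they never receive the image itself.
    return True, account.parents_opted_in
```

Note that in this sketch the parents only ever learn a yes/no fact (the child viewed a flagged image), which mirrors the article's point that parents would be informed without seeing the material.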

Another feature would let Apple detect CSAM on people's phones if they sync their photo library with iCloud, Apple's cloud service. Photos and videos uploaded to iCloud would be checked for matches against known CSAM images in the database of the National Center for Missing and Exploited Children (NCMEC). If an account accumulated enough matches, an Apple moderator would be alerted and further steps, such as disabling the account, would be taken.
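
The "enough matches" rule can be illustrated with a short Python sketch of threshold-based matching against a set of known image fingerprints. This is an illustration under stated assumptions, not Apple's system: `fingerprint`, `count_matches`, `needs_human_review` and `MATCH_THRESHOLD` are hypothetical names, the threshold value is invented (the article says only "enough matches"), and an exact SHA-256 hash stands in for the perceptual hashing and cryptographic protections a real deployment would likely use.

```python
import hashlib

# Hypothetical threshold; the real number is not given in the article.
MATCH_THRESHOLD = 3


def fingerprint(image_bytes: bytes) -> str:
    # Stand-in fingerprint: an exact cryptographic hash. A production system
    # would more plausibly use a perceptual hash so that resized or
    # re-encoded copies of a known image still match.
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(uploads, known_hashes) -> int:
    # Count uploaded images whose fingerprint appears in the known-image set.
    return sum(1 for image in uploads if fingerprint(image) in known_hashes)


def needs_human_review(uploads, known_hashes, threshold: int = MATCH_THRESHOLD) -> bool:
    # Escalate to a human moderator only once the match count reaches the threshold.
    return count_matches(uploads, known_hashes) >= threshold
```

Requiring several matches before a person looks at anything is meant to keep one accidental match from flagging an account. The trade-off with the exact hash used here is the opposite problem: a re-saved copy of a known image would not match at all.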

Finally, Siri and Search would provide interventions and resources to people searching for CSAM, and would offer guidance to people who asked Siri how to file a report on CSAM.

What has the reaction been like?

Apple’s latest moves have been endorsed by computer scientist David Forsyth and cryptographers Mihir Bellare and Benny Pinkas, and have also garnered praise from child protection groups. “Apple’s expanded protection for children is a game changer,” John Clark, CEO of NCMEC, said in a statement.

But more than 7,000 people, among them numerous academic and tech experts as well as former Apple employees, signed an open letter protesting the move. The "proposal introduces a backdoor that threatens to undermine fundamental privacy protections for all users of Apple products," the letter said. In a leaked internal memo, an Apple software VP acknowledged concerns about how the public would receive the plan, saying "more than a few [people] are worried about the implications."

There was so much confusion that Apple had to publish an additional FAQ addressing people's concerns. "Let us be clear, this technology is limited to detecting CSAM stored in iCloud and we will not accede to any government's request to expand it," the company wrote. Apple has also released another document, the Security Threat Model Review of Apple's Child Safety Features.

Edward Snowden, Epic CEO Tim Sweeney and politician Brianna Wu also voiced their concerns, as did the Open Privacy Research Society, the Center for Democracy and Technology and the Electronic Frontier Foundation.

“Instead of focusing on making it easy for people to report content that’s shared with them, Apple has built software that can scan all the private photos on your phone,” WhatsApp head Will Cathcart tweeted. “That’s not privacy.”

Previously, WhatsApp and Facebook Messenger took a strong stance against giving law enforcement a way to see encrypted messages.

What are the concerns?

Apple positions itself at the vanguard of privacy, with ad campaigns and billboards reminding consumers of the ways Apple helps them protect their privacy. The company has also called privacy a "human right." But privacy experts say these new features would constitute a significant about-face. "Apple has in the past said that it's not in the business of searching the content of its users' phones," says David Greene, senior staff attorney and civil liberties director at the Electronic Frontier Foundation (EFF). "The big change is that this will actually be happening now."

Greene says that Apple providing a means to search a phone for objectionable material, potentially against the will of a user (as it applies to photos uploaded to iCloud), represents “a significant change in Apple’s relationship with iPhone users.” He’s not the only one expressing disappointment in Apple’s latest announcement. “For the first time since the 1990s we were taking our privacy back,” cryptographer Matthew Green, of Johns Hopkins University, tweeted. “Today we’re on a different path.”

While social media sites like Reddit and Facebook also use image-scanning software to detect nudity, and are legally required to report any CSAM found on their sites, Apple has long been hesitant to scan people's phones. (The scans social media companies run examine files stored on their servers, whereas Apple's new system examines files on people's phones that are being uploaded to iCloud.)

In the past, Apple has stated that it cannot search for content on people's phones in a way that doesn't compromise user privacy. In legal papers opposing the FBI's request to create a backdoor into the iPhone of a shooter in the San Bernardino terrorist attack, Apple noted that, however compelling a request might be, it would always be followed by another request from the government.

Greene warns that this tool would be a powerful one to hand to both democratic and non-democratic governments, which may have their own agendas about what kind of content they want Apple to detect on users' phones. He also noted that if these features are implemented, existing national laws, such as Indian laws that require companies to deploy technological measures to detect other types of content, would be triggered, giving Apple new responsibilities.

Could the tool be misused?

Despite its reputation for protecting consumer privacy, Apple has made concessions to foreign governments, for example by selling iPhones without FaceTime in the United Arab Emirates.

“Once you build these kinds of technologies and tools governments will at best ask nicely, at worst return with a court order, for you to expand the detection program to other types of content,” says Kendra Albert, an instructor at Harvard’s Cyberlaw Clinic. In other words, experts are concerned Apple’s CSAM strategy could represent a slippery slope for user privacy.

And the concern isn’t just about CSAM content. Albert noted that there are multiple reasons, from research to analysis, why someone might have, for example, content associated with terrorism on their devices. This could lay the groundwork “for really significant surveillance on other topics,” they said.

Another concern is how well the machine-learning algorithm trained to detect nudity in iMessage photos will work. Other tech companies have historically struggled to detect sexually explicit content algorithmically. After Tumblr banned adult content, photos of sand dunes were flagged, and on Instagram, photos of overweight men and women are often flagged as inappropriate. Facebook's nudity rules have often been criticized as inconsistent.

"Apple, by building this, has basically waded into this giant set of problems around deciding what is nudity or sexually explicit material," says Albert, referring to the iMessage feature. They also noted that the feature could out children to their parents or put queer or trans youth at risk, especially if they didn't feel safe talking with their parents about their sexuality.

“If I had a queer 12-year-old in front of me, I would tell them to use Signal,” they said, referring to the encrypted messaging service that’s garnered accolades for its robust privacy protections.