In one lab, a study participant, the answerer, is shown an image of an object on a computer screen. He wears a cap connected to an electroencephalography (EEG) machine that monitors his brain waves.

A second participant, the asker, sits in a different lab on campus. Her job is to figure out which image the answerer was shown. A magnetic coil is positioned almost touching her skull. She selects questions from a list on a touchscreen to narrow down the object, 20 Questions-style.

The question she picks appears on a screen in front of the answerer. Two LED lights, one to the left of the screen and one to the right, flash at different frequencies; one is labeled ‘yes’ and the other ‘no’. He answers by staring at the appropriate light. Chantel Prat, a psychologist at the University of Washington and a co-author on the paper, told Popular Science that the computer can tell which light he is looking at because lights flickering at different rates trigger different patterns of brain activity.
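For the technically curious, the effect Prat describes resembles what researchers call steady-state visual evoked potentials (SSVEP): staring at a light that flickers at a fixed rate produces brain activity at that same rate, so comparing the signal’s power at the two flicker frequencies reveals which light someone is watching. Below is a minimal Python sketch of that comparison on a synthetic signal. The flicker rates (12 and 15 Hz), the 256 Hz sampling rate and the helper names are all hypothetical; this is not the study’s actual decoding software.

```python
import numpy as np

# Hypothetical flicker rates for the two LEDs (not the study's actual values).
YES_HZ = 12.0
NO_HZ = 15.0
FS = 256  # assumed EEG sampling rate, in samples per second


def band_power(signal, fs, target_hz, half_width=0.5):
    """Sum the spectral power within +/- half_width Hz of target_hz."""
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    mask = np.abs(freqs - target_hz) <= half_width
    return spectrum[mask].sum()


def decode_answer(signal, fs=FS):
    """Return 'yes' or 'no' depending on which flicker frequency dominates."""
    yes_power = band_power(signal, fs, YES_HZ)
    no_power = band_power(signal, fs, NO_HZ)
    return "yes" if yes_power > no_power else "no"


def simulate_eeg(gaze_hz, seconds=2.0, fs=FS, noise=1.0, seed=0):
    """Synthetic recording: a response at the attended flicker rate plus Gaussian noise."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(seconds * fs)) / fs
    return np.sin(2 * np.pi * gaze_hz * t) + noise * rng.standard_normal(t.size)


if __name__ == "__main__":
    # Participant stares at the 'yes' light, then at the 'no' light.
    print(decode_answer(simulate_eeg(YES_HZ)))
    print(decode_answer(simulate_eeg(NO_HZ)))
```

Real recordings are far noisier, and practical decoders may also look at harmonics of each flicker rate; the sketch only shows why two distinct rates make the two answers separable.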

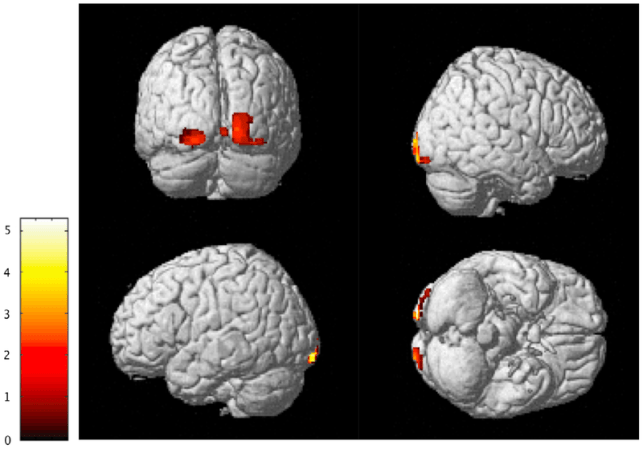

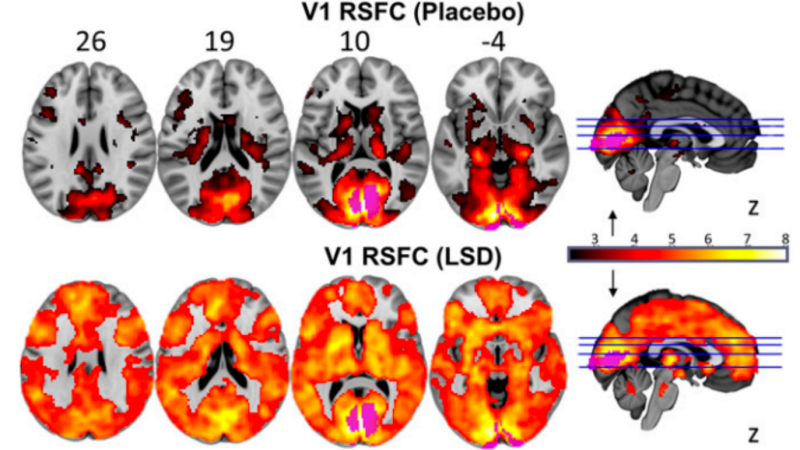

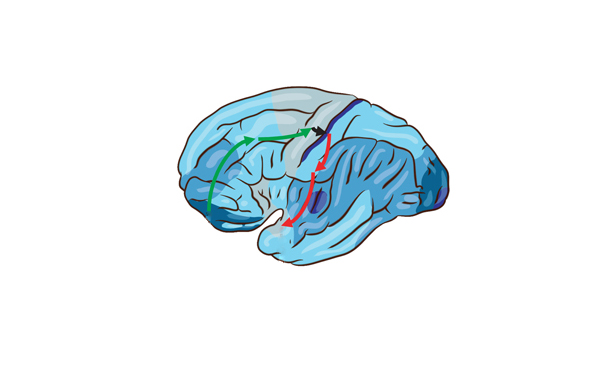

The computer algorithm sends its interpretation of the answerer’s brain patterns over the Internet to the magnetic coil in the asker’s lab. If the answer is yes, the coil releases a pulse that stimulates the asker’s visual cortex. The stimulation triggers a phosphene, which can look like a flash of light, a blob, a blur, or a wavy line. If the answer is no, the coil still makes the same sounds and causes the same scalp-tingling sensations, but its pulse is not strong enough to cause a phosphene.

The asker notes whether or not she saw a phosphene, then moves on to the next question. It’s a game of 20 Questions played brain to brain.
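The yes/no round trip can be modeled as a toy simulation in Python. The key design choice the article describes is that the coil fires for both answers, so the sound and tingle give nothing away; only whether the pulse crosses the asker’s phosphene threshold carries the answer. The intensity values, threshold and function names below are hypothetical placeholders, not figures or code from the study.

```python
from dataclasses import dataclass

# Hypothetical stimulation levels, expressed relative to the asker's
# phosphene threshold (the study's actual intensities are not given here).
PHOSPHENE_THRESHOLD = 1.0
YES_INTENSITY = 1.2  # above threshold: strong enough to evoke a phosphene
NO_INTENSITY = 0.5   # below threshold: same click and tingle, no phosphene


@dataclass
class CoilPulse:
    intensity: float


def coil_command(decoded_answer: str) -> CoilPulse:
    """Map the answerer's decoded 'yes'/'no' to a pulse; the coil fires either way."""
    return CoilPulse(YES_INTENSITY if decoded_answer == "yes" else NO_INTENSITY)


def asker_sees_phosphene(pulse: CoilPulse) -> bool:
    """The asker perceives a phosphene only when the pulse reaches her threshold."""
    return pulse.intensity >= PHOSPHENE_THRESHOLD


def play_round(decoded_answer: str) -> str:
    """One question: decoded answer -> coil pulse -> the asker's interpretation."""
    return "yes" if asker_sees_phosphene(coil_command(decoded_answer)) else "no"


if __name__ == "__main__":
    for answer in ["yes", "no", "no", "yes"]:
        print(answer, "->", play_round(answer))
```

Because a pulse is delivered on every question, the asker cannot infer the answer from the coil’s noise alone; she has to rely on what she sees.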

In the study, published Wednesday in PLOS One, participants guessed the correct object 72 percent of the time. To rule out chance or cheating, the researchers also ran control games in which the participants thought they were communicating but no data was actually sent. In those games, they guessed correctly only 18 percent of the time.

This is a big step forward from the group’s 2014 experiment, in which they transmitted the brain signal for a hand movement to another person’s brain, causing the second person’s hand to move. In this study, the asker consciously perceives information coming from the other person’s brain and makes a decision based on it. It’s real communication.

There are limitations. The answerer isn’t simply thinking the word ‘yes,’ the way most of us imagine mind-to-mind communication; he is staring at a light that has been assigned to mean ‘yes.’ And for now, the asker can’t respond brain-to-brain, though the team is working on that. The team is also experimenting with ways to move beyond binary yes-or-no answers. Prat said different kinds of stimulation could allow more answer options, such as quadrants of space or different visual or auditory cues and patterns.

But these are all still symbolic patterns of light or sensation that must be translated into meaning. Spoken language is no different: words and letters only symbolize intent or emotion, and often fail to capture what we really mean. This type of brain linking could one day change that.

Prat said, “Imagine if you could just transmit the feeling of experiencing something sweet.” She said scientists can already identify which object a person is thinking of, given a list of possibilities. The difficulty lies in recreating those abstract brain signals in another person, especially for anything more complicated than an object.

If all this has you reaching for a tinfoil hat, don’t worry. “People get nervous that others could read their thoughts without them being aware,” said Prat. “It’s not possible that someone could be doing this to you without you knowing about it and consenting to it.” The equipment is expensive, bulky and very finicky. Moving the magnetic coil even a quarter inch away from the asker’s skull nullified the effect, and both participants had to be actively focusing on communicating.

The next step for Prat’s team is to test more immediate, practical applications for the technology, like yoking the brains of high-achieving students with those of struggling students. They want to see if they can boost learning by signaling the brain that it is time to pay attention. “Hopefully we will run that experiment in the next six months,” she said.