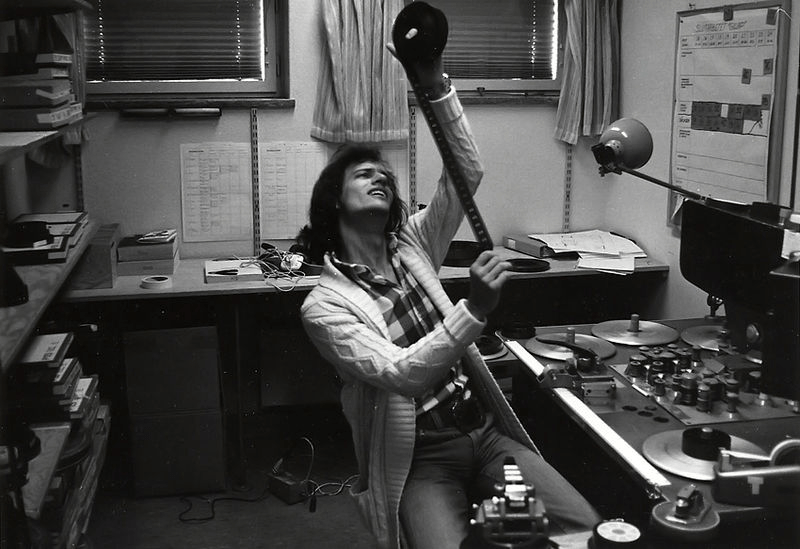

Who needs to see two entire minutes of your kid playing with an iPad, anyway? Computer science doctoral student Bin Zhao claims he never even watches his own recordings. “I have a lot of videos on my phone, but the reality is that I almost never look back to those videos,” he tells Popular Science. “The main reason is that the video itself might be five or 10 minutes long.”

Zhao and his advisor, Eric P. Xing of Carnegie Mellon University, have come up with an interesting solution to this problem. They’ve created an algorithm that recognizes the boring parts of videos and edits them out. The final product is like a little highlight reel. Users can even specify how long they want the reel to be (say, 30 seconds). Much more digestible. “Our motivation is that people don’t want to look at the original video,” Zhao says.
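The article doesn't say how the software trims a reel to the length a user asks for. One plausible approach, sketched below purely as an illustration, assumes every segment already carries an "interestingness" score (a hypothetical input). It greedily keeps the highest-scoring segments that still fit the time budget, then plays them back in their original order. The function name `pick_highlights` and the `(start, duration, score)` tuple layout are invented for this example.

```python
from typing import List, Tuple

# (start time in seconds, duration in seconds, hypothetical interestingness score)
Segment = Tuple[float, float, float]


def pick_highlights(segments: List[Segment], budget_s: float) -> List[Segment]:
    """Greedy sketch: keep the best-scoring segments that fit in budget_s seconds."""
    chosen: List[Segment] = []
    used = 0.0
    # Consider the most interesting segments first.
    for seg in sorted(segments, key=lambda s: s[2], reverse=True):
        _start, duration, _score = seg
        if used + duration <= budget_s:
            chosen.append(seg)
            used += duration
    # Play the kept segments back in chronological order.
    return sorted(chosen, key=lambda s: s[0])
```

With a 30-second budget and segments `[(0, 10, 0.1), (10, 10, 0.9), (20, 15, 0.5), (35, 10, 0.7)]`, the sketch keeps the segments starting at 0, 10 and 35 seconds. The 15-second segment would push the total past 30 seconds, so it is skipped even though it outscores the first segment. A greedy pass is simple but not always optimal; an exact answer would need a knapsack-style search.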

Zhao and Xing aren’t the first computer scientists to try to automatically recognize the interesting or important parts of a video. Many researchers and companies are working on software that spots unusual activity in surveillance videos while it’s happening. At least one company says it sells a system that can do this, but research is ongoing. A program that recognizes important scenes could also be a boon for social media companies: Imagine being able to make condensed, snappy videos to share with your Internet friends.


The new algorithm works by building a “dictionary” to explain what it sees as it processes a video. At every moment, it asks itself, “Can I explain what’s happening now with my dictionary?” If the answer is no, that indicates something new and exciting is happening in the video, so the algorithm flags that moment. Because it works this way, the algorithm doesn’t need to see the whole video before it starts putting together its highlight reel. That, along with the coding techniques Zhao and Xing used, helps the algorithm run faster.
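To make the "can I explain this with my dictionary?" test concrete, here is a toy sketch. It is not Zhao and Xing's actual method. It assumes each video segment has already been turned into a numeric feature vector (a hypothetical preprocessing step). It also swaps in a simple stand-in technique: the "dictionary" is a growing set of orthonormal directions, and "explaining" a segment means projecting it onto them. A segment whose leftover (unexplained) part is large relative to its size counts as new. That segment gets flagged, and its new direction is added to the dictionary. Because the loop looks at each segment once, in order, it never needs the whole video up front. The names `novel_segments` and `threshold` are invented for illustration.

```python
import math
from typing import List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def unexplained_part(x: Sequence[float], atoms: List[Vector]) -> Vector:
    """Remove whatever the dictionary can explain (the projection onto its
    orthonormal atoms) and return what is left over."""
    r = list(x)
    for q in atoms:
        c = dot(r, q)
        r = [ri - c * qi for ri, qi in zip(r, q)]
    return r


def novel_segments(features: Sequence[Sequence[float]], threshold: float = 0.5) -> List[int]:
    """Stream over per-segment feature vectors once, in order, and return the
    indices of segments the current dictionary could not explain well."""
    atoms: List[Vector] = []  # the growing toy "dictionary"
    highlights: List[int] = []
    for i, x in enumerate(features):
        norm_x = math.sqrt(dot(x, x))
        if norm_x == 0.0:
            continue  # an all-zero feature vector carries no information
        r = unexplained_part(x, atoms)
        norm_r = math.sqrt(dot(r, r))
        # Relative reconstruction error: 0 means fully explained, 1 means entirely new.
        if norm_r / norm_x > threshold:
            highlights.append(i)
            # Learn from the surprise: add the new direction to the dictionary.
            atoms.append([ri / norm_r for ri in r])
    return highlights
```

For example, `novel_segments([[1, 0, 0], [2, 0, 0], [0, 1, 0], [1, 1, 0], [0, 0, 3]])` returns `[0, 2, 4]`. The second and fourth segments can be built entirely from what the dictionary has already seen, so they are not flagged. A real system would use richer video features and cap the dictionary's size, and the researchers' own coding scheme may differ considerably.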

Zhao says his new algorithm is unusually fast and unusually human-like in the scenes it chooses to excerpt. It processes a one-hour video in one to two hours, versus the 10 to 20 hours required by similar algorithms published in the scientific literature. To test whether the algorithm picks “interesting” scenes the way a person would, Zhao and Xing asked three people to watch videos and choose segments to highlight. The computer scientists then checked how closely the human and algorithmic choices matched. For 18 out of 20 personal videos, Zhao and Xing’s algorithm made more human-like choices than any of the three competing algorithms they tested. The pair also checked five surveillance-style videos, showing situations such as people entering a subway station, and found that their algorithm and one other outperformed the rest. Zhao is presenting their results this week at a conference hosted by the Institute of Electrical and Electronics Engineers.
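The article doesn't specify how "how closely the choices matched" was measured. One common way to score that kind of agreement is sketched below as an assumption, not the researchers' actual metric. It treats each set of highlights as the set of seconds it covers and computes an F1 score (the harmonic mean of precision and recall) against each human. It then averages over the human viewers. The helper names `seconds_covered`, `f1` and `mean_agreement` are hypothetical.

```python
from typing import List, Set, Tuple

Interval = Tuple[int, int]  # [start, end) in whole seconds


def seconds_covered(intervals: List[Interval]) -> Set[int]:
    """Expand highlight intervals into the set of seconds they cover."""
    return {t for start, end in intervals for t in range(start, end)}


def f1(selected: Set[int], reference: Set[int]) -> float:
    """Overlap score: 1.0 means identical choices, 0.0 means no overlap."""
    overlap = len(selected & reference)
    if overlap == 0:
        return 0.0
    precision = overlap / len(selected)
    recall = overlap / len(reference)
    return 2 * precision * recall / (precision + recall)


def mean_agreement(algo: List[Interval], humans: List[List[Interval]]) -> float:
    """Average F1 between the algorithm's picks and each human's picks."""
    picked = seconds_covered(algo)
    return sum(f1(picked, seconds_covered(h)) for h in humans) / len(humans)
```

If an algorithm highlights seconds 0 to 10, and three viewers pick 5 to 15, 0 to 10 and 20 to 30, the per-viewer scores are 0.5, 1.0 and 0.0, for an average agreement of 0.5. Comparing several algorithms then comes down to seeing which one has the higher average on each video.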

Zhao now plans to launch a startup, PanOptus, to commercialize his software. A PanOptus iPhone app and API are in the works.

Check out the algorithm at work on a video of Xing’s son: