Imagine standing in an open field with a bucket of water balloons and a couple of friends. You’ve decided to play a game called “Mind.” Each of you has your own set of rules. Maybe Molly will throw a water balloon at Bob whenever you throw a water balloon at Molly. Maybe Bob will splash both of you whenever he goes five minutes without getting hit — or if it gets too warm out or if it’s seven o’clock or if he’s in a bad mood that day. The details don’t matter.

That game would look a lot like the way neurons, the cells that make up your brain and nerves, interact with one another. They sit around inside an ant or a bird or Stephen Hawking and follow a simple set of rules. Sometimes they send electrochemical signals to their neighbors. Sometimes they don’t. No single neuron “understands” the whole system.

Now imagine that instead of three of you in that field there were 86 billion, about the number of neurons in an average human brain. And imagine that instead of playing by rules you made up, you each carried an instruction manual written by the best neuroscientists and computer scientists of the day: a perfect model of a human brain. No one would need the entire rulebook, just enough to know their job. If the lot of you stood around, laughing and playing by the rules whenever the rulebook told you, given enough time you could model one or two seconds of human thought.

Here’s a question though: While you’re all out there playing, is that model conscious? Are its feelings, modeled in splashing water, real? What does “real” even mean when it comes to consciousness? What’s it like to be a simulation run on water balloons?

These questions may seem absurd at first, but now imagine the game of Mind sped up a million times. Instead of humans standing around in a field, you model the neurons in the most powerful supercomputer ever built. (Similar experiments have already been done, albeit on much smaller scales.) You give the digital brain eyes to look out at the world and ears to hear. An artificial voice box grants Mind the power of speech. Now we’re in the twilight between science and science fiction. (“I’m sorry Dave, I’m afraid I can’t do that.”)

Is Mind conscious now?

Now imagine Mind’s architects copied the code for Mind straight out of your brain. When the computer stops working, does a version of you die?

These questions pose an ongoing puzzle for scientists and philosophers who think about computers, brains, and minds. And many believe they could one day have real-world implications.

Scott Aaronson, a theoretical computer scientist at MIT and author of the blog Shtetl-Optimized, is part of a group of scientists and philosophers (and cartoonists) who have made a habit of dealing with these ethical sci-fi questions. While most researchers concern themselves primarily with data, these writers perform thought experiments that often reference space aliens, androids, and the Divine. (Aaronson is also quick to point out the highly speculative nature of this work.)

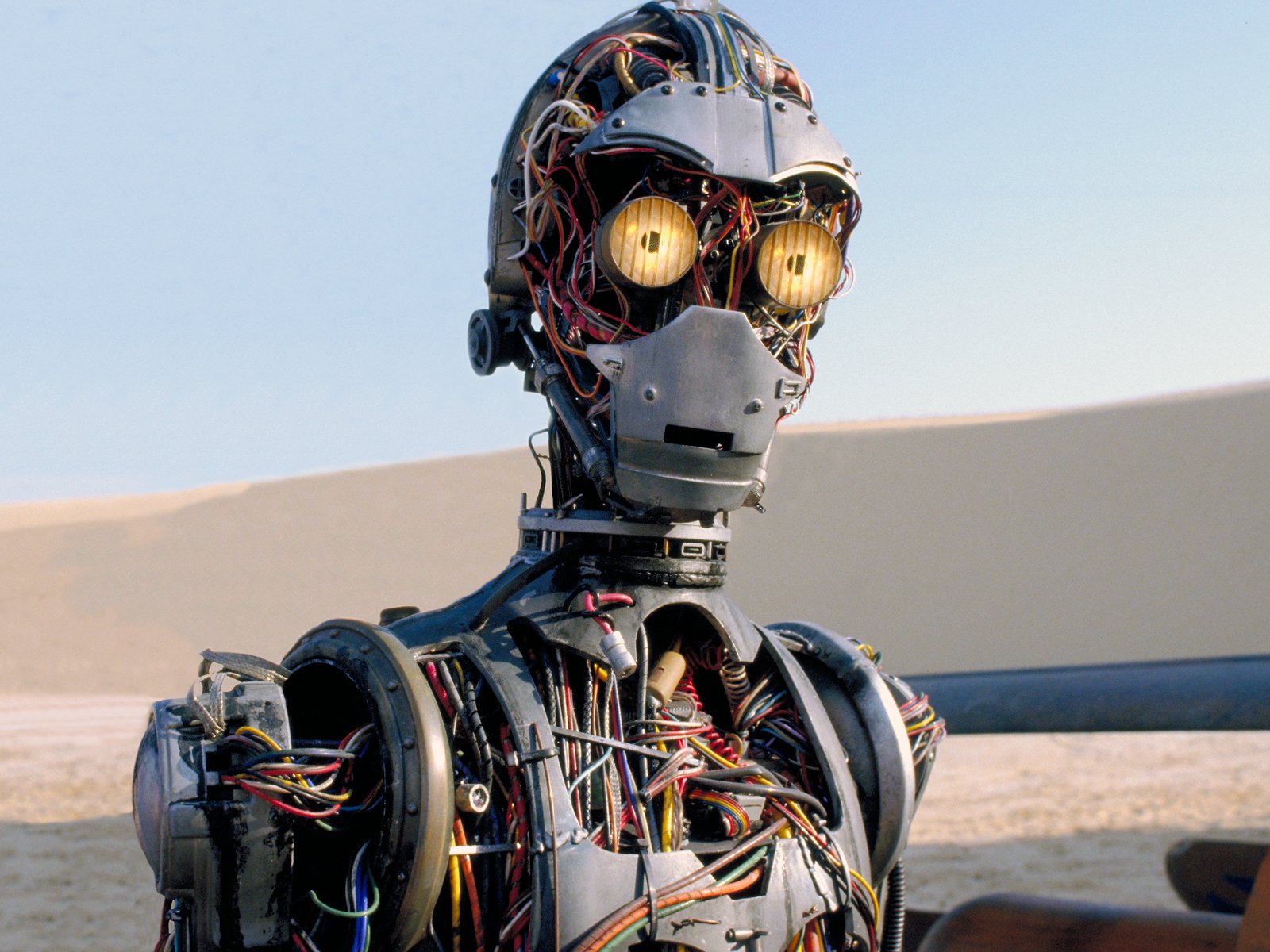

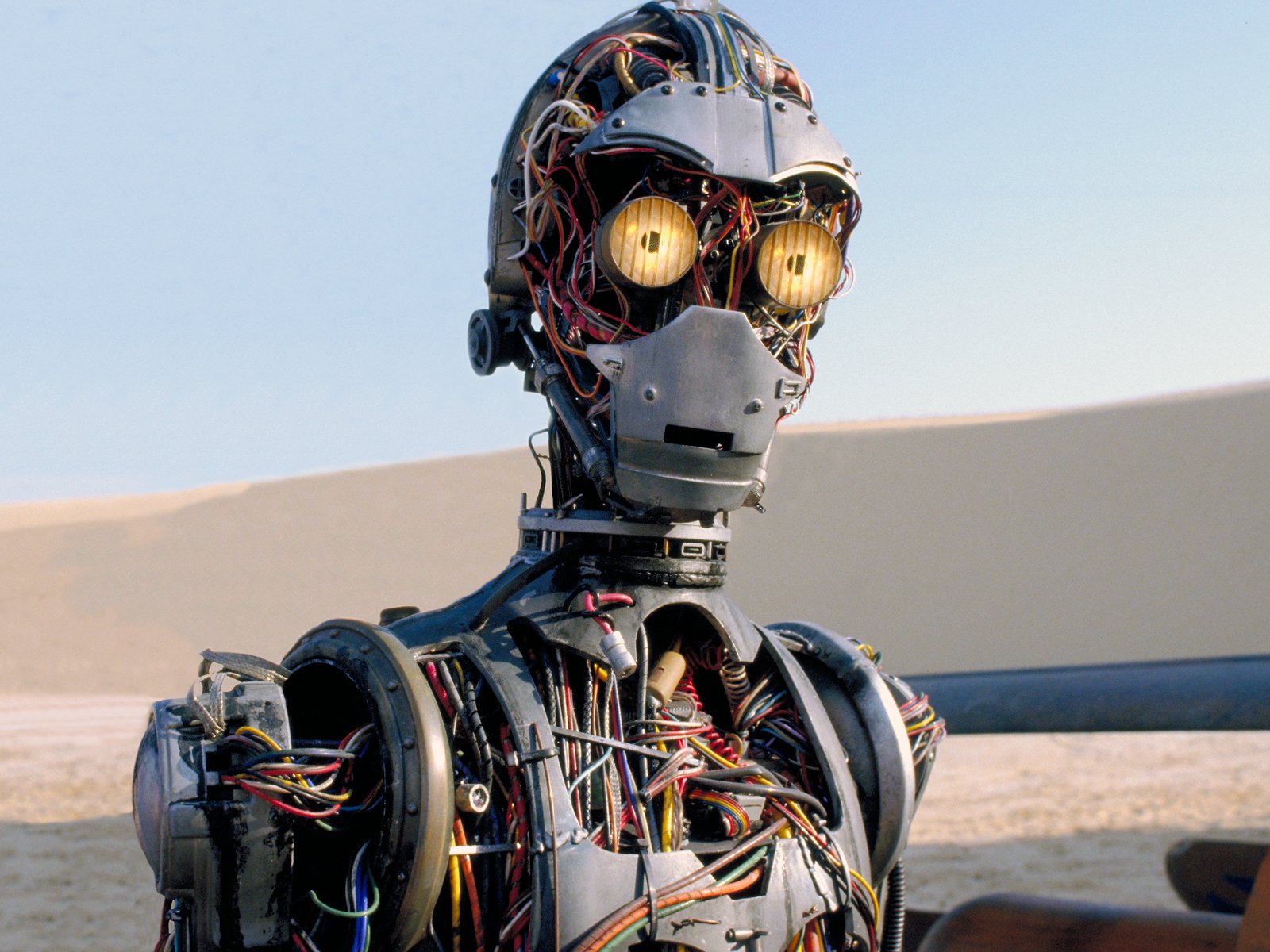

Many thinkers have broad interpretations of consciousness for humanitarian reasons, Aaronson tells Popular Science. After all, if that giant game of Mind in that field (or C-3PO or Data or HAL) simulates a thought or a feeling, who are we to say that consciousness is less valid than our own?

In 1950, the brilliant British codebreaker and early computer scientist Alan Turing wrote against human-centric theologies in his essay “Computing Machinery and Intelligence.”

“I think it’s like anti-racism,” Aaronson says. “[People] don’t want to say someone different than themselves who seems intelligent is less deserving just because he’s got a brain of silicon.”

According to Aaronson, this train of thought leads to a strange slippery slope when you imagine all the different things it could apply to. Instead, he proposes finding a solution to what he calls the Pretty Hard Problem. “The point,” he says, “is to come up with some principled criterion for separating the systems we consider to be conscious from those we do not.”

A lot of people might agree that a mind simulated in a computer is conscious, especially if they could speak to it, ask it questions, and develop a relationship with it. It’s a vision of the future explored in the Oscar-winning film Her.

Think about the problems you’d encounter in a world where consciousness were reduced to a handy bit of software. A person could encrypt a disk, and then instead of Scarlett Johansson’s voice, all Joaquin Phoenix would hear in his ear would be strings of unintelligible data. Still, somewhere in there, something would be thinking.

Aaronson takes this one step further. If a mind can be written as code, there’s no reason to think it couldn’t be written out in a notebook. Given enough time, and more paper and ink than there is room in the universe, a person could catalogue every possible stimulus a consciousness could ever encounter, and label each with a reaction. That journal could be seen as a sentient being, frozen in time, just waiting for a reader.

“There’s a lot of metaphysical weirdness that comes up when you describe a physical consciousness as something that can be copied,” he says.

The weirdness gets even weirder when you consider that, according to many theorists, not all the possible minds in the universe are biological or mechanical. In fact, under this interpretation the vast majority of minds look nothing like anything you or I will ever encounter. Here’s how it works: Quantum physics, the 20th-century branch of science that reveals the hidden, exotic behavior of the particles that make up everything, states that nothing is absolute. An unobserved electron isn’t at any one point in space, really, but spread across the entire universe as a probability distribution; the vast majority of that probability is concentrated in a tight orbit around an atom, but not all of it. This still works as you go up in scale. That empty patch of sky midway between here and Pluto? Probably empty. But maybe, just maybe, it contains that holographic Charizard trading card that you thought slipped out of your binder on the way home from school in second grade.

As eons pass and the galaxies burn themselves out and the universe gets far emptier than it is today, that quantum randomness becomes very important. It’s probable that the silent vacuum of space will be mostly empty. But every once in a while, clumps of matter will come together and dissipate in the infinite randomness. And that means, or so the prediction goes, that every once in a while those clumps will arrange themselves in such a perfect, precise way that they jolt into thinking, maybe just for a moment, but long enough to ask, “What am I?”

These are the Boltzmann Brains, named after the nineteenth-century physicist Ludwig Boltzmann. These strange late-universe beings will, according to one line of thinking, eventually outnumber every human, otter, alien and android who ever lived or ever will live. In fact, assuming this hypothesis is true, you, dear reader, probably are a Boltzmann Brain yourself. After all, there will only ever be one “real” version of you. But Boltzmann Brains that pop into being while hallucinating this moment in your life, along with your entire memory and experiences, will keep going and going, appearing and disappearing forever in the void.

In his talk at IBM, Aaronson pointed to a number of surprising conclusions thinkers have come to in order to resolve this weirdness.

Instead, Aaronson proposes a rule to help us understand what bits of matter are conscious and what bits of matter are not.

Conscious objects, he says, are locked into “the arrow of time.” This means that a conscious mind cannot be reset to an earlier state, as you can do with a brain on a computer. When a stick burns or stars collide or a human brain thinks, tiny particle-level quantum interactions that cannot be measured or duplicated determine the exact nature of the outcome. Our consciousnesses are meat and chemical juices, inseparable from their particles. Once a choice is made or an experience is had, there’s no way to truly rewind the mind to a point before it happened because the quantum state of the earlier brain cannot be reproduced.

When a consciousness is hurt, or is happy, or is a bit too drunk, that experience becomes part of it forever. Packing up your mind in an email and sending it to Fiji might seem like a lovely way to travel, but, by Aaronson’s reckoning, that replication of you on the other side would be a different consciousness altogether. The real you died with your euthanized body back home.

Additionally, Aaronson says you shouldn’t be concerned about being a Boltzmann Brain. Not only could a Boltzmann Brain never replicate a real human consciousness, but it could never be conscious in the first place. Once the theoretical apparition is done thinking its thoughts, it disappears unobserved back into the ether, effectively rewound and therefore meaningless.

This doesn’t mean we bio-beings must forever be alone in the universe. A quantum computer, or maybe even a sufficiently complex classical computer, could find itself as locked into the arrow of time as we are. Of course, that alone is not enough to call that machine conscious. Aaronson says there are many more traits it must have before you would recognize something of yourself in it. (Turing himself proposed one famous test, though, as Popular Science reported, there is now some debate over its value.)

So, you, Molly, and Bob might in time forget that lovely game with the water balloons in the field, but you can never unlive it. The effects of that day will resonate through the causal history of your consciousness, part of an unbroken chain of joys and sorrows building toward your present. Nothing any of us experience ever really leaves us.