In the grand and lengthy list of distasteful ideas, paywalling the ability to engage in “fun and interactive” conversations with AI Hitler might not rise to the very top. But it’s arguably up there.

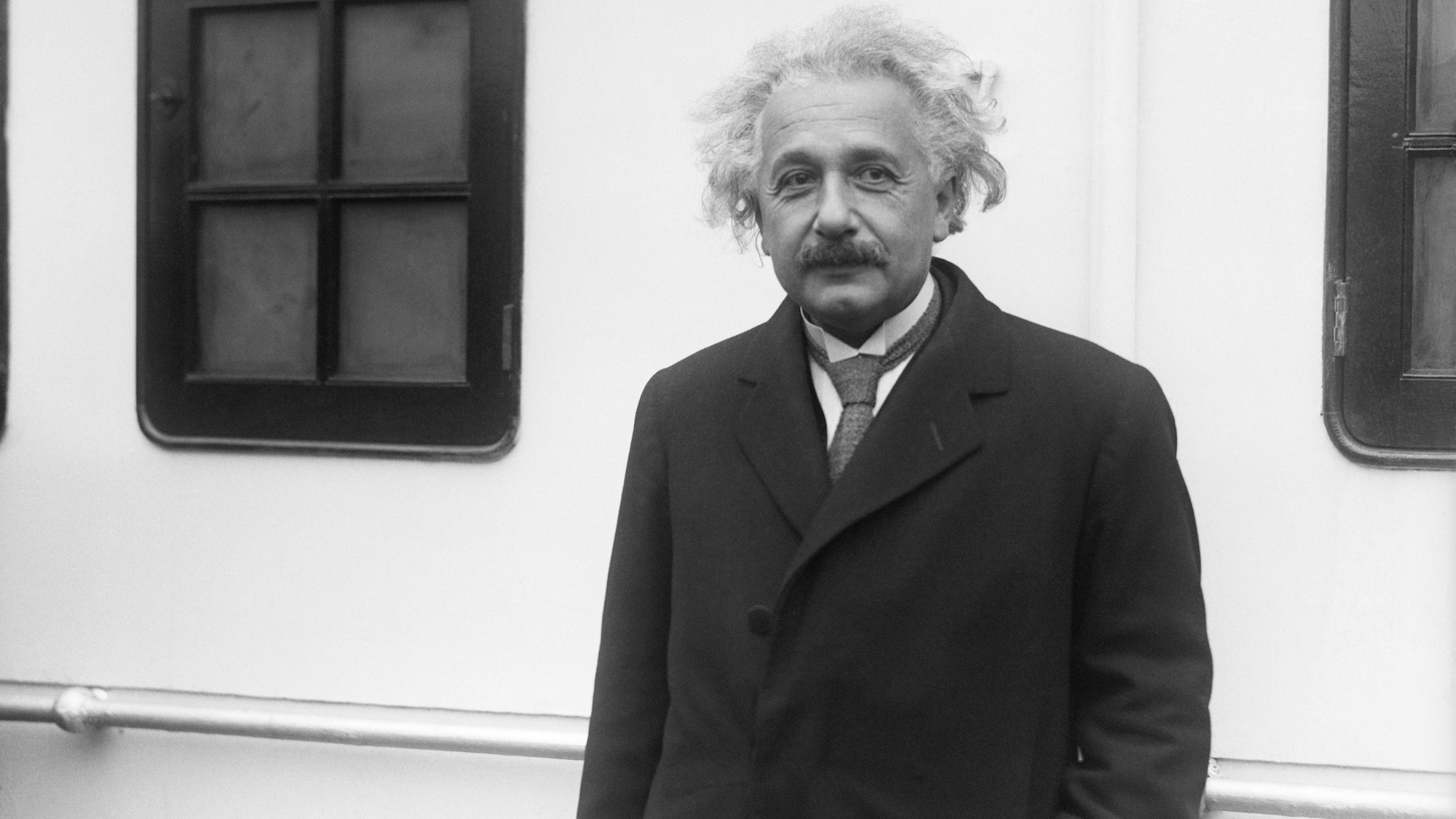

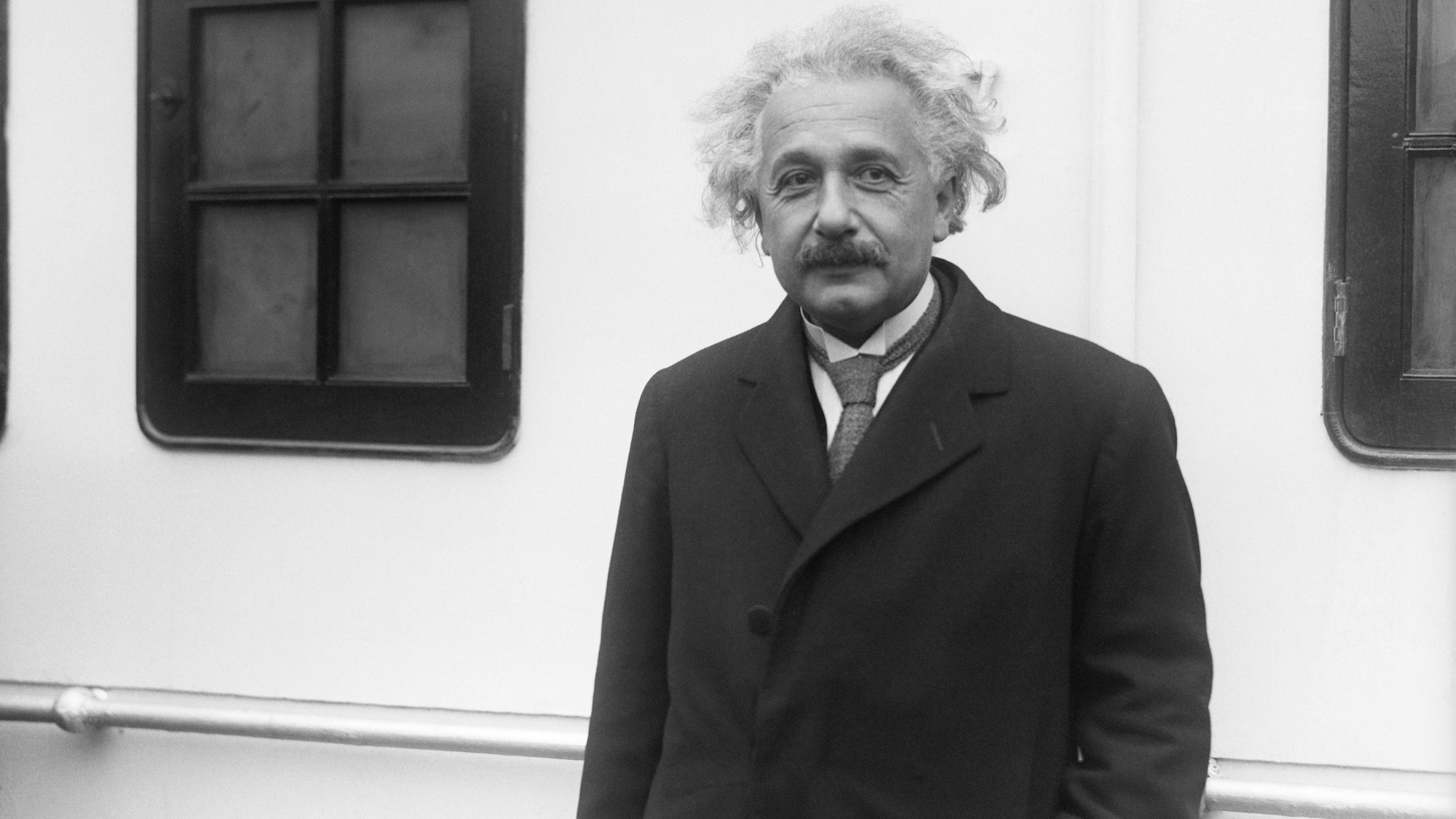

And yet countless people have already given it a shot via the chatbot app Historical Figures. The project, currently still available in Apple’s App Store, went viral last week for its exhaustive and frequently controversial list of AI profiles, including Gandhi, Einstein, Princess Diana, and Charles Manson. Although it bills itself as an educational app (the 76th most popular in the category, as of writing) appropriate for anyone over the age of 9, critics quickly derided it as a rushed, frequently inaccurate gimmick at best and, at worst, a cynical exploitation of the burgeoning, already fraught technology that is ChatGPT.


Even Sidhant Chaddha, the 25-year-old Amazon software development engineer who built the app, conceded to Rolling Stone last week that ChatGPT’s confidence and inaccuracy are a “dangerous combination” for users who might mistakenly believe the supposed facts it spews are sourced. “This app uses limited data to best guess what conversations may have looked like,” reads a note on the app’s homepage. Chaddha did not respond to PopSci’s request for comment.

Some historians vehemently echo that sentiment, including Ekaterina Babintseva, an assistant professor specializing in the history of technology at Purdue University. For her, the attempt to use ChatGPT in historical education isn’t just tasteless; it’s potentially radically harmful.

“My very first thought when ChatGPT was created was that, ‘Oh, this is actually dangerous,’” she recounts over Zoom. To Babintseva, the danger resides less in worries about academic plagiarism and more in AI’s larger effects on society and culture. “ChatGPT is just another level towards eradicating the capacity for the critical engagement of information, and the capacity for understanding how knowledge is constructed.” She also points towards the opaque nature of current major AI development by private companies concerned with keeping a tight, profitable grip on their intellectual property.


“ChatGPT doesn’t even explain where this knowledge comes from. It blackboxes its sources,” she says.

It’s important to note that OpenAI, the developer behind ChatGPT (and, by extension, third-party spin-offs like Historical Figures), has made much of its research and foundational designs available for anyone to examine. Getting a detailed understanding of the vast internet text repositories it uses to train its AI, however, is much more convoluted. Even asking ChatGPT to cite its sources fails to offer anything more specific than “publicly available sources” it “might” draw from, like Wikipedia.

As such, programs like Chaddha’s Historical Figures app provide skewed, sometimes flat-out wrong narratives while also failing to explain how those narratives were constructed in the first place. Compare that to historical academic papers and everyday journalism, replete with source citations, footnotes, and paper trails. “There are histories. There is no one narrative,” says Babintseva. “Single narratives only exist in totalitarian states, because they are really invested in producing one narrative, and diminishing the narratives of those who want to produce narratives that diverge from the approved party line.”

It wasn’t always this way. Until the late 1990s, artificial intelligence research centered on “explainable AI,” with creators focusing on how human experts such as psychologists, geneticists, and doctors make decisions. By the end of the decade, however, AI developers began to shift away from this philosophy, deeming it largely irrelevant to their actual goals. Instead, they opted to pursue neural networks, which often arrive at conclusions even their own designers can’t fully explain.


Babintseva and fellow science and technology studies scholars urge a return to explainable AI models, at least for the systems that have real effects on human lives and behaviors. AI should aid research and human thought, not replace them, she says, and she hopes organizations such as the National Science Foundation will push forward with fellowships and grant programs conducive to research in this direction.

Until then, apps like Historical Figures will likely continue cropping up, all based on murky logic and unclear sourcing while advertising themselves as new, innovative educational alternatives. What’s worse, programs like ChatGPT will continue their reliance on human under-labor to produce the knowledge foundations without ever acknowledging those voices. “It represents this knowledge as some kind of unique, uncanny AI voice,” Babintseva says, rather than a product of multiple complex human experiences and understandings.


In the end, experts caution that Historical Figures should be viewed as nothing more than the latest digital parlor trick. Douglas Rushkoff, a preeminent futurist and the author, most recently, of Survival of the Richest: Escape Fantasies of the Tech Billionaires, tried to find a silver, artificial lining to the app in an email to PopSci. “Well, I’d rather use AI to… try out ideas on dead people [rather] than to replace the living. At least that’s something we can’t do otherwise. But the choice of characters seems more designed to generate news than to really provide people with a direct experience of Hitler,” he writes.

“And, seeing as how you emailed me for a comment, it seems that the stunt has worked!”