Neuroscientists can already read some basic thoughts, such as whether a test subject is looking at a picture of a cat or at an image oriented to the left or to the right. They can even decode pictures that a person is merely imagining in the mind’s eye. Even leaders in the field are shocked by how far our ability to peer into people’s minds has come. Will brain scans of the future be able to tell whether a person is lying or telling the truth?

Will they reveal whether a consumer wants to buy a car, or expose our secret likes and dislikes and our hidden prejudices? We aren’t there yet, but these possibilities carry dramatic social, legal and ethical implications.

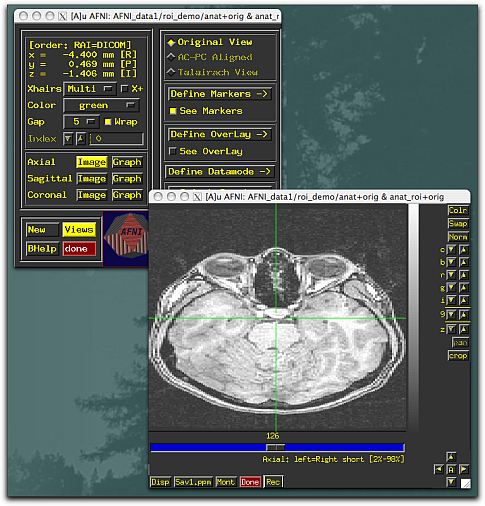

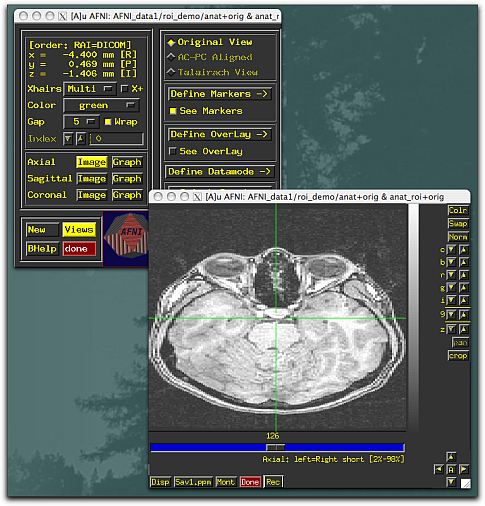

Last night at the World Science Festival in New York, leading neuroscientists took the stage to discuss current research using functional magnetic resonance imaging (fMRI), a type of scan that measures neural activity indirectly by tracking changes in blood oxygen levels in the brain. Active neurons consume oxygen, so when a person thinks about or looks at a specific image, researchers can use those oxygen levels to see which patterns “light up” in the brain and link them to a specific word or image. Results in this field are astonishing. Work from the Vanderbilt University lab of Frank Tong, one of the event’s panelists, shows that researchers can read the orientation of an object a person is looking at (say, a striped pattern tilted to the left or to the right) 95 percent of the time. His group can also predict, with 83 percent accuracy, which of two patterns a person is holding in memory.

But decoding the pattern produced by a single word or image is fairly simple; unraveling the entirety of our thoughts is not. John-Dylan Haynes, a neuroscientist at the Bernstein Center for Computational Neuroscience in Berlin and another panelist, says that researchers are not truly reading minds: “We don’t understand the language of the brain, the syntax and the semantics of neural language.” For now, he says, they are simply applying statistical analysis to brain patterns recorded during very specific, object-oriented tasks.

Some of Haynes’ own work, however, seems eerily akin to mind reading. His experiment recalls Benjamin Libet’s 1985 Delay Test, in which electroencephalogram (EEG) recordings showed neural activity before test subjects were conscious of deciding to move their hands, a finding that sparked much debate about free will. Haynes’ team was able to predict whether a test subject would press a button with the left or the right hand before the subject was aware of which one they would pick, simply by reading their brain scans.

The prospect of mind reading raises privacy concerns and deep ethical questions, and will doubtless bring complicated legal dilemmas. Emory University bioethicist Paul Root Wolpe, a leading voice in the relatively new field of neuroethics, urges society to be “vigilant” as these technologies advance and to think about where to draw the line on who should be scanned, and when. One potential grey area is the Fifth Amendment: Will a brain scan count as testimonial self-incrimination in a court case, or will it fall into the same category as hair and blood samples? Other questions to consider: Can fMRI scans serve as trustworthy lie detectors, or as indicators of racial prejudice in a hate-crime case, and should such tests be admissible in court? Wolpe predicts that the Supreme Court will have to rule on questions like these within the next decade.

For now, however, the panelists all agree: do not trust current fMRI products marketed as lie detectors. They say these may be little more than glorified polygraph tests that simply measure emotional responses to stress rather than “truth.” More research is needed to confirm the validity of such machines. But that day may come sooner than you think.