Scientists in every discipline have more data than ever, but that data is only as useful as the meaning behind it. Every bit of information can be understood only through the context in which it was gathered, and often the context in which it is used. “There is no such thing as raw data,” says Bill Anderson of the School of Information at the University of Texas at Austin and associate editor of the CODATA Data Science Journal.

Take the number 37, Anderson says. Beyond marking a place in the number sequence, it means little on its own. But add a unit (37 degrees Celsius, for instance) and it takes on more meaning. Now give it some context: 37 degrees C is normal body temperature. Now 37 represents something useful, something a doctor or researcher could use, and it becomes a piece of knowledge that could comfort a patient or answer a question.

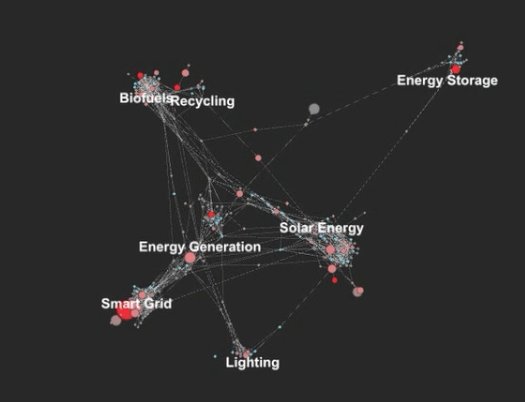

A scientist may think he’s gathering data for one experiment, but increasingly, the records will be used by many more people than just his research team: they will be parsed and re-parsed, dumped into databases and models, and scrutinized by several different teams in different disciplines. Without proper context and record-keeping, it can be difficult for others to use data in new ways, but better data husbandry can help. That way, scientists can be assured their data is congruent and they’re comparing apples to apples, or normal body temperature to high temperature, as it were.

Data stewardship is important for everything from politics to climate change — which became clear again last week after another climate study examined 1.6 billion surface temperature records, and joined the chorus of scientists who agree the globe is warming. Click through to the photo gallery to see five projects seeking to keep better tabs on data.