When babies learn to grasp things, they combine two systems: vision and motor skills. These two mechanisms, coupled with lots of trial and error, are how we learn to pick up a pencil differently from a stapler, and now robots are starting to learn the same way.

Google is teaching its robots a simple task: picking up objects from one bin and placing them in another. They’re not the first robots to pick things up, but these robots are actually learning new ways to pick up objects of different shapes, sizes, and characteristics based on constant feedback. For instance, the robots have learned to pick up a soft object differently from a hard one.

Other projects, like Cornell’s DeepGrasping paper, analyze an object once to find the best place to grasp it, attempt to pick it up, and try again if the attempt fails. Google’s approach continuously analyzes the object and the robot hand’s position relative to it throughout the grasp, which makes it more adaptable, like a human.
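The difference between "plan once, then retry on failure" and "keep looking while you move" can be illustrated with a toy one-dimensional simulation. This is a minimal sketch, not Google’s or Cornell’s code: the gripper and object positions, the 80 percent actuation gain (standing in for an arm that doesn’t move exactly as commanded), the tolerance, and every function name are hypothetical.

```python
"""Toy 1-D comparison of open-loop (plan once, retry) and closed-loop grasping.

All constants and names are hypothetical illustrations, not values from
Google's or Cornell's systems.
"""

ACTUATION_GAIN = 0.8  # hypothetical: the arm moves only 80% of each commanded distance
MAX_STEP = 1.0        # hypothetical: largest move the arm can make in one time step
TOLERANCE = 0.25      # hypothetical: how close the gripper must be to close on the object


def clip(command: float) -> float:
    """Limit a motor command to the arm's per-step range."""
    return max(-MAX_STEP, min(MAX_STEP, command))


def execute(gripper: float, command: float) -> float:
    """Apply one motor command; the real motion is scaled by an unmodeled gain."""
    return gripper + ACTUATION_GAIN * clip(command)


def open_loop_attempt(gripper: float, obj: float, steps: int) -> tuple[bool, float]:
    """Observe once, plan every command up front, then execute blindly."""
    plan = []
    remaining = obj - gripper  # the only observation in this attempt
    for _ in range(steps):
        command = clip(remaining)
        plan.append(command)
        remaining -= command  # the plan assumes each command executes exactly
    for command in plan:
        gripper = execute(gripper, command)  # no new observations while moving
    return abs(gripper - obj) <= TOLERANCE, gripper


def closed_loop_attempt(gripper: float, obj: float, steps: int) -> tuple[bool, float]:
    """Re-observe the gap before every command and correct the motion."""
    for _ in range(steps):
        gripper = execute(gripper, obj - gripper)  # fresh observation each step
    return abs(gripper - obj) <= TOLERANCE, gripper


def attempts_needed(attempt_fn, gripper: float = 0.0, obj: float = 5.0,
                    steps: int = 10, max_attempts: int = 5):
    """Return the attempt number that succeeded, or None if all attempts failed."""
    for attempt in range(1, max_attempts + 1):
        success, gripper = attempt_fn(gripper, obj, steps)
        if success:
            return attempt
    return None


if __name__ == "__main__":
    print(attempts_needed(closed_loop_attempt))  # 1
    print(attempts_needed(open_loop_attempt))    # 2
```

In this toy setup the open-loop controller ends its first attempt short of the object, because its plan assumed perfect motion, and only succeeds on a retry. The closed-loop controller absorbs the same error within a single attempt because it measures the remaining gap at every step.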

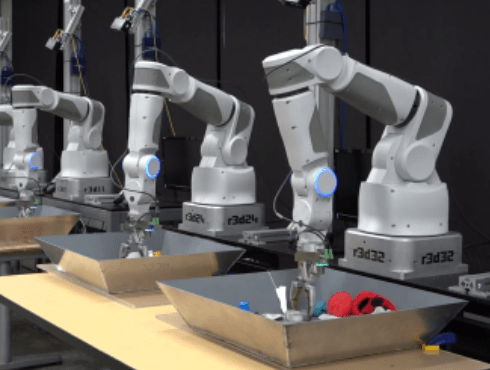

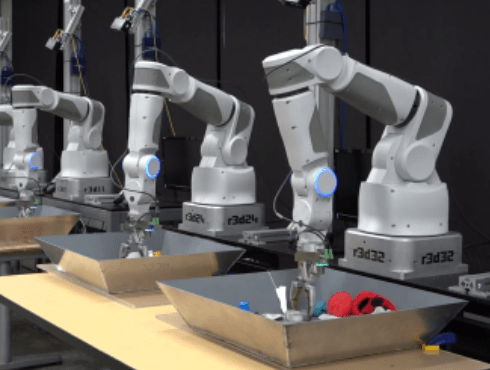

These robots are really just arms with brains, hooked up to a camera. Each has two grasping fingers attached to a triple-jointed arm, and they are controlled by two deep neural networks. Deep neural networks are a popular flavor of artificial intelligence because they are good at making predictions from large amounts of data. In this case, one neural network looks at photos of the bin and predicts whether the robot’s hand can successfully grasp the object. The other evaluates how well the hand is grasping, so it can tell the first network to make adjustments.
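The predict-then-adjust loop described above can be sketched as a controller that repeatedly asks a success predictor to score candidate hand movements, executes the best one, and closes the fingers once predicted success is high enough. This is a minimal sketch under stated assumptions: a single hypothetical function, `predict_grasp_success`, stands in for the trained networks (it simply scores closeness to the object), and the random candidate search, the 0.9 threshold, and all names are illustrative rather than details of Google’s system.

```python
import math
import random

Point = tuple[float, float]

GRASP_THRESHOLD = 0.9  # hypothetical: close the fingers once predicted success reaches this


def predict_grasp_success(hand: Point, obj: Point) -> float:
    """Hypothetical stand-in for a trained network: score falls off with distance."""
    return math.exp(-math.dist(hand, obj) ** 2)


def choose_move(hand: Point, obj: Point, rng: random.Random,
                num_candidates: int = 16, max_step: float = 0.5) -> Point:
    """Sample candidate moves and keep the one the predictor scores highest."""
    best_move = (0.0, 0.0)  # staying put is always an option
    best_score = predict_grasp_success(hand, obj)
    for _ in range(num_candidates):
        move = (rng.uniform(-max_step, max_step), rng.uniform(-max_step, max_step))
        candidate = (hand[0] + move[0], hand[1] + move[1])
        score = predict_grasp_success(candidate, obj)
        if score > best_score:
            best_move, best_score = move, score
    return best_move


def servo_and_grasp(hand: Point, obj: Point, seed: int = 0,
                    max_steps: int = 100) -> tuple[bool, int, Point]:
    """Re-evaluate the scene after every move; grasp when the predictor is confident."""
    rng = random.Random(seed)
    for step in range(max_steps):
        if predict_grasp_success(hand, obj) >= GRASP_THRESHOLD:
            return True, step, hand  # confident enough: close the gripper here
        move = choose_move(hand, obj, rng)
        hand = (hand[0] + move[0], hand[1] + move[1])
    return False, max_steps, hand


if __name__ == "__main__":
    ok, steps, final_hand = servo_and_grasp((0.0, 0.0), (2.0, 1.5))
    print(ok, steps, final_hand)
```

Because the predictor is consulted again after every move, a motion that turns out worse than expected is simply corrected on the next step rather than committed to as part of a fixed plan.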

Researchers noted that the robots didn’t need to be recalibrated when the camera was placed differently. As long as the camera had a clear view of the bin and arm, the neural network could adjust and keep learning to pick up objects.

Over the course of two months, Google’s robots made 800,000 attempts to pick up objects. Between six and 14 robots were at work at any given time, and the only human role was to reload the robots’ bins of objects. The objects were ordinary household items, such as office supplies, children’s toys, and sponges.

The outcome that most surprised the researchers, noted in their paper published on arXiv.org, was that the robots learned to pick up hard and soft objects in different ways. For an object that appeared rigid, the gripper would simply grasp its outer edges and squeeze to hold it tightly. But for a soft object like a sponge, the neural network learned that it was easier to place one gripping finger in the middle and the other around the edge, and then squeeze.

What separates this work from other grasping robots is the constant, direct feedback that helps the neural network learn with very little human intervention. This lets the robot arm pick up even objects it has never seen before with a high success rate. For new objects, the researchers logged failure rates of 10 to 20 percent, depending on the object, and if the robot failed to pick up an object, it would try again. That is a little worse than Cornell’s DeepGrasping project, whose results ranged from consistent success on things like plush toys to a 16 percent failure rate on hard objects.

Teaching robots to understand the world around them, and their own physical limits, is an important step for self-driving cars, autonomous robots, delivery drones, and every other futuristic idea that involves robots interacting with the physical world.

Next, the researchers want to test the robots in real-world conditions, outside of the lab. That means varying lighting and location, objects that move, and wear and tear on the robot.