New Computer Chip Modeled on a Living Brain Can Learn and Remember

IBM, with help from DARPA, has built two working prototypes of a "neurosynaptic chip." Based on the neurons and synapses of the brain, these first-generation cognitive computing cores could represent a major leap in power, speed and efficiency.

A pair of brain-inspired cognitive computer chips unveiled today could be a new leap forward — or at least a major fork in the road — in the world of computer architecture and artificial intelligence.

About a year ago, we told you about IBM’s project to map the neural circuitry of a macaque, the most complex brain networking project of its kind. Big Blue wasn’t doing it just for the sake of science — the goal was to reverse-engineer neural networks, helping pave the way to cognitive computer systems that can think as efficiently as the brain. Now they’ve made just such a system — two, actually — and they’re calling them neurosynaptic chips.

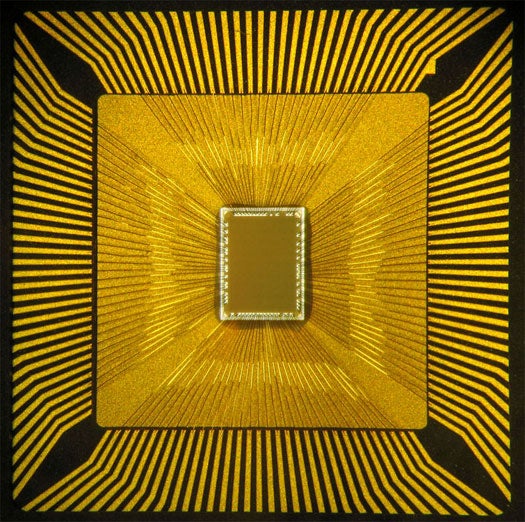

Built on a 45-nanometer silicon/metal oxide semiconductor platform, both chips have 256 neurons. One chip has 262,144 programmable synapses and the other contains 65,536 learning synapses, which can remember and learn from their own actions. IBM researchers have used the compute cores for experiments in navigation, machine vision, pattern recognition, associative memory and classification, the company says. It’s a step toward redefining computers as adaptable, holistic learning systems, rather than yes-or-no calculators.
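Those synapse counts are easier to picture per neuron. The snippet below is only a back-of-the-envelope check, assuming the synapses are split evenly among each chip's 256 neurons; the variable and function names are illustrative and do not come from IBM.

```python
NEURONS_PER_CHIP = 256

# Synapse counts reported for the two prototype chips.
SYNAPSES = {
    "programmable": 262_144,
    "learning": 65_536,
}


def synapses_per_neuron(total_synapses, neurons=NEURONS_PER_CHIP):
    """Average synapses per neuron, assuming an even split (an assumption)."""
    return total_synapses // neurons


per_neuron = {kind: synapses_per_neuron(total) for kind, total in SYNAPSES.items()}
for kind, count in per_neuron.items():
    print(f"{kind}: {count} synapses per neuron")
```

Under that assumption, the programmable chip works out to 1,024 synapses per neuron and the learning chip to 256.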

“This new architecture represents a critical shift away from today’s traditional von Neumann computers, to extremely power-efficient architecture,” Dharmendra Modha, project leader for IBM Research, said in an interview. “It integrates memory with processors, and it is fundamentally massively parallel and distributed as well as event-driven, so it begins to rival the brain’s function, power and space.”

In a von Neumann architecture, instructions and data share the same memory and travel over the same pathway to the processor. This creates a bottleneck that fundamentally limits how fast information can move between memory and processor. IBM’s system eliminates that bottleneck by putting the circuits for data computation and storage together, allowing the system to compute information from multiple sources at the same time with greater efficiency. Also like the brain, the chips have synaptic plasticity, meaning certain regions can be reconfigured to perform tasks to which they were not initially assigned.

IBM’s long-term goal is to build a chip system with 10 billion neurons and 100 trillion synapses that consumes just one kilowatt of power and fits inside a shoebox, Modha said.

The project is funded by DARPA’s SyNAPSE (Systems of Neuromorphic Adaptive Plastic Scalable Electronics) initiative, and IBM just completed phases 0 and 1. IBM’s project, which involves collaborators from Columbia University, Cornell University, the University of California-Merced and the University of Wisconsin-Madison, just received another $21 million in funding for phase 2, the company said.

Computer scientists have been working for some time on systems that can emulate the brain’s massively parallel, low-power computing prowess, and they’ve made several breakthroughs. Last year, computer engineer Steve Furber described a synaptic computer network that consists of tens of thousands of cellphone chips.

The most notable computer-brain achievements have been in the field of memristors. As the name implies, a memory resistor can “remember” the resistance it last had while current was flowing through it, so when the current is turned back on, the resistance is unchanged. We will not attempt to delve too deeply here, but because the device holds its state without power, it can store information far more efficiently.
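The memory effect described above can be illustrated with a toy model. This is a deliberately simplified sketch, not a description of HP's devices: it assumes resistance depends only on how much charge has passed through the component, and every name and number in it is made up for illustration.

```python
class ToyMemristor:
    """Toy model: resistance depends on the charge that has flowed so far."""

    def __init__(self, r_on=100.0, r_off=16_000.0, state=0.5, mobility=1.0):
        self.r_on = r_on          # resistance when fully "on" (arbitrary value)
        self.r_off = r_off        # resistance when fully "off" (arbitrary value)
        self.state = state        # internal state, kept between 0.0 and 1.0
        self.mobility = mobility  # how strongly current shifts the state

    @property
    def resistance(self):
        # Blend the two extremes according to the internal state.
        return self.r_on * self.state + self.r_off * (1.0 - self.state)

    def apply_current(self, current, seconds):
        # Charge (current x time) moves the state; zero current changes nothing.
        self.state += self.mobility * current * seconds
        self.state = min(1.0, max(0.0, self.state))


m = ToyMemristor()
before = m.resistance
m.apply_current(current=0.2, seconds=1.0)    # a pulse of current lowers the resistance
after_pulse = m.resistance
m.apply_current(current=0.0, seconds=100.0)  # power off: no current flows
after_idle = m.resistance
print(before, after_pulse, after_idle)
```

In this sketch the resistance drops after the pulse and then stays exactly where it was while no current flows, which is the "memory" the name refers to.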

Hewlett-Packard has been developing memristors since its researchers first demonstrated one in 2008, and the company has also been part of the SyNAPSE project. Last spring, HP engineers described a titanium dioxide memristor that uses low power.

For a brain-based computer system, memristors can function as a computer analogue for a synapse, which also stores information about previous data transfer. IBM’s chip doesn’t use a memristor architecture, but it does integrate memory with computation power — and it uses computer neurons and axons to do it. The building blocks are simple, but the architecture is unique, said Rajit Manohar, associate dean for research and graduate studies in the engineering school at Cornell.

“When a neuron changes its state, the state it is modifying is its own state, not the state of something else. So you can physically co-locate the circuit to do the computation, and the circuit to store the state. They can be very close to each other, so that cooperation becomes very efficient,” he said.
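To make that idea concrete, here is a minimal sketch of an event-driven "neuron" that stores its own state alongside the logic that updates it. It is a toy integrate-and-fire model written for illustration only, not IBM's circuit design; the class name, threshold and weights are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ToyNeuron:
    """A neuron that keeps its state next to the logic that updates it."""

    threshold: float = 1.0  # hypothetical firing threshold
    potential: float = 0.0  # state stored locally, inside the neuron

    def receive(self, weight: float) -> bool:
        """Handle one incoming spike; return True if the neuron fires."""
        self.potential += weight
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run(events, neurons):
    """Process (neuron_index, weight) events; work happens only when one arrives."""
    fired = []
    for index, weight in events:
        if neurons[index].receive(weight):
            fired.append(index)
    return fired


neurons = [ToyNeuron() for _ in range(3)]
events = [(0, 0.6), (1, 0.3), (0, 0.5), (2, 1.2)]
fired = run(events, neurons)
print(fired)  # [0, 2]
```

Each neuron reads and writes only its own `potential`, so no update has to travel to a separate memory, and nothing is computed for a neuron that receives no events.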

Modha said a memristor is just a new way to store memory.

“A bit is a bit is a bit. You could store a bit in a memristor, or a phase-change memory, or a nano-electromechanical switch, or SRAM, or any form of memory that you please. But by itself, that does not a complete architecture make,” Modha said. “It has no computational capability.”

But this new chip does have that power, he said. It integrates memory with processor capability on a typical SOI-CMOS platform, using traditional transistors in a new design. Along with integrated memory to stand in for synapses, the neurosynaptic “core” uses typical transistors for input-output capability, i.e. neurons.

This new architecture will not replace traditional computers, however. “Both will be with us for a long time to come, and continue to serve humanity,” Modha predicted.

The idea is that future powerful chips based on this brain-network design will be able to ingest and compute information from multiple inputs and make sense of it all — just like the brain does.

A cognitive computer monitoring the oceans could record and compute variables like temperature, wave height and acoustics, and decide whether to issue tsunami or hurricane warnings. Or a grocer stocking shelves could use a special glove that monitors scent, texture and sight to flag contaminated produce, Modha said. Modern computers can’t handle that level of detail from so many inputs, he said. But our brains do it all the time — grab a rotting peach, and your senses of touch, smell and sight work in concert instantaneously to determine that the fruit is bad.

To do this, the brain uses electrical signals between some 150 trillion synapses, all while sipping energy — our brains need about 20 watts to function. Understanding how this works is key to building brain-based computers, which is why IBM has been working with neuroscientists to study monkey and cat brains. That research is progressing, Modha said.

But it will be quite some time before computer chips can truly match the ultra-efficient computational powerhouses that nature gave us.
