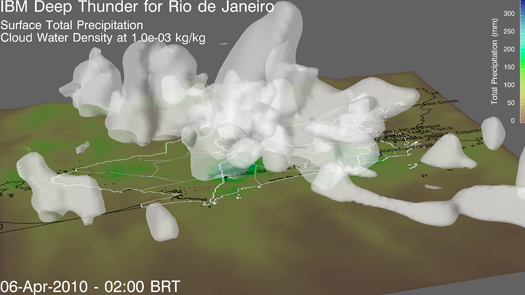

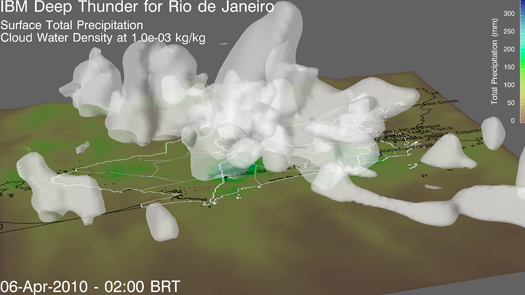

In Rio de Janeiro, when a massive storm comes in off the Atlantic, like one did a couple of years ago, hundreds of lives and thousands of homes can be lost in a single afternoon. But in a new state-of-the-art command center, a kind of municipal war room dedicated to making the entire city more efficient, supercomputers are monitoring the weather via high-powered weather models custom-engineered by IBM. Deep Thunder, as the weather-modeling project is known, keeps city leaders and regional agencies abreast of what the skies have in store, square kilometer by square kilometer, both in real time and 48 hours into the future.

Even a decade ago, something like this wouldn’t have been possible. But now, explosions in computing power and sophisticated software design are driving a revolution in atmospheric modeling that allows researchers to predict the weather and its impacts in whole new ways–ways that might not just save us from the occasional wrath of a violent storm, but also from ourselves.

“Since 1950 we’ve basically witnessed a revolution in weather forecasting,” says Dr. Louis W. Uccellini, director of the National Centers for Environmental Prediction at the U.S. National Weather Service, noting the proliferation of satellites in orbit and sensor networks on the ground and in the sky that now provide terabytes of global weather data. What’s been missing is a way to find meaning in all of this data. “Right now, the biggest weakness is the computational capacity that we’re able to bring to this enterprise,” he says.

That’s changing as supercomputing power becomes commonplace. The NWS itself is in the process of procuring its next-generation computing platform. More computing power means better weather models running on better algorithms crunching more data.

Uccellini offers a simplified example: whereas earlier generations of the NWS’s weather models relied mainly on atmospheric data to make their projections, the NWS now commonly runs atmospheric models alongside land models, ice models, and ocean models. So while those earlier projections might predict that it was going to snow, Uccellini says, scientists can now combine the atmospheric and land model data to predict whether that snow is going to stick. The NWS’s next-gen computer should churn out even richer forecasts, with more layers of meaning.

If predicting the weather were our only concern, we could end the story there. But that’s rarely the case.

PREDICTING THE IMPACTS

“If all you can do is solve the weather problem, that’s insufficient,” says Lloyd Treinish, who runs the Deep Thunder initiative from IBM’s Yorktown, N.Y., research campus. “That doesn’t solve the business problem, which is connected to the impact of the weather.”

Deep Thunder isn’t new–the project has been modeling weather in the New York area for a decade–but it is pushing the current state of the art in weather forecasting. It is a computing solution designed to provide extremely accurate forecasts tailored to specific needs and problems. It doesn’t provide particularly long-term weather projections: the further out a model attempts to forecast, the less solid data is available, and even with all the computing power scientists could dream of, there will always be atmospheric variables that degrade the accuracy of forecasts the further out they are made. But out to about 48 hours it is remarkably precise.

What makes Deep Thunder and models like it so interesting–and potentially game-changing–is what Treinish calls coupling–the use of weather models to predict not just the weather, but the impacts of weather. For that, Deep Thunder uses data from NOAA, NASA, the NWS, the U.S. Geological Survey–as well as, uniquely, detailed data that’s not about weather per se, but that’s relevant to whatever particular problem the project is tackling.

Take the example of a power utility. Plug information relevant to that utility into Deep Thunder–where its power stations are, where each transformer is, how its service trucks are distributed throughout the area, historical grid data–and run it through Deep Thunder. The algorithms can tell you whether tomorrow’s incoming thunderstorm is likely to cause a power outage, and if so where. The utility can then reorient its resources to deal with the threat ahead of time, restoring power faster and keeping life (and commerce) humming along at a normal pace.
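To make the idea of coupling concrete, here is a minimal sketch in Python. It is an illustration only, not how Deep Thunder works: the grid of forecast wind gusts, the `Transformer` record, and the per-asset `gust_limit_kmh` threshold are all hypothetical stand-ins for the much richer weather and utility data described above.

```python
from dataclasses import dataclass


@dataclass
class Transformer:
    name: str
    cell: tuple            # (row, col) of the forecast grid cell the asset sits in
    gust_limit_kmh: float  # hypothetical gust speed above which an outage is likely


def outage_risks(forecast_gusts, transformers):
    """Return (name, excess_kmh) for each asset whose forecast gust
    exceeds its limit, sorted with the largest excess first."""
    at_risk = []
    for t in transformers:
        gust = forecast_gusts[t.cell]
        if gust > t.gust_limit_kmh:
            at_risk.append((t.name, gust - t.gust_limit_kmh))
    return sorted(at_risk, key=lambda pair: pair[1], reverse=True)


# Made-up forecast: peak gust (km/h) per grid cell for tomorrow's storm.
forecast_gusts = {(0, 0): 45.0, (0, 1): 95.0, (1, 0): 70.0, (1, 1): 110.0}

# Made-up utility data: where each transformer is and what it can withstand.
transformers = [
    Transformer("T-north", (0, 1), 80.0),
    Transformer("T-south", (1, 0), 85.0),
    Transformer("T-east", (1, 1), 90.0),
]

risks = outage_risks(forecast_gusts, transformers)
for name, excess in risks:
    print(f"{name}: forecast gust exceeds limit by {excess:.0f} km/h")
```

The weather forecast alone says where the wind will be strongest. Joined with the utility’s own asset data, it says which pieces of equipment to send crews toward first.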

Extrapolate that scenario to any number of weather-related human problems, and it’s easy to see how these new models are going to reshape economies, cities, government agencies, and just about everything else that happens under the sky. With sophisticated weather models coupled to their particular problems, freight companies could minimize delays, governments could better plan for severe storms (like the snowstorms that crippled New York last winter), utilities could better plan for tomorrow’s energy loads, and agencies could even brace for specific disasters that at best interrupt life and at worst end it.

That’s what Rio de Janeiro’s command center is all about. Working with IBM as part of its Smarter Cities initiative, local and regional authorities there are building a central information hub that ties the various stakeholders in Rio together: agencies, state and local governments, police, firefighters, sanitation, city services. All share information so they can quickly react to problems and execute their offices efficiently. Deep Thunder was one of the first technologies implemented there.

“There’s a very specific problem that they’re trying to address,” Treinish says. “These large storms that impact the city can easily dump a foot of rain in 24 hours, and when they’ve had events like this it leads to significant loss of life and significant property loss.”

In Rio, a one-size-fits-all approach is of little use given the city’s topography and weather. The urban landscape there is unique, with huge shanty neighborhoods built into the steep hillsides surrounding the city proper.

“These storms come off the ocean and they interact with the very complex terrain in Rio, and potentially the water begins to accumulate on these steep hillsides and it leads to mudslides,” Treinish says. “So the large amount of rain and the flooding is a problem, but ultimately it’s the mudslides that lead to the societal and economic impacts in the city.”

In the Rio datacenter, Deep Thunder takes into account city-specific soil composition data, hydrology models, urban flooding models, topographical data, population density and land use data. With the resulting output, city authorities can pinpoint which hillsides are at the greatest risk of mudslides during a certain storm and allocate resources (and warn inhabitants) accordingly.

Such high-powered, coupled models won’t just solve problems for governments and businesses–they could potentially solve some of humanity’s greatest big-picture challenges as well.

Homes and buildings that can plan for the day ahead computationally will be astronomically more efficient than today’s “smartest” structures. For a “smart grid” to be smart, it first has to know what’s happening, and knowing–down to the mile, down to the building, down to the very person–how much energy is likely to be drawn from the grid in a given hour is key to making it work.

“Weather forecasts could drive the cooling and heating of your home, hands-off,” says John Bosse, director of energy and government services for Earth Networks, the company that runs WeatherBug (a maker of apps and desktop widgets that keep users updated on the weather) and overseer of one of the nation’s larger commercial weather sensor networks. “Most people just set their thermostat at a temperature. You could be so much more efficient. It’s not like you have to look at the forecast every morning and program your thermostat, this would all be automated.”

More precise weather modeling is also critical to enabling the rollout of renewable, carbon-free energy sources like wind and solar. Their resources are the weather, after all, but their inconsistency is the main obstacle keeping utilities from relying on them for more than an auxiliary role. If you know with a high degree of certainty how much sunlight and how much wind you’ll have from day to day, you can lean more heavily on those carbon-free options–and power down the coal plants when the weather is right.
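The arithmetic behind that trade-off is simple, and a short Python sketch shows it. The hourly figures below are invented for illustration, and the function name `fossil_needed` is hypothetical. A real utility would also have to account for forecast uncertainty, storage, and how quickly plants can ramp up or down.

```python
def fossil_needed(demand_mw, wind_mw, solar_mw):
    """For each hour, return the load (MW) left over after forecast
    wind and solar output, floored at zero when renewables cover demand."""
    return [
        max(0.0, demand - wind - solar)
        for demand, wind, solar in zip(demand_mw, wind_mw, solar_mw)
    ]


# Made-up forecasts for three hours of the day, in megawatts.
demand = [900.0, 1000.0, 1100.0]
wind = [300.0, 500.0, 700.0]
solar = [0.0, 400.0, 600.0]

residual = fossil_needed(demand, wind, solar)
print(residual)  # [600.0, 100.0, 0.0]
```

The more confident the wind and solar forecasts, the more a utility can trust the zeros in that list and actually power down a plant for the hour.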

This is a future that is going to take some time (and a lot of investment) to realize, but the seeds are already taking root in places like Rio. As weather sensor networks are integrated–NOAA has already begun stitching together America’s patchwork sensor networks, creating a network of networks that algorithms can pull from–and computing power continues to fall in price and proliferate, this kind of modeling will become commonplace.

It will become more accurate too, because those sensors will inform the algorithms and vice versa. “It’s the enabling of a feedback loop between the observations and the modeling and the simulations,” Treinish says. “Better weather observations enable better models, and better models can be used to determine additional ways to sample the atmosphere.”

How will all this impact your local morning weather report? “It’s probably not going to be striking,” Bosse says. “It’s not going to be night and day. What you are going to see is more precision and better accuracy in short term forecasts.” Meaning your local Roker will still stand in front of those radar maps and talk about pressure systems moving this way and that.

But you may notice the hour-by-hour forecast, the one predicting what time an afternoon shower will roll in, becoming increasingly accurate. That’s the algorithms at work, chipping away at atmospheric uncertainties and helping to build a smarter, more efficient future.