It was a courtroom first. Late last year, an Illinois judge admitted functional magnetic-resonance imaging (fMRI) evidence during the sentencing phase of a murder trial. Defense attorneys argued that the scan showed signs of mental illness and hoped it would persuade the jury to show mercy. It didn’t. The jury sentenced Brian Dugan to death for killing a 10-year-old girl. Although the scan failed to sway the jurors, many lawyers call the case a watershed moment: It opens the door to all kinds of fMRI analysis, including the work of two companies that say they can read brain activity to detect deceit. In essence, fMRI could someday become an unbeatable lie detector. The reality, though, is a little more complicated.

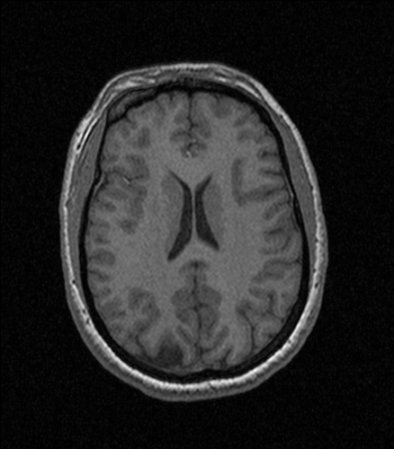

fMRI scanners detect variations in the magnetic properties of blood as oxygen levels change in response to neural activity. The harder a region of the brain works, the more oxygen-rich blood flows to it and the brighter it appears on the scan. When a person recalls a memory or formulates a lie, the region doing that work lights up. The next step is decoding what the activity in each region means. From tests on volunteers, researchers are developing “deceit patterns,” images of how a deceitful brain looks in fMRI scans, which computers compare against a subject’s scan to determine whether he or she answered truthfully. But because no two brains are exactly alike, standardizing these incriminating patterns and delivering consistent results has so far proved elusive.
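To make the pattern-matching idea concrete, here is a toy sketch in Python. It assumes, purely for illustration, that each scan has been boiled down to a short list of activation levels for a handful of brain regions, and it uses a simple nearest-template rule: average the known “lie” scans into one template and the known “truth” scans into another, then label a new scan by whichever template it correlates with more strongly (Pearson correlation). The function names, the four-region vectors, and every number are hypothetical. This is not the method used by No Lie MRI or Cephos, whose techniques aren’t detailed here, and real analyses work with many thousands of voxels and far more sophisticated statistics.

```python
from math import sqrt

# Toy model (hypothetical): a "scan" is a list of activation levels, one per brain region.

def mean_pattern(scans):
    """Average several scans region by region into a single template."""
    n = len(scans)
    return [sum(region) / n for region in zip(*scans)]

def correlation(a, b):
    """Pearson correlation between two equal-length activation vectors."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = sqrt(sum((x - ma) ** 2 for x in a))
    sb = sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)

def classify(scan, lie_pattern, truth_pattern):
    """Label a scan by whichever template it correlates with more strongly."""
    if correlation(scan, lie_pattern) > correlation(scan, truth_pattern):
        return "deceptive"
    return "truthful"

# Hypothetical training data from volunteers whose answers are known.
lie_pattern = mean_pattern([[0.9, 0.8, 0.2, 0.1], [0.8, 0.9, 0.3, 0.2]])
truth_pattern = mean_pattern([[0.2, 0.3, 0.8, 0.9], [0.1, 0.2, 0.9, 0.8]])

print(classify([0.7, 0.9, 0.1, 0.2], lie_pattern, truth_pattern))  # prints "deceptive"
```

The weakness described above shows up directly in this design: the templates are averages over other people’s brains, so a truthful subject whose activity happens to be arranged differently can still correlate more closely with the “lie” template.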

The two companies marketing fMRI lie detectors, No Lie MRI in California and Cephos in Massachusetts, have reported accuracy rates from 75 to 98 percent. That’s not good enough, says Joy Hirsch, director of the Program for Imaging and Cognitive Sciences at Columbia University: “Someone’s life could be in the hands of this technology.”
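One rough way to see why Hirsch finds those figures inadequate is to count the expected mistakes. The sketch below assumes that “accuracy” means the fraction of individual tests that give the correct answer and that each test errs independently. The companies’ reported figures may be defined differently, and this simple count does not separate innocent people wrongly flagged from liars who slip through. The function name `expected_errors` is hypothetical.

```python
def expected_errors(accuracy: float, n_tests: int) -> int:
    """Expected number of wrong calls, assuming each test is independently
    correct with probability `accuracy` (a simplifying assumption)."""
    return round((1 - accuracy) * n_tests)

# The low and high ends of the reported 75 to 98 percent range.
for accuracy in (0.75, 0.98):
    errors = expected_errors(accuracy, 1000)
    print(f"{accuracy:.0%} accurate: about {errors} wrong calls per 1,000 tests")
```

Under these assumptions, even the top of the range means about 20 wrong results per 1,000 tests, and the bottom means one in four.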

Joel Huizenga, the founder and CEO of No Lie MRI, agrees, noting that as acceptance of fMRI evidence grows, his company is participating in more studies than ever to improve its recognition of deceit patterns. “Are we as good as we can be? No, of course not,” he says. His counterpart at Cephos, Steven Laken, says that whatever the error rate, jurors must be told that fMRI results are not infallible and should be used only to support a judgment, not as definitive evidence of guilt.

Even so, studies show that jurors focus on salient points of evidence and discount the probability of error; they tend to accept scientific-looking results presented by experts as true. The answer is to make fMRI as reliable as it can be, says F. Andrew Kozel, a researcher at the University of Texas Southwestern Medical Center who studies lie detection with fMRI. That will take more research.

His latest study, partially funded by Cephos and published last year, used fMRI to test volunteers who had taken part in a mock crime staged for the experiment. Although the test caught guilty participants who lied, it sometimes also nailed innocent ones who were telling the truth. Kozel is seeking funding to test scenarios that come as close as possible to the ones fMRI might be used to evaluate in court.

“Might” is still the operative word. Despite the decision in Illinois, judges typically scrutinize the merit of a new scientific method before admitting it at trial. “I believe there will be more attempts to have this testimony introduced in court,” says Michael Perlin, a law professor at New York Law School who studies how courts use fMRI results. But if attorneys can’t show that the technology is reliable and relevant, they’ll probably fail.

The real test will come when prosecutors try to use fMRI to bolster their cases. Experts tend to agree that, for now, the technology delivers mixed results. Using a picture of someone’s brain to justify a prison sentence—or worse—may be too much to ask.