
Modern smartphones have increasingly large collections of cameras strewn across their backs. The Nokia 9 PureView, for instance, has five separate cameras, all with their own explicit purpose. This morning Huawei announced its new P30 smartphone, and while it doesn’t have the most cameras (just four rear-facing imaging devices here), it does boast some interesting photographic tricks.

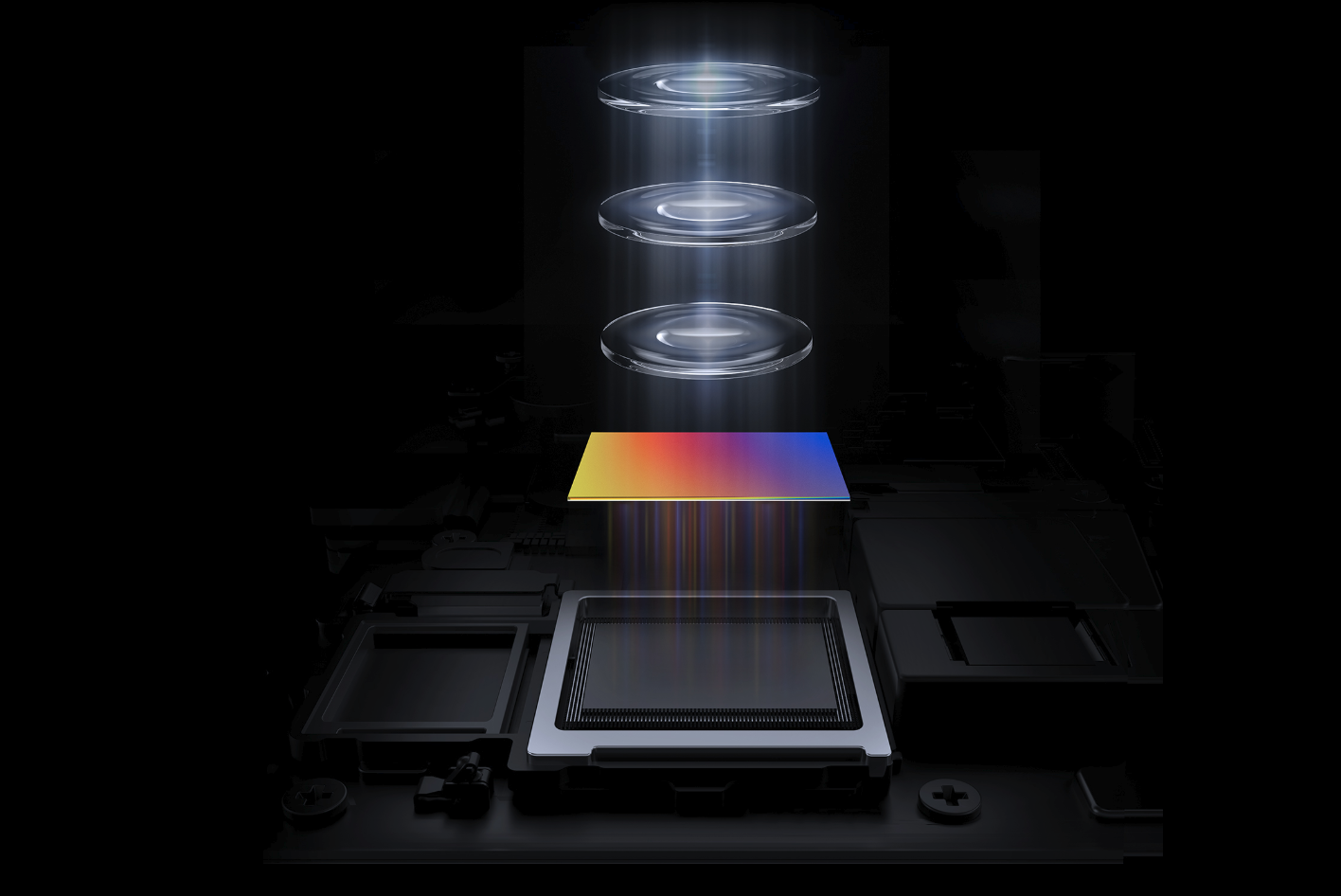

The most compelling change comes in the form of the main camera’s sensor, which has 40 total megapixels but combines them into groups to create 10-megapixel final photos. Almost all modern digital cameras are built on the same basic premise: a field of light-sensitive pixels sits underneath an array of colored filters. Each pixel gets a red, blue, or green filter, and the whole array is arranged into a specific pattern.

When you take a picture, each pixel captures light through its colored filter, then the image processor in the camera looks at that data and turns it into true color information.
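To make the 40-to-10-megapixel grouping more concrete, here is a minimal Python sketch of pixel binning. The ratio implies four sensor pixels per output pixel; the 2x2 block layout, the choice to sum the readings, and the function name `bin_2x2` are assumptions for illustration, not a description of Huawei’s actual pipeline.

```python
def bin_2x2(raw):
    """Sum each 2x2 block of a 2D grid of sensor readings into one value.

    Hypothetical helper: assumes the four pixels in a block sit under the
    same color filter, so their readings can simply be added together.
    """
    height, width = len(raw), len(raw[0])
    if height % 2 or width % 2:
        raise ValueError("grid dimensions must be even")
    return [
        [
            raw[y][x] + raw[y][x + 1] + raw[y + 1][x] + raw[y + 1][x + 1]
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


# A toy 4x4 "sensor" becomes a 2x2 image: a quarter of the pixel count,
# with each output pixel built from four readings.
raw = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
binned = bin_2x2(raw)
print(binned)  # [[14, 22], [46, 54]]
```

Each output value draws on four times as much collected light as a single pixel would, which is the usual reason for trading resolution away like this.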

The sensor in Huawei’s main camera keeps the red and blue filters over the pixels, but it replaces the green filters with yellow ones. Huawei says it’s doing this to let in more light. A green filter blocks out most of the light you could consider red, while a yellow filter allows both red and green to get through. The company says this swap allows for up to 40 percent more light to hit the photosensitive part of the device.
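One idealized way to picture the yellow filter: it passes red and green light, so a yellow pixel’s reading is roughly the sum of the two, and one component can be estimated by subtracting a neighboring pixel’s reading of the other. The sketch below assumes perfect filters and made-up light levels. The function names are hypothetical, and the numbers are not meant to reproduce Huawei’s 40 percent figure.

```python
def yellow_reading(red, green):
    """Idealized yellow filter: passes red and green light, blocks blue."""
    return red + green


def recover_green(yellow, red):
    """Estimate green by subtracting a neighboring red reading.

    Assumption: the red value comes from an adjacent red-filtered pixel
    that saw the same light.
    """
    return yellow - red


# Arbitrary light levels for one small patch of a scene.
scene = {"red": 30, "green": 50, "blue": 20}

y = yellow_reading(scene["red"], scene["green"])
g = recover_green(y, scene["red"])
print(y, g)  # 80 50
```

In this toy case the yellow pixel collects 80 units of light where a green-only pixel would collect 50, and the green value is still recoverable afterward. Real filters overlap and real readings are noisy, so an actual processor has to do considerably more work than a single subtraction.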

Allowing in more light, at least in theory, lets the camera shoot in darker settings without creating an image that’s too dark or filled with digital noise. If you’re familiar with camera jargon, the P30 Pro claims an equivalent maximum ISO value of more than 400,000, which is comparable with very high-end cameras (though other differences, like sensor size and lens quality, make a direct comparison difficult).

So does this setup create better photos? There are only a few early sample images out there at the moment, but it’s important to note that most of this color filtering happens without the user ever knowing about it. Even if you pried off the lens and looked at the sensor itself, you wouldn’t necessarily notice a difference in its appearance. And while some have wondered if this will make images more yellow, it won’t. The camera’s processor is still crunching numbers to try to get realistic color data for the scene; it’s just working from different raw materials. It’s possible there could be some color weirdness in certain situations, but the company could also tweak the computational process down the road to change the way it renders the images.

Because of its low-light acumen, the P30 has drawn some comparisons to Google’s really impressive Night Sight technology, which lives inside the Pixel 3 phones. In that case, however, Google is using more standard camera hardware and relying heavily on combining multiple exposures taken in rapid succession—the bulk of the real photography magic happens during the processing. Huawei is trying to cram more light into the sensor during every single shot.
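To illustrate why combining several exposures helps in low light, here is a rough Python sketch that averages noisy frames of the same scene. It is plain averaging under made-up noise levels, not Google’s actual Night Sight processing, which also has to deal with things like aligning the frames. All names and numbers here are assumptions.

```python
import random
import statistics


def capture_frame(scene, noise_sigma, rng):
    """Simulate one short exposure: the true scene plus random sensor noise."""
    return [value + rng.gauss(0, noise_sigma) for value in scene]


def stack_frames(frames):
    """Average the frames pixel by pixel."""
    return [statistics.fmean(pixel_values) for pixel_values in zip(*frames)]


def rms_error(image, scene):
    """Root-mean-square difference between an image and the true scene."""
    squared = sum((a - b) ** 2 for a, b in zip(image, scene))
    return (squared / len(scene)) ** 0.5


rng = random.Random(0)   # fixed seed so the run is repeatable
scene = [20.0] * 500     # a dim, flat patch of 500 pixels
frames = [capture_frame(scene, 10.0, rng) for _ in range(16)]

single_error = rms_error(frames[0], scene)
stacked_error = rms_error(stack_frames(frames), scene)
print(stacked_error < single_error)
```

Because the noise differs from frame to frame while the scene stays the same, averaging cancels much of the noise and leaves the scene intact. Huawei’s approach aims at the other side of the same problem: collecting more light in each frame so there is less noise to clean up.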

The rest of the cameras on the P30 are interesting, too. There’s a wide-angle camera with a 20-megapixel sensor and a roughly 120-degree field of view, as well as a camera with 5x optical zoom.

The last camera on the P30 is specifically meant to help measure depth for things like augmented reality, although it’s not active yet for any practical purpose. Still, that’s something we’ll see more of as phones crave distance data for things like capturing 3D virtual images or even faking blur in portrait modes. The Samsung Galaxy S10 is already using one.