Taking powerful artificial intelligence software and making it open source, so anyone in the world can use it, seems like something out of a sci-fi movie, but both Google and Microsoft have done exactly that in recent months. Now Facebook is going a step further and opening up its powerful AI computer hardware designs to the world.

It’s a big move, because while software platforms can certain make AI research easier, more replicable, and more shareable, the whole process is nearly impossible without powerful computers.

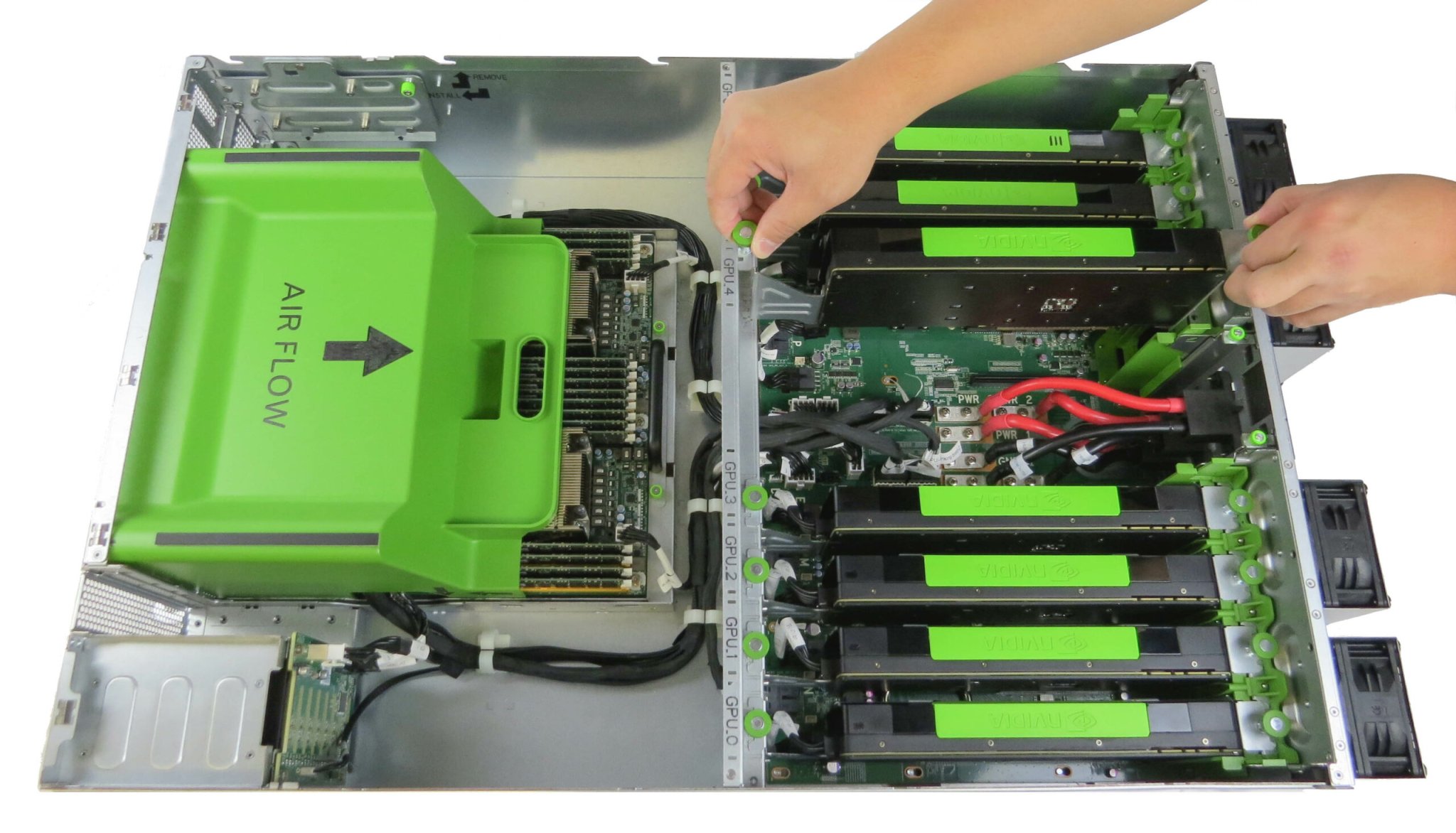

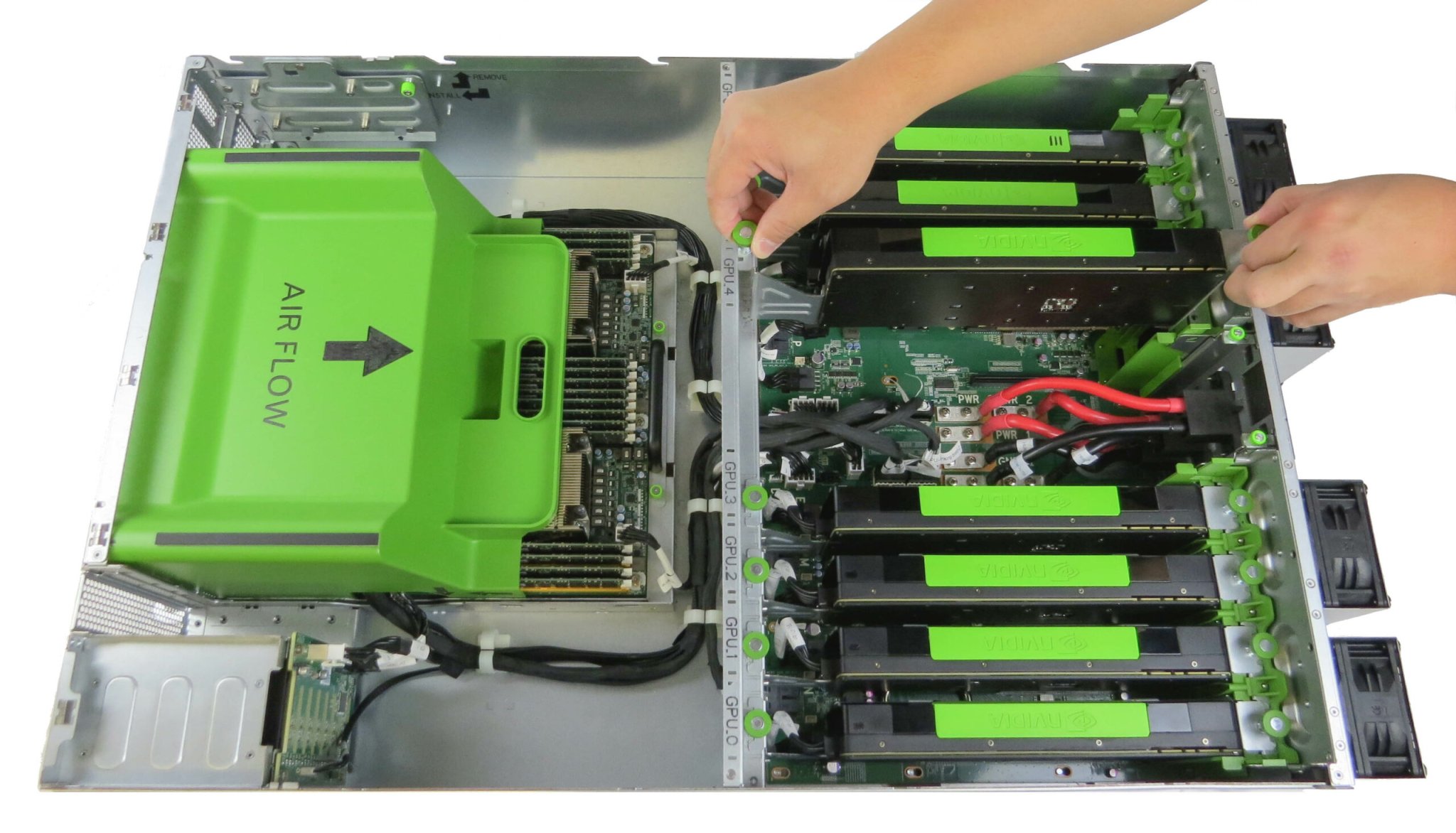

Today, Facebook announced that it is open sourcing the designs of its servers — which it claims run twice as fast as before. The new design, called Big Sur, calls for eight high-powered graphics processing units, or GPUs, amongst the other traditional parts of the computer like the central processing unit, or CPU, hard drive, and motherboard. But Facebook says that the new GPUs especially allow its researchers to work with double the size and speed of their machine learning models.

Why it matters

Regularly working with images or audio takes can be taxing for consumer-grade equipment, and some types of artificial intelligence have to break down and learn from 10 million pictures in order to learn from them. The process, called training, requires serious computing power.

First, let’s establish some basics— the underbelly of A.I. can be a daunting and complex. Artificial intelligence is an umbrella term for a number of approaches towards creating an artificial system that mimics human thought and reasoning. There have been many approaches to this; right now the most popular methods are different kinds of artificial neural networks for deep learning. These networks have to be trained, or shown examples, before they can output information. To have the computer learn what a cat is, you need you show it potentially millions of pictures of cats (although Facebook’s methods have dramatically reduced that number). The neural networks are virtual clusters of mathematical units that can individually process small pieces of information, like pixels, and when brought together and layered can tackle infinitely more complex tasks.

This means that millions of photos or phrases or bits of audio need to be broken down and looked at by potentially millions of artificial neurons, on different levels of abstraction. If we’re looking at the parts of the traditional computer that are candidates for this job, we’re given two options: the processor (CPU) or the graphics processor unit (GPU).

The difference between CPU and GPU

The CPU, the main “brain” of the modern computer, is great for working on a few general computing tasks. It has relatively few cores (4-8 in consumer computers and phones), but each core has a deeper cache memory for working on one thing for more times. It taps the computer’s random access memory (RAM) for data needed in its processes.

The GPU is the opposite. A single server-oriented GPU can have thousands of cores with little memory, optimized for executing tiny, repeated tasks (like rendering graphics). Getting back to artificial intelligence, the multitude of cores in a GPU allow more computations to be run in at the same time, speeding up the whole endeavor. CPUs used to be the go-to for this kind of heavy processing, but large-scale projects required vast fleets of networks chips, more than if computed with GPUs, according to Serkan Piantino, Engineering Director of Facebook’s A.I. Research.

“The benefit that GPUs offer is sheer density of computation in one place,” Piantino said. “At the moment, GPUs are the best for a lot of the networks we care about.”

Facebook says Big Sur works with a wide range of GPUs from different manufacturers, but they’re specifically using a recently released model from Nvidia, which has been pitching their products heavily towards artificial intelligence research. In their tests of CPU vs GPU performance for image training, dual 10-core Ivy Bridge CPUs (read: very fast) processed 256 images in 2 minutes 17 seconds. One of their server-oriented K40 GPUs processed the same images in just 28.5 seconds. And the newer model that Facebook uses in Big Sur, Nvidia’s M40, is actually faster.

Many Nvidia devices also come with the Compute Unified Device Architecture (CUDA) platform, that allows developers to write native code like C or C++ directly to the GPU, to orchestrate the cores in parallel with greater precision. CUDA is a staple at many A.I. research centers, like Facebook, Microsoft, and Baidu.

Replicating the human brain?

The GPU is the workhorse of modern A.I., but a few researchers think that the status quo of computing isn’t the answer. Federally-funded DARPA (Defense Advanced Research Projects Agency) partnered with IBM in 2013 on the SyNapse program, with a goal to create a new breed of computer chip that learns naturally—the very act of receiving inputs would teach the hardware. The outcome was TrueNorth: a “neuromorphic” chip announced in 2014.

TrueNorth is made of 5.4 billion transistors, which are structured into 1 million artificial neurons. The artificial neurons build 256 million artificial synapses, which pass information along from neuron to neuron when data is received. The data travels through neurons, creating patterns that can be translated into usable information for the network.

In Europe, a team of researchers is working on a project called FACETS, or Fast Analog Computing with Emergent Transient States. Their chip has 200,000 neurons, but 50 million synaptic connections. IBM and the FACETS team have built their chips to be scalable, meaning able to work in parallel to vastly increase compute power. This year IBM clustered 48 TrueNorth chips to build a 48 million neuron network, and MIT Technology Review reports that FACETS hopes to achieve a billion neurons with ten trillion synapses.

Even with that number, we’re still far from recreating the human brain, which consists of 86 billion neurons and could contain 100 trillion synapses. (IBM has hit this 100 trillion number in previous TrueNorth trials, but the chip ran 1542 times slower than real-time, and took a 96-rack supercomputer.)

Alex Nugent, the founder of Knowm and DARPA SyNapse alum, is trying to bring the future of computing with a special breed of memristors, which he says would replace the CPU, GPU, and RAM that run on transistors.

The memristor has been a unicorn of the tech industry since 1971, when computer scientist Leon Chua first proposed the theory as “The Missing Circuit Element.” Theoretically, a memristor serves as a replacement to a traditional transistor, the building block of the modern computer.

A transistor can exist in two states (on or off). Oversimplified, a computer is nothing but a vast array of transistors fluctuating between on and off. A memristor uses electrical current to change the resistance of metal, which gives greater flexibility in these values. Instead of two states like a transistor, a memristor can theoretically have four or six, multiplying the complexity of information an array of memristors could hold.

Biological efficiency

Nugent worked with hardware developer Kris Campbell from Boise State University to actually create a specific chip that works with what he calls AHaH (Anti-Hebbian and Hebbian) learning. This method uses memristors to mimic chains of neurons in the brain. The ability of the memristors to change their resistance based on applied voltage in bidirectional steps is very similar to the way neurons transmit their own minuscule electric charge, says Nugent. This allows them to adapt as they use. Since their resistance acts as a natural memory, memristors would break what some researchers call the von Neumann bottleneck, data processing cap created when data is transferred between the processor and RAM.

“AHaH computing says ‘Let’s take this building block and build up from it,’” Nugent said in an interview with Popular Science. “By basically exploiting these ’neurons,’ connecting them up in different ways and pairing their outputs in different ways, you can do learning operations.”

This is how Nugent sees this work being not only applicable to general computing, but specifically oriented towards machine learning.

“As soon as you take the density that we can already achieve today, you pair that with memristors, you pair it with a theory that enables us to use it, you stack the chips in three dimensions, you end up with biological efficiency,” Nugent said. “What you end up with is intelligent technology.”