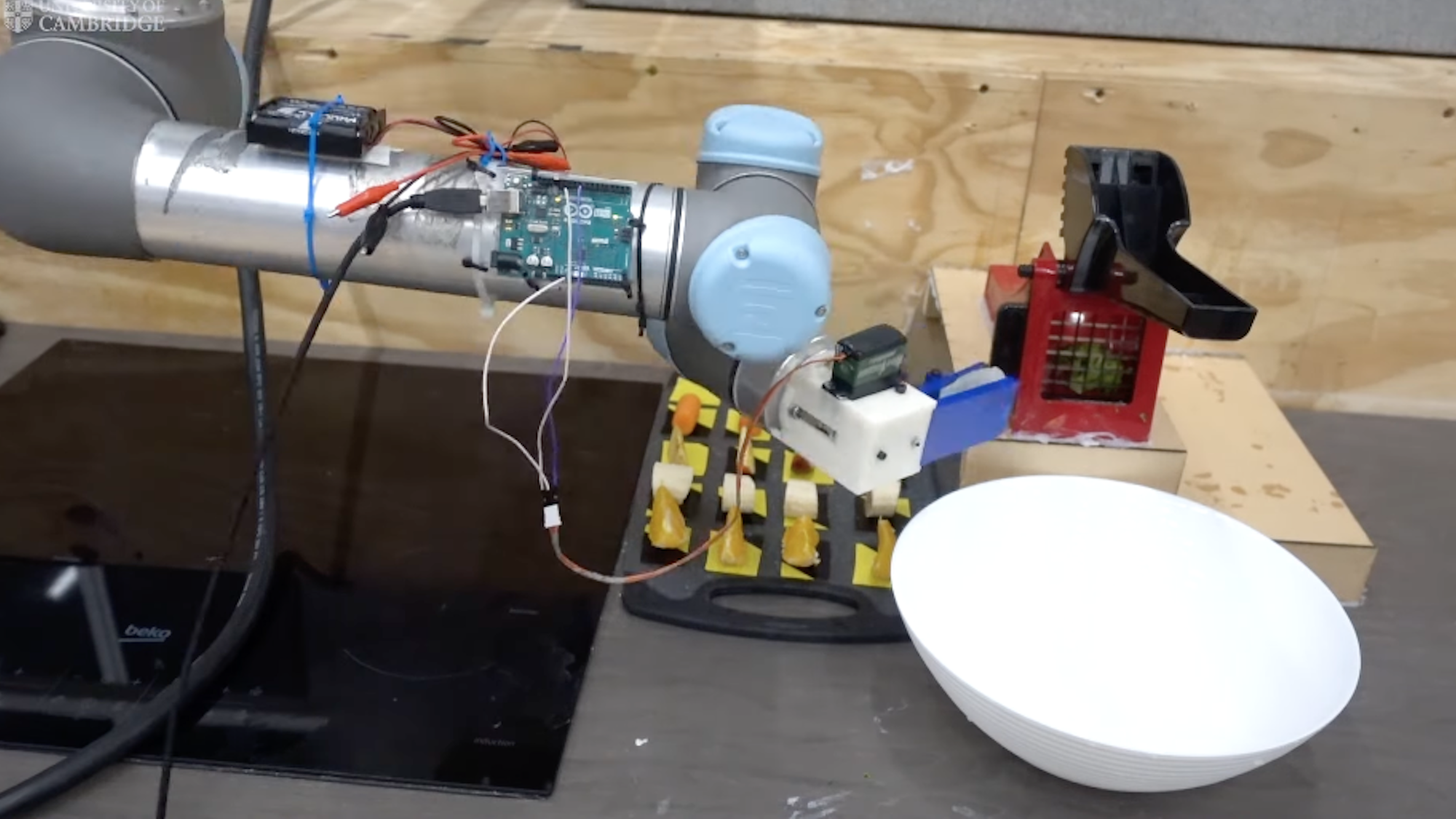

It may not win a restaurant any Michelin stars, but a research team’s new robotic ‘chef’ is still demonstrating some impressive leaps forward for culinary tech. As detailed in the journal IEEE Access, a group of engineers at the University of Cambridge’s Bio-Inspired Robotics Laboratory recently cooked up a robot capable of assembling a slate of salads after watching human demonstration videos. From there, the robot chef was even able to create its own, original salad based on its previous learning.

“We wanted to see whether we could train a robot chef to learn in the same incremental way that humans can—by identifying the ingredients and how they go together in the dish,” the paper’s first author Greg Sochacki, a Cambridge PhD candidate in information engineering, said in a statement.

What makes the team’s AI salad maker even more impressive is that the robot utilized a publicly available, off-the-shelf neural network already programmed to visually identify fruits and vegetables such as oranges, bananas, apples, broccoli, and carrots. The neural network also examined each video frame to identify the various objects, features, and movements depicted—for instance, the ingredients used, knives, and the human trainer’s face, hands, and arms. Afterwards, the videos and recipes were converted into vectors that the robot could then mathematically analyze.

Of the 16 videos observed, the robot correctly identified the recipe depicted 93 percent of the time, even though it recognized only 83 percent of the human chef’s movements. Its observations were detailed enough that the robot could tell when a recipe demonstration featured a double portion of an ingredient or when a human made a mistake, and it treated these as variations on a learned recipe rather than as an entirely new salad. According to the paper’s abstract, “A new recipe is added only if the current observation is substantially different than all recipes in the cookbook, which is decided by computing the similarity between the vectorizations of these two.”

Sochacki went on to explain that, while the recipes aren’t complex (think an un-tossed vegetable medley minus any dressings or flourishes), the robot was still “really effective at recognising, for example, that two chopped apples and two chopped carrots is the same recipe as three chopped apples and three chopped carrots.”
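
To make the idea concrete, here is a minimal Python sketch of how a cookbook could decide between "variation" and "new recipe" by comparing vectors. It is an illustration only, not the team's implementation: the ingredient list, the use of cosine similarity, the 0.9 threshold, and the function names `vectorize`, `cosine_similarity`, and `match_or_add` are all assumptions made for this example.

```python
import math

# Hypothetical fixed ingredient vocabulary (an assumption for this sketch).
INGREDIENTS = ["apple", "carrot", "orange", "banana", "broccoli"]


def vectorize(counts):
    """Turn a dict of ingredient counts into a fixed-length vector."""
    return [float(counts.get(name, 0)) for name in INGREDIENTS]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_or_add(cookbook, name, observation, threshold=0.9):
    """Return the best-matching known recipe, or add the observation as new.

    The threshold is an illustrative value, not one taken from the paper.
    """
    vec = vectorize(observation)
    best_name, best_score = None, 0.0
    for known_name, known_vec in cookbook.items():
        score = cosine_similarity(vec, known_vec)
        if score > best_score:
            best_name, best_score = known_name, score
    if best_name is not None and best_score >= threshold:
        return best_name  # treated as a variation of a known recipe
    cookbook[name] = vec  # substantially different: store as a new recipe
    return name


cookbook = {}
first = match_or_add(cookbook, "apple-carrot", {"apple": 2, "carrot": 2})
scaled = match_or_add(cookbook, "unnamed", {"apple": 3, "carrot": 3})
other = match_or_add(cookbook, "orange-banana", {"orange": 2, "banana": 1})
```

Because cosine similarity ignores overall scale, the three-apple, three-carrot demonstration points in the same direction as the two-apple, two-carrot one and is matched to the existing recipe, while the orange and banana salad shares no ingredients with it and is stored as new.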

That said, there are still some clear limitations to the robotic chef’s chops—mainly, it needs clear, steady video footage of a dish being made with unimpeded views of human movements and their ingredients. Still, researchers are confident video platforms like YouTube could be utilized to train such robots on countless new recipes, even if they are unlikely to learn any creations from the site’s most popular influencers, whose clips traditionally feature fast editing and visual effects. Time to throw on some old reruns of Julia Child’s The French Chef and get to chopping.