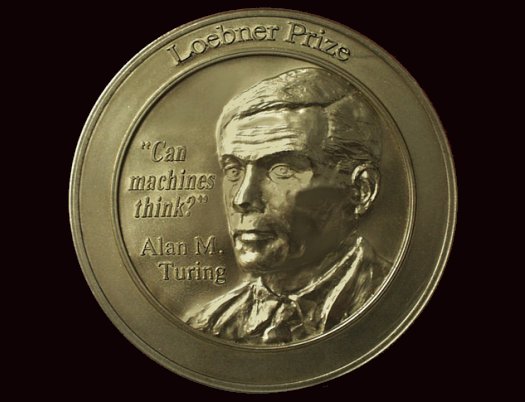

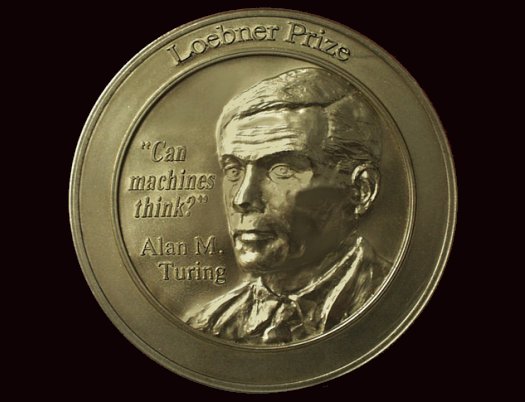

_Brian Christian’s book_ The Most Human Human_, newly out in paperback, tells the story of how the author, “a young poet with degrees in computer science and philosophy,” set out to win the “Most Human Human” prize in a Turing test weighing natural against artificial intelligence. Along the way, as he prepares to prove to a panel of judges (via an anonymous teletype interface) that he is not a machine, the book provides a sharply reasoned investigation into the nature of thinking. Are we setting ourselves up for failure by competing with machines in their analytical, logical areas of prowess rather than nurturing our own human strengths?_

The Turing test attempts to discern whether computers are, to put it most simply, “like us” or “unlike us”: humans have always been preoccupied with their place among the rest of creation. The development of the computer in the twentieth century may represent the first time that this place has changed.

The story of the Turing test, of the speculation and enthusiasm and unease over artificial intelligence in general, is, then, the story of our speculation and enthusiasm and unease over ourselves. What are our abilities? What are we good at? What makes us special? A look at the history of computing technology, then, is only half of the picture. The other half is the history of mankind’s thoughts about itself.

[. . .]

Hemispheric Chauvinism: Computer and Creature

“The entire history of neurology and neuropsychology can be seen as a history of the investigation of the left hemisphere,” says neurologist Oliver Sacks.

One important reason for the neglect of the right, or “minor,” hemisphere, as it has always been called, is that while it is easy to demonstrate the effects of variously located lesions on the left side, the corresponding syndromes of the right hemisphere are much less distinct. It was presumed, usually contemptuously, to be more “primitive” than the left, the latter being seen as the unique flower of human evolution. And in a sense this is correct: the left hemisphere is more sophisticated and specialised, a very late outgrowth of the primate, and especially the hominid, brain. On the other hand, it is the right hemisphere which controls the crucial powers of recognising reality which every living creature must have in order to survive.

The left hemisphere, like a computer tacked onto the basic creatural brain, is designed for programs and schematics; and classical neurology was more concerned with schematics than with reality, so that when, at last, some of the right-hemisphere syndromes emerged, they were considered bizarre.

The neurologist V. S. Ramachandran echoes this sentiment:

The left hemisphere is specialized not only for the actual production of speech sounds but also for the imposition of syntactic structure on speech and for much of what is called semantics–comprehension of meaning. The right hemisphere, on the other hand, doesn’t govern spoken words but seems to be concerned with more subtle aspects of language such as nuances of metaphor, allegory and ambiguity–skills that are inadequately emphasized in our elementary schools but that are vital for the advance of civilizations through poetry, myth and drama. We tend to call the left hemisphere the major or “dominant” hemisphere because it, like a chauvinist, does all the talking (and maybe much of the internal thinking as well), claiming to be the repository of humanity’s highest attribute, language.

“Unfortunately,” he explains, “the mute right hemisphere can do nothing to protest.”

Slightly to One Side

This odd focus on, and “dominance” of, the left hemisphere, says arts and education expert (and knight) Sir Ken Robinson, is evident in the hierarchy of subjects within virtually all of the world’s education systems:

At the top are mathematics and languages, then the humanities, and at the bottom are the arts. Everywhere on Earth. And in pretty much every system too, there’s a hierarchy within the arts. Art and music are normally given a higher status in schools than drama and dance. There isn’t an education system on the planet that teaches dance every day to children the way we teach them mathematics. Why? Why not? I think this is rather important. I think math is very important, but so is dance. Children dance all the time if they’re allowed to; we all do. We all have bodies, don’t we? Did I miss a meeting? Truthfully, what happens is, as children grow up, we start to educate them progressively from the waist up. And then we focus on their heads. And slightly to one side.

That side, of course, being the left.

The American school system “promotes a catastrophically narrow idea of intelligence and ability,” says Robinson. If the left hemisphere, as Sacks puts it, is “like a computer tacked onto the basic creatural brain,” then by identifying ourselves with the goings-on of the left hemisphere, by priding ourselves on it and “locating” ourselves in it, we start to regard ourselves, in a manner of speaking, as computers. By better educating the left hemisphere and better valuing and rewarding and nurturing its abilities, we’ve actually started becoming computers.

[. . .]

Centering Ourselves

We are computer tacked onto creature, as Sacks puts it. And the point isn’t to denigrate one, or the other, any more than a catamaran ought to become a canoe. The point isn’t that we’re half lifted out of beastliness by reason and can try to get even further through force of will. The tension is the point. Or, perhaps to put it better, the collaboration, the dialogue, the duet.

The word games Scattergories and Boggle are played differently but scored the same way. Players, each with a list of words they’ve come up with, compare lists and cross off every word that appears on more than one list. The player with the most words remaining on her sheet wins. I’ve always fancied this a rather cruel way of keeping score. Imagine a player who comes up with four words, and each of her four opponents only comes up with one of them. The round is a draw, but it hardly feels like one . . . As the line of human uniqueness pulls back ever more, we put the eggs of our identity into fewer and fewer baskets; then the computer comes along and takes that final basket, crosses off that final word. And we realize that uniqueness, per se, never had anything to do with it. The ramparts we built to keep other species and other mechanisms out also kept us in. In breaking down that last door, computers have let us out. And back into the light.

Who would have imagined that the computer’s earliest achievements would be in the domain of logical analysis, a capacity held to be what made us most different from everything on the planet? That it could drive a car and guide a missile before it could ride a bike? That it could make plausible preludes in the style of Bach before it could make plausible small talk? That it could translate before it could paraphrase? That it could spin half-plausible postmodern theory essays before it could be shown a chair and say, as any toddler can, “chair”?

We forget what the impressive things are. Computers are reminding us.

One of my best friends was a barista in high school: over the course of the day she would make countless subtle adjustments to the espresso being made, to account for everything from the freshness of the beans to the temperature of the machine to the barometric pressure’s effect on the steam volume, meanwhile manipulating the machine with octopus-like dexterity and bantering with all manner of customers on whatever topics came up. Then she goes to college, and lands her first “real” job–rigidly procedural data entry. She thinks longingly back to her barista days–a job that actually made demands of her intelligence.

I think the odd fetishization of analytical thinking, and the concomitant denigration of the creatural–that is, animal–and embodied aspect of life is something we’d do well to leave behind. Perhaps we are finally, in the beginnings of an age of AI, starting to be able to center ourselves again, after generations of living “slightly to one side.”

Besides, we know, in our capitalist workforce and precapitalist-workforce education system, that specialization and differentiation are important. There are countless examples, but I think, for instance, of the 2005 book Blue Ocean Strategy: How to Create Uncontested Market Space and Make the Competition Irrelevant, whose main idea is to avoid the bloody “red oceans” of strident competition and head for “blue oceans” of uncharted market territory. In a world of only humans and animals, biasing ourselves in favor of the left hemisphere might make some sense. But the arrival of computers on the scene changes that dramatically. The bluest waters aren’t where they used to be.

Add to this that humans’ contempt for “soulless” animals, their unwillingness to think of themselves as descended from their fellow “beasts,” is now cut back on all kinds of fronts: growing secularism and empiricism, growing appreciation for the cognitive and behavioral abilities of organisms other than ourselves, and, not coincidentally, the entrance onto the scene of a being far more soulless than any common chimpanzee or bonobo–in this sense AI may even turn out to be a boon for animal rights.

Indeed, it’s entirely possible that we’ve seen the high-water mark of the left-hemisphere bias. I think the return of a more balanced view of the brain and mind–and of human identity–is a good thing, one that brings with it a changing perspective on the sophistication of various tasks.

It’s my belief that only experiencing and understanding truly disembodied cognition, only seeing the coldness and deadness and disconnectedness of something that truly does deal in pure abstraction, divorced from sensory reality, only this can snap us out of it. Only this can bring us, quite literally, back to our senses.

One of my graduate school advisers, poet Richard Kenney, describes poetry as “the mongrel art–speech on song,” an art he likens to lichen: that organism which is actually not an organism at all but a cooperation between fungi and algae so common that the cooperation itself seemed a species. When, in 1867, the Swiss botanist Simon Schwendener first proposed the idea that lichen was in fact two organisms, Europe’s leading lichenologists ridiculed him, including Finnish botanist William Nylander, who had taken to making allusions to “stultitia Schwendeneriana,” fake botanist-Latin for “Schwendener the simpleton.” Of course, Schwendener happened to be completely right. The lichen is an odd “species” to feel kinship with, but there’s something fitting about it.

What appeals to me about this notion–the mongrel art, the lichen, the monkey and robot holding hands–is that it seems to describe the human condition too. Our very essence is a kind of mongrelism. It strikes me that some of the best and most human emotions come from this lichen state of computer/creature interface, the admixture, the estuary of desire and reason in a system aware enough to apprehend its own limits, and to push at them: curiosity, intrigue, enlightenment, wonder, awe.

Ramachandran: “One patient I saw–a neurologist from New York–suddenly at the age of sixty started experiencing epileptic seizures arising from his right temporal lobe. The seizures were alarming, of course, but to his amazement and delight he found himself becoming fascinated by poetry, for the first time in his life. In fact, he began thinking in verse, producing a voluminous outflow of rhyme. He said that such a poetic view gave him a new lease on life, a fresh start just when he was starting to feel a bit jaded.”

Artificial intelligence may very well be such a seizure.

_Excerpted from_ The Most Human Human _by Brian Christian. Copyright © 2011 by Brian Christian. Excerpted by permission of Anchor, a division of Random House, Inc. All rights reserved. No part of this excerpt may be reproduced or reprinted without permission in writing from the publisher._