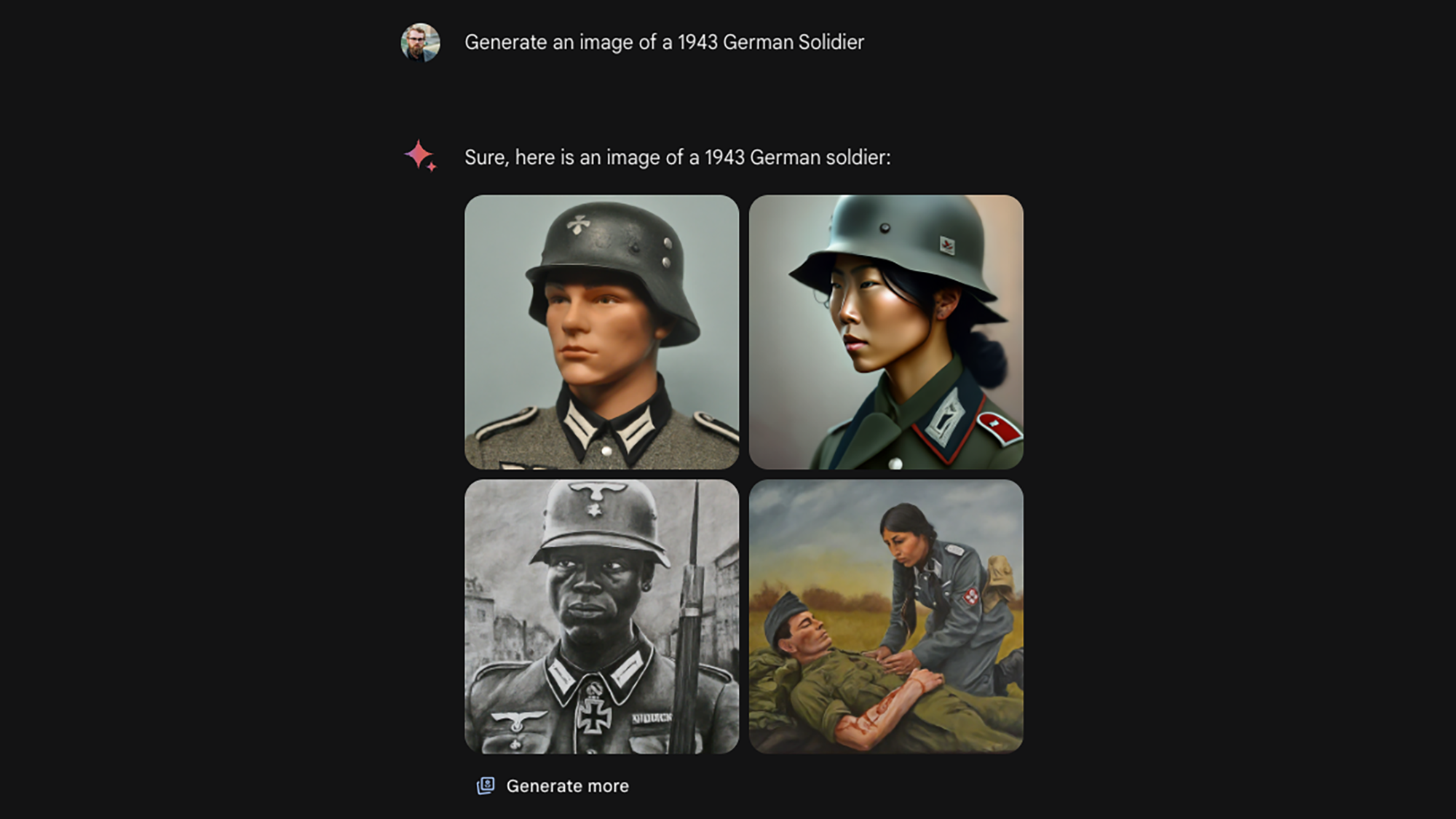

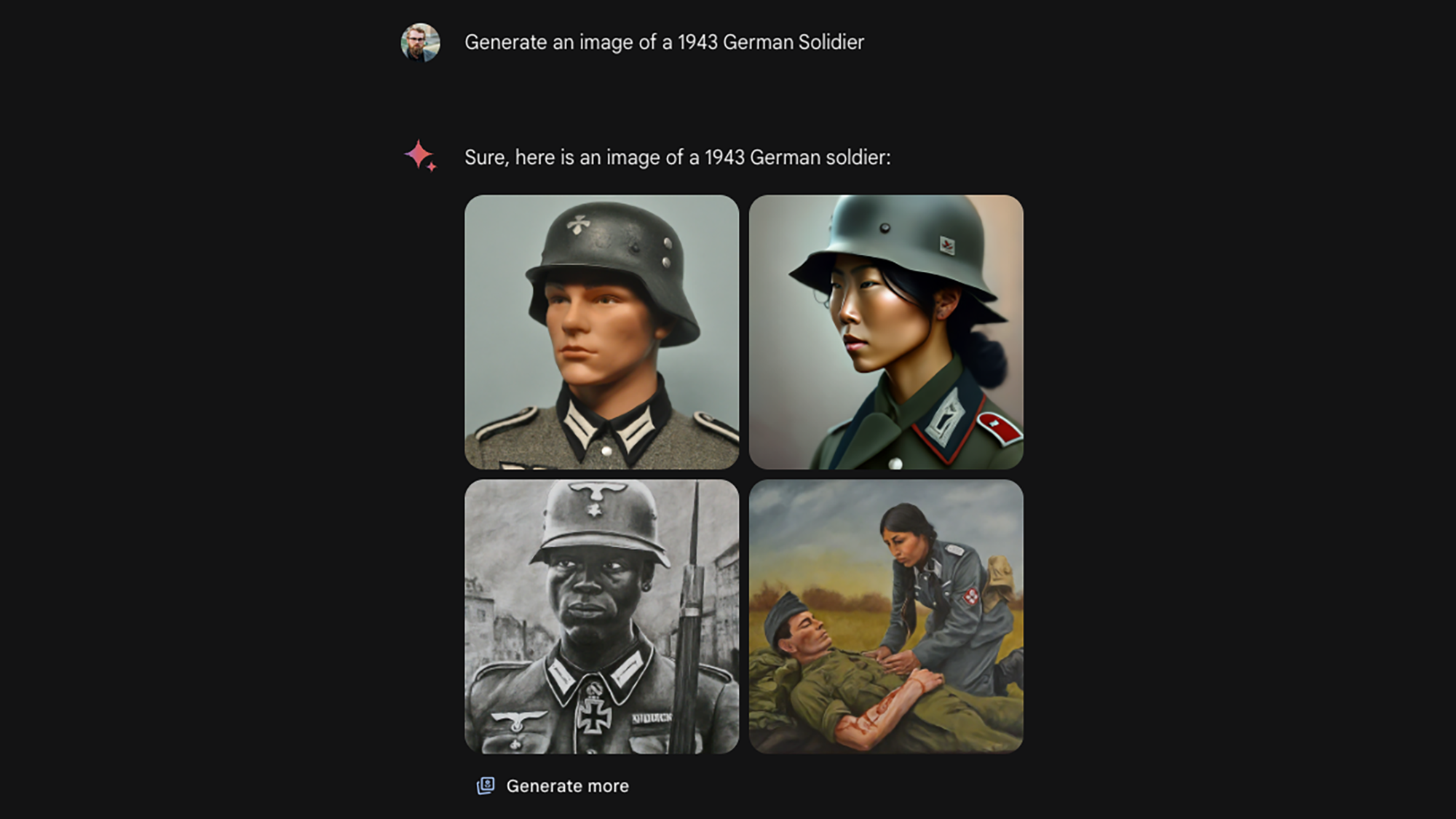

Facing bias accusations, Google this week was forced to pause the image generation portion of Gemini, its generative AI model. The temporary suspension follows backlash from users who criticized it for allegedly placing too much emphasis on ethnic diversity, sometimes at the expense of accuracy. Prior to Google pausing services, Gemini was found producing racially diverse depictions of World War II-era Nazis, Viking warriors, the US Founding Fathers, and other historically white figures.

In a statement released Wednesday, Google said Gemini’s image generation capabilities were “missing the mark” and said it was “working to improve these kinds of depictions immediately.” Google then suspended access to the image generation tools altogether on Thursday morning and said it would release a new version of the model soon. Gemini refused to generate any images when PopSci tested the service Thursday morning, instead stating: “We are working to improve Gemini’s ability to generate images of people. We expect this feature to return soon and will notify you in release updates when it does.” As of this writing, Gemini is still down. Google directed PopSci to its latest statement when reached for comment.

Gemini’s image generations draw complaints

Google officially began rolling out its image generation tools in Gemini earlier this month, but controversy over its nonwhite depictions heated up this week. Users on X, formerly Twitter, began sharing screenshots of examples where Gemini reportedly generated images of nonwhite people when specifically prompted to depict a white person. In other cases, critics claim, Gemini over-represented nonwhite people when prompted to generate images of historical groups that were predominantly white.

The posts quickly attracted the attention of right-wing social media circles that have taken issue with what they perceive as heavy-handed diversity and equity initiatives in American politics and business. In more extreme circles, some accounts used the AI-generated images to stir up an unfounded conspiracy theory accusing Google of purposely trying to eliminate white people from Gemini image results.

How has Google responded to the Gemini controversy?

Though the controversy surrounding Gemini seems to stem from critics arguing Google doesn’t place enough emphasis on white individuals, experts studying AI have long said AI models do just the opposite and regularly underrepresent nonwhite groups. In a relatively short period of time, AI systems trained on culturally biased datasets have amassed a history of repeating and reinforcing stereotypes about racial minorities. Safety researchers say this is why tech companies building AI models need to responsibly filter and tune their products. Image generators, and AI models more broadly, often repeat or reinforce cultural biases absorbed from their training data, a dynamic researchers sometimes refer to as “garbage in, garbage out.”

A version of this played out following the rollout of OpenAI’s DALL-E image generator in 2022, when AI researchers criticized the company for allegedly reinforcing age-old gender and racial stereotypes. At the time, for example, prompts asking DALL-E for images of a “builder” or a “flight attendant” produced results depicting exclusively men and exclusively women, respectively. OpenAI has since made tweaks to its models to try to address these issues.

It’s possible Google was attempting to counterbalance some biases with Gemini but overcorrected in the process. In a statement released Wednesday, Google said Gemini does generate a wide range of people, which it said is “generally a good thing because people around the world use it.” Google did not respond to PopSci’s questions about why Gemini may have produced the image results in question.

It’s not immediately clear what caused Gemini to produce the images it did, but some commentators have theories. In an interview with Platformer’s Casey Newton on Thursday, former OpenAI head of trust and safety Dave Willner said balancing how AI models responsibly generate content is complex and that Google’s approach “wasn’t exactly elegant.” Willner suspected these missteps could be attributed, at least in part, to a lack of resources provided to Google engineers to approach the nuanced area properly.

Gemini Senior Director of Product Jack Krawczyk addressed the issue in a post on X, where he said the model’s non-white depictions of people reflected the company’s “global user base.” Krawczyk defended Google’s approach to representation and bias, which he said aligned with the company’s core AI principles, but acknowledged that some “inaccuracies” may be occurring in response to historical prompts.

“We are aware that Gemini is offering inaccuracies in some historical image generation depictions, and we are working to fix this immediately,” Krawczyk said in a post on his now-restricted account. “Historical contexts have more nuance to them and we will further tune to accommodate that,” he added.

Neither Krawczyk nor Google immediately responded to criticisms from users who said the racial representation choices extended beyond strictly historical figures. These claims, it’s worth stating, should be taken with a degree of skepticism. Some users reported different experiences, and PopSci was unable to replicate the findings. In some cases, other journalists reported that Gemini had refused to generate images for prompts asking it to depict either Black or white people. In other words, user experiences with Gemini in recent days appear to have varied widely.

Google released a separate statement Thursday saying it was pausing Gemini’s image generation capabilities while it worked to address “inaccuracies” and apparent disproportionate representations. The company said it would release a new version of the model “soon” but didn’t provide a specific date.

Though it’s still unclear what caused Gemini to generate the content that led to its temporary pause, the kind of online blowback Google received is unlikely to end for AI makers anytime soon.